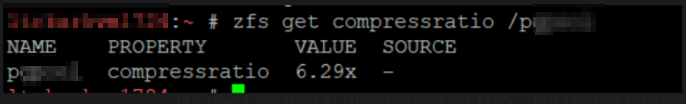

We’ve got several PostgreSQL servers that use the ZFS file system for the database, and I needed to know how compressed the data is. Fortunately, there is a zfs command that does exactly that: it reports the compression ratio for a ZFS file system. Use zfs get compressratio /path/to/mount
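If you want that number inside a script rather than on the terminal, something like the following might work. It is only a sketch: it assumes the zfs binary is on the PATH, that the -H (no header) and -o value (value column only) options behave as they do on current OpenZFS releases, and that the ratio comes back in the usual 1.85x form. The function names are made up for illustration.

```python
import subprocess


def parse_compressratio(raw: str) -> float:
    """Turn zfs output such as '1.85x' into the float 1.85."""
    return float(raw.strip().rstrip("x"))


def get_compressratio(dataset: str) -> float:
    """Ask zfs for the compression ratio of one dataset or mount point.

    Assumes 'zfs get -H -o value compressratio <dataset>' prints a
    single value; raises CalledProcessError if zfs reports an error.
    """
    result = subprocess.run(
        ["zfs", "get", "-H", "-o", "value", "compressratio", dataset],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_compressratio(result.stdout)
```

Calling get_compressratio("/path/to/mount") on a host with ZFS should hand back a plain float that can be logged or graphed.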

Author: Lisa

Web Proxy Auto Discovery (WPAD) DNS Failure

I wanted to set up automatic proxy discovery on our home network, but it just didn’t work. The website is there, it looks fine … but it doesn’t work. Turns out Microsoft introduced a security feature in Windows Server 2008 that prevents Windows DNS servers from serving specific names. They “banned” Web Proxy Auto Discovery (WPAD) and Intra-Site Automatic Tunnel Addressing Protocol (ISATAP). Even if you’ve got a valid wpad.example.com host recorded in your domain, the Windows DNS server says “Nope, no such thing!”. I guess I can appreciate the logic: some malicious actor could hijack all of your connections by tunnelling or proxying your traffic. But … doesn’t the fact I bothered to manually create a hostname kind of clue you in to the fact I am trying to do this?!?

I gave up and added the proxy config to my group policy, which meant a few computers needed to be manually configured. It worked. Later, looking in the event log for a completely different problem, I saw the following entry:

Event ID 6268

The global query block list is a feature that prevents attacks on your network by blocking DNS queries for specific host names. This feature has caused the DNS server to fail a query with error code NAME ERROR for wpad.example.com. even though data for this DNS name exists in the DNS database. Other queries in all locally authoritative zones for other names that begin with labels in the block list will also fail, but no event will be logged when further queries are blocked until the DNS server service on this computer is restarted. See product documentation for information about this feature and instructions on how to configure it.

The oddest bit is that this appears to be a substring ‘starts with’ query — like wpadlet or wpadding would also fail? A quick search produced documentation on this Global Query Blocklist … and two quick ways to resolve the issue.

(1) Change the block list to contain only the services you don’t want to use. I don’t use ISATAP, so blocking isatap* hostnames isn’t problematic:

dnscmd /config /globalqueryblocklist isatap

View the current blocklist with:

dnscmd /info /globalqueryblocklist

– Or –

(2) Disable the block list — more risk, but it avoids having to figure this all out again in a few years when a hostname starting with isatap doesn’t work for no reason!

dnscmd /config /enableglobalqueryblocklist 0
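To confirm from a client that the name resolves after changing the block list, a quick check along these lines could help. This is a sketch using only Python's standard socket module; the function name is hypothetical, and the answer comes from whatever resolver the operating system uses, so a cached negative result may linger for a while after the server-side change.

```python
import socket


def name_resolves(hostname, resolver=socket.getaddrinfo):
    """Return True when the resolver finds at least one address for hostname.

    The resolver argument exists only so the function can be exercised
    without a live DNS server; by default it uses the OS resolver.
    """
    try:
        return len(resolver(hostname, None)) > 0
    except socket.gaierror:
        # A NAME ERROR (NXDOMAIN) answer from the DNS server surfaces here
        return False
```

Running name_resolves("wpad.example.com") before and after the dnscmd change should flip from False to True if the block list was the culprit.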

Let it snow …

Chocolate Crepe Rolled Cake

This was another video recipe that I tried to transcribe into a list of ingredients

Cake Base

Whip together 2 eggs and 70g sugar

Add 1 tablespoon honey, and whip some more

Stir in 20g butter and 30ml milk

Sift together 65g baking flour and 10g unsweetened cocoa, then fold into egg mixture. Pour thin layer in bottom of cake pan and bake — this is the base of the crepe rolled cake.

Crepes

Whisk together 180g flour and 35g unsweetened cocoa

Add a pinch salt and 10g sugar. Whisk to combine.

Blend in 3 eggs, 260g warm milk, and 160g boiling water. Strain to remove any lumps, then mix in 60g of liquid honey and 25 (g? ml?) vegetable oil.

Cover with cling film and rest for 20 mins, then make crepes in a pan.

To make cream cheese filling:

Blend 250g cream cheese, 45g powdered sugar, and 150g Greek yogurt

In a separate bowl, mix 250g cream (33% fat) and 45g powdered sugar. Whip to firm peak state.


Fold whipped cream into cream cheese mixture.

Fold in 20g liquid honey.

Assembly:

Lay out a crepe, coat with whip, and fold in thirds toward center. Repeat with all crepes.

Put cake base on plate, coat with cream mixture. Roll crepes into a big circle, then place on cake layer. Wrap additional crepes around. Chill in fridge for 3-4 hours, preferably overnight.

Dust with powdered sugar. Cut and serve.

Cranberry Crumble

I made a cranberry crumble that Scott found in the local newspaper.

FILLING:

- 2 cups cranberries

- Zest and juice of 1/2 orange

- 1/2 cup maple syrup

- 1 1/2 tablespoons cornstarch

- 1/4 teaspoon ground cinnamon

CRUST:

- 1 1/2 cups all-purpose flour

- 1 1/2 cups almond flour

- 1/2 cup granulated sugar

- 1 teaspoon baking powder

- 1/4 teaspoon salt

- 1/4 teaspoon ground nutmeg

- Zest of 1/2 orange

- 4 tablespoons cold unsalted butter, cubed

- 2 large egg whites

- 1 1/2 teaspoons vanilla extract

Preheat oven to 375F.

Toss cranberries with cornstarch and cinnamon. Add orange zest and mix. Then stir in orange juice and maple syrup.

Combine dry crust ingredients. Cut in butter, then add egg whites and vanilla to form a crumble. Press some into bottom of pan. Cover with cranberry mixture, then crumble crust mixture on top.

Bake at 375F for 40 minutes. Allow to cool.

Lamb Shank Tagine

Cooked lamb shanks in the tagine today

- 4 smoked ancho chillies

- 5 dried apricots

- 4 lamb shanks

- olive oil

- 1 red onion

- 8 cloves of garlic

- 5 fresh red chillies

- 1 heaped teaspoon smoked paprika

- 3 fresh bay leaves

- 2 sprigs of fresh rosemary

- 400 g tin of plum tomatoes

- 1 liter chicken stock

Coat shanks with salt and pepper. Sear shanks in olive oil, then add onions and garlic for a few minutes until fragrant. Remove from pan.

Add stock, plum tomatoes, spices, and peppers. Add shanks back, cover, and simmer at low heat for about 3 hours.

Rose Hip Jelly

Anya and I picked more rose hips today — we’ve got a LOT of the little things. About half a pound.

We boiled them (plus an apple) in enough water to cover by 1″, added a little lemon juice, then drained the juice. Added a whole bunch of sugar to make jelly (14 oz for every pint of juice), and let it cool and congeal overnight.

ElasticSearch — Too Many Shards

Our ElasticSearch environment melted down in a fairly spectacular fashion. Evidently (at least in older versions), an unhandled Java exception is thrown when a server tries to send data to another server that refuses it because accepting the data would put the receiver over the shard limit. So we didn’t just have a server or three go into read-only mode: we had a cascading failure where Java threw the exception and the process died. Restarting the ElasticSearch service temporarily restored functionality, so I quickly increased the max shards per node limit to keep the system up whilst I cleaned up whatever I could clean up:

curl -X PUT http://uid:pass@`hostname`:9200/_cluster/settings -H "Content-Type: application/json" -d '{ "persistent": { "cluster.max_shards_per_node": "5000" } }'

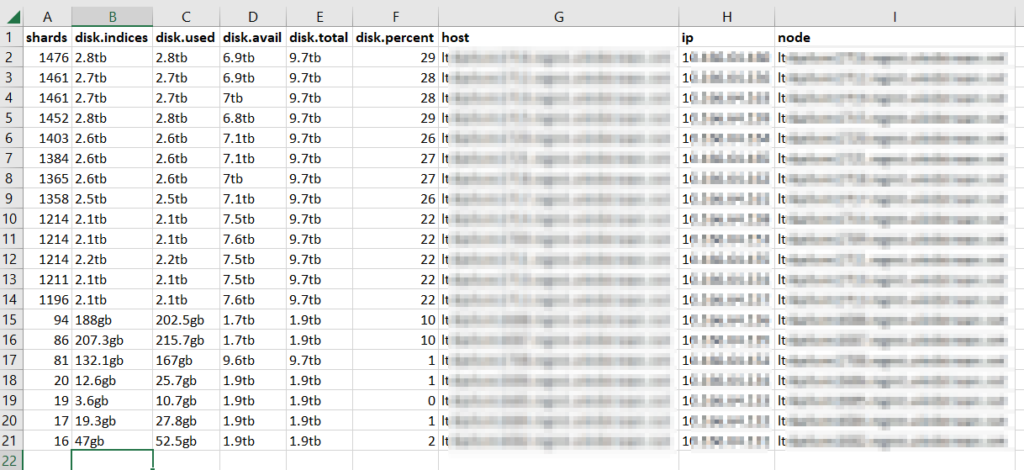

There were two requests against the ES API that were helpful in cleaning ‘stuff’ up — GET /_cat/allocation?v returns a list of each node in the ES cluster with a count of shards (plus disk space) being used. This was useful in confirming that load across ‘hot’, ‘warm’, and ‘cold’ nodes was reasonable. If it was not, we would want to investigate why some nodes were under-allocated. We were, however, fine.

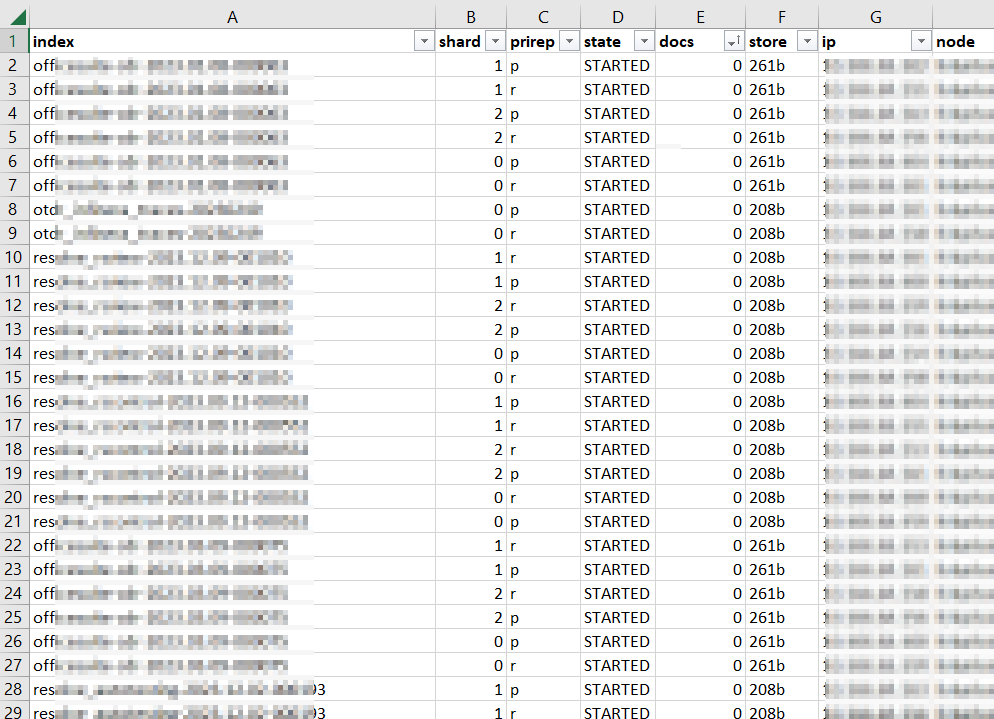

The second request is GET /_cat/shards?v=true, which dumps out all of the shards that make up the stored data. In my case, a lot of clients create a new index daily (MyApp-20231215, for example) and then proceed to add absolutely nothing to that index. Literally 10% of our shards were devoted to storing zero documents! Well, that’s silly. I created a quick script to remove any zero-document index that is older than a week. A new document coming in will create the index again, and we don’t need to waste shards not storing data.
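The cleanup idea can be sketched roughly as below. This is not the script I actually ran, just an illustration under a few assumptions: the cluster answers unauthenticated HTTP at a base URL you supply, GET /_cat/indices with format=json exposes the index, docs.count, and creation.date (epoch milliseconds) columns, and indices whose names start with a dot are system indices that should be left alone. The function names are hypothetical.

```python
import json
import time
import urllib.request

WEEK_MS = 7 * 24 * 60 * 60 * 1000


def stale_empty_indices(rows, now_ms, max_age_ms=WEEK_MS):
    """Pick names of zero-document indices created more than max_age_ms ago.

    rows is the parsed JSON from
    GET /_cat/indices?format=json&h=index,docs.count,creation.date
    where every value arrives as a string.
    """
    picked = []
    for row in rows:
        name = row["index"]
        if name.startswith("."):
            continue  # leave system/hidden indices alone
        if row.get("docs.count") != "0":
            continue  # has documents, or the count is unavailable
        if now_ms - int(row["creation.date"]) > max_age_ms:
            picked.append(name)
    return picked


def cleanup(base_url):
    """Fetch the index list from base_url and delete each stale empty index."""
    url = f"{base_url}/_cat/indices?format=json&h=index,docs.count,creation.date"
    with urllib.request.urlopen(url) as resp:
        rows = json.load(resp)
    for name in stale_empty_indices(rows, int(time.time() * 1000)):
        req = urllib.request.Request(f"{base_url}/{name}", method="DELETE")
        with urllib.request.urlopen(req):
            print(f"Deleted {name}")
```

Keeping the selection logic in its own function makes it easy to print the candidate list and eyeball it before letting cleanup() delete anything.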

Once you’ve cleaned up the shards, it’s a good idea to drop your shard-per-node configuration down again. I’m also putting together a script to run through the allocated shards per node data to alert us when allocation is unbalanced or when total shards approach our limit. Hopefully this will allow us to proactively reduce shards instead of having the entire cluster fall over one night.
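A first pass at that alerting logic might look like the sketch below. It assumes the input is the parsed JSON from GET /_cat/allocation?format=json (rows with node and shards as strings), that every real row is a data node counting toward the limit, and that the cluster-wide ceiling is the per-node setting multiplied by the node count. The 80% and 2x thresholds are arbitrary placeholders, and comparing every node against a single average is crude when hot, warm, and cold tiers are sized differently.

```python
def shard_warnings(rows, max_shards_per_node, fill_threshold=0.8, imbalance_ratio=2.0):
    """Return warning strings for a parsed /_cat/allocation?format=json response."""
    # Skip the UNASSIGNED pseudo-row, which is not a real node
    counts = {r["node"]: int(r["shards"]) for r in rows if r["node"] != "UNASSIGNED"}
    warnings = []
    if not counts:
        return warnings

    total = sum(counts.values())
    limit = max_shards_per_node * len(counts)
    if total >= fill_threshold * limit:
        warnings.append(f"{total} of {limit} shards in use")

    average = total / len(counts)
    for node, count in sorted(counts.items()):
        # Flag nodes carrying far more than their share of the shards
        if average > 0 and count > imbalance_ratio * average:
            warnings.append(f"{node} holds {count} shards, average is {average:.0f}")
    return warnings
```

The returned list can be mailed or pushed to whatever alerting system is already in place; an empty list means nothing tripped either threshold.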

DIFF’ing JSON

While a locally processed web tool like https://github.com/zgrossbart/jdd can be used to identify differences between two JSON files, regular diff can be used from the command line for simple comparisons. Using jq to sort JSON keys, diff will highlight (with pipe bars between the two columns, in this example) where differences appear between two JSON files. Since the keys are sorted, key order doesn’t matter. Array order still does: a list of 1,2,3 in one file and 2,1,3 in the other is not sorted by jq, so it will show up as a difference.

[lisa@fedorahost ~]# diff -y <(jq --sort-keys . 1.json) <(jq --sort-keys . 2.json )

{                                                               {
  "glossary": {                                                   "glossary": {
    "GlossDiv": {                                                   "GlossDiv": {
      "GlossList": {                                                  "GlossList": {
        "GlossEntry": {                                                 "GlossEntry": {
          "Abbrev": "ISO 8879:1986",                                      "Abbrev": "ISO 8879:1986",
          "Acronym": "SGML",                                  |           "Acronym": "XGML",
          "GlossDef": {                                                   "GlossDef": {
            "GlossSeeAlso": [                                               "GlossSeeAlso": [
              "GML",                                                          "GML",
              "XML"                                                           "XML"
            ],                                                              ],
            "para": "A meta-markup language, used to create m               "para": "A meta-markup language, used to create m
          },                                                              },
          "GlossSee": "markup",                                           "GlossSee": "markup",
          "GlossTerm": "Standard Generalized Markup Language"             "GlossTerm": "Standard Generalized Markup Language"
          "ID": "SGML",                                                   "ID": "SGML",
          "SortAs": "SGML"                                    |           "SortAs": "XGML"
        }                                                               }
      },                                                              },
      "title": "S"                                                    "title": "S"
    },                                                              },
    "title": "example glossary"                                     "title": "example glossary"
  }                                                               }
}                                                               }
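The same comparison can be approximated without jq. The sketch below uses only Python's standard json and difflib modules: it re-serializes both documents with sorted keys, then produces a unified diff rather than the side-by-side view above. The function names and the 1.json / 2.json labels are just for illustration, and as with jq, arrays are left in their original order.

```python
import difflib
import json


def normalized_lines(text):
    """Re-serialize JSON text with sorted keys and two-space indentation."""
    return json.dumps(json.loads(text), sort_keys=True, indent=2).splitlines()


def json_diff(left_text, right_text):
    """Return unified-diff lines between two JSON documents, ignoring key order."""
    return list(
        difflib.unified_diff(
            normalized_lines(left_text),
            normalized_lines(right_text),
            fromfile="1.json",
            tofile="2.json",
            lineterm="",
        )
    )
```

Reading each file's contents and printing "\n".join(json_diff(a, b)) gives output that can be pasted into a ticket; an empty result means the two documents differ only in key order or whitespace.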

Bulk Download of YouTube Videos from Channel

Several years ago, I started recording our Township meetings and posting them to YouTube. This was very helpful: even our government officials used the recordings to refresh their memory about what happened in a meeting. But it also led people to ask “why, exactly, are we relying on some random citizen to provide this service? What if they are busy? Or move?!” … and the Township created their own channel and posted their meeting recordings. This was a great way to promote transparency; however, they’ve got retention policies. Since we have absolutely been at meetings where it would be very helpful to know what happened five, ten, forty!! years ago … my expectation is that these videos will be useful far beyond the allotted document retention period.

We decided to keep our channel around with the historic archive of government meeting recordings. There’s no longer time criticality — anyone who wants to see a current meeting can just use the township’s channel. We have a script that lists all of the videos from the township’s channel and downloads them — once I complete back-filling our archive, I will modify the script to stop once it reaches a video series we already have. But this quick script will list all videos published to a channel and download the highest quality MP4 file associated with that video.

# API key for my Google Developer project
strAPIKey = '<CHANGEIT>'
# YouTube account channel ID
strChannelID = '<CHANGEIT>'

import os
import json
import datetime
import urllib.request
from time import sleep

from pytube import YouTube
# config.py must already exist next to this script and define dateLastDownloaded
from config import dateLastDownloaded

# Work from the script's own directory so config.py and the downloads land beside it
os.chdir(os.path.dirname(os.path.abspath(__file__)))
print(os.getcwd())

strBaseVideoURL = 'https://www.youtube.com/watch?v='
strSearchAPIv3URL = 'https://www.googleapis.com/youtube/v3/search?'

iStart = 0      # Leave at 0 normally; set to N to skip the first N files when a batch fails midway
iProcessed = 0  # Just a counter

strStartURL = f"{strSearchAPIv3URL}key={strAPIKey}&channelId={strChannelID}&part=snippet,id&order=date&maxResults=50"
strYoutubeURL = strStartURL

while True:
    with urllib.request.urlopen(strYoutubeURL) as inp:
        resp = json.load(inp)

    for i in resp['items']:
        if i['id']['kind'] == "youtube#video":
            iDaysSinceLastDownload = datetime.datetime.strptime(i['snippet']['publishTime'], "%Y-%m-%dT%H:%M:%SZ") - dateLastDownloaded
            # If video was posted since last run time, download the video
            if iDaysSinceLastDownload.days >= 0:
                strFileName = (i['snippet']['title']).replace('/', '-').replace(' ', '_')
                print(f"{iProcessed}\tDownloading file {strFileName} from {strBaseVideoURL}{i['id']['videoId']}")
                # Need to retrieve a youtube object and filter for the *highest* resolution otherwise we get blurry videos
                if iProcessed >= iStart:
                    yt = YouTube(f"{strBaseVideoURL}{i['id']['videoId']}")
                    yt.streams.filter(progressive=True, file_extension='mp4').order_by('resolution').desc().first().download(filename=f"{strFileName}.mp4")
                    # Pause between downloads
                    sleep(90)
                iProcessed = iProcessed + 1

    # The last page of results has no nextPageToken, which ends the loop
    try:
        next_page_token = resp['nextPageToken']
        strYoutubeURL = strStartURL + '&pageToken={}'.format(next_page_token)
        print(f"Now getting next page from {strYoutubeURL}")
    except KeyError:
        break

# Update config.py with last run date
dateToday = datetime.datetime.now()
with open("config.py", "w") as f:
    f.write("import datetime\n")
    f.write(f"dateLastDownloaded = datetime.datetime({dateToday.year},{dateToday.month},{dateToday.day},0,0,0)")