One giant whiteboard is now hanging in our office, and it’s 10′ long! It took all three of us to hold the board and get it mounted. The brackets are hidden behind the board, which made installation more challenging but looks much better than brackets jutting up from the board.

Author: Lisa

Ohio Nursery Licensing

Anyone growing plants for sale in Ohio needs to have their plants inspected for pests. The idea is similar to not moving firewood to prevent the spread of insects … if you are going to be sending plants elsewhere, it is a good idea to ensure you are not also exporting ecosystem-destroying bugs!

Relevant definitions, including what constitutes ‘nursery stock’, are found in ORC 927.51, and ORC 927.55 lists exceptions where a license/inspection is not required. It appears that you do not need a license to sell plants that cannot overwinter in Ohio (I see the logic there: if a bug or disease impacts PlantX and PlantX is only going to last a few months … we probably don’t need to worry about rampant spread of that bug or disease) or plants in bloom (that’s an odd exception, but it explains how the folks I see selling chrysanthemums in the autumn do so without a license). There is also a dealer license, but that is for resellers; nurseries do not appear to need one. A nursery can, instead, get a license for additional sales locations.

The nursery license is about $100 a year (plus $11 per acre of production space), and you can apply for a permit online at https://www.apps.agri.ohio.gov/NILS
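As a quick worked example of the fee arithmetic, here is a rough estimate using the approximate figures above. It assumes acreage is counted in whole acres, which is my assumption, not something stated in the rules:

```python
# Rough estimate of the annual Ohio nursery license cost, using the
# approximate figures above: about $100/year plus $11 per acre of production space.
# Assumption: acreage is counted in whole acres.
BASE_FEE = 100
PER_ACRE_FEE = 11

def estimated_nursery_fee(acres: int) -> int:
    """Return the estimated annual license fee in dollars."""
    if acres < 0:
        raise ValueError("acreage cannot be negative")
    return BASE_FEE + PER_ACRE_FEE * acres

for acres in (1, 3, 10):
    print(f"{acres} acre(s): about ${estimated_nursery_fee(acres)}")
```

So a three-acre operation would be looking at roughly $133 a year.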

There is an annual inspection of the growing facility and plants — presuming the inspection doesn’t identify any serious pest or disease infestations, a certificate is issued. The certificate must be displayed in the nursery.

If you are selling plants to be resold (wholesale or otherwise), a copy of the certificate must be included on each box/package sent out. If you plan to ship plants outside of Ohio, other states may require a phytosanitary certificate from the Ohio Department of Agriculture. If you plan to ship outside of the United States, there’s an additional federal phytosanitary certificate process through the US Department of Agriculture.

Happy February — and duck eggs

Happy February! We got our first duck eggs yesterday — I wasn’t expecting them to be laying again so soon. Anya and I took a break after lunch to cut dried grasses for the ducks’ bedding. As we opened the coop to put in the new bedding, there were two duck eggs in the farthest, darkest corner of the coop.

Anya’s Posting Challenge — Again — And Pie

Anya said I have too much “computer stuff” on my website again — not enough kittens and pie and fun stuff. So I am not allowed to post anything about computers for ALL OF FEBRUARY! And, if I do post about it, I get March added on. And another month for the next infraction, and so on. So … umm … pie!

Anya made a peach pie. I had extra pie crust dough from making a quiche a few nights ago, and we had frozen sliced peaches last summer when we picked up a big pile of fresh peaches. I made a maple sugar crumble (maple sugar instead of brown sugar in a crumble recipe) for the topping. Anya used cookie cutters to decorate the pie with maple leaves and a snowflake. It was very tasty!

Python Script: Checking Certificate Expiry Dates

A long time ago, before the company I work for paid for an external SSL certificate management platform that does nice things like clue you in to the fact your cert is expiring tomorrow night, we had an outage due to an expired certificate. One. I put together a Perl script that parsed output from the openssl client and proactively alerted us to any pending expiration events.

That script went away quite some time ago, although I still have a copy running at home that ensures our SMTP and web server certs are current. This morning, though, our K8s environment fell over due to expired certificates. Digging into it, I found the certs were in the management platform (my first guess was self-signed certificates that wouldn’t have been included in the pending expiry notices), but the notices were not delivered to those of us who actually manage the servers. Luckily it was our dev K8s environment, and we now know the prod one will be expiring in a week or so.

But I figured it was a good impetus to resurrect the old script. Unfortunately, none of the modules I used for date calculation were installed on our script server, which seemed like a hint that I should rewrite the script in Python. So … here is a quick Python script that gets certificates from hosts and calculates how long until each certificate expires. Add an “if” statement and a notification function, and we shouldn’t come in to failed environments needing certificate renewals.

```python
from cryptography import x509
from cryptography.hazmat.backends import default_backend
import socket
import ssl
from datetime import datetime

# Dictionary of host:port combinations to check for expiry
dictHostsToCheck = {
    "tableau.example.com": 443,      # Tableau
    "kibana.example.com": 5601,      # ELK Kibana
    "elkmaster.example.com": 9200,   # ELK Master
    "kafka.example.com": 9093,       # Kafka server
}

for strHostName, iPort in dictHostsToCheck.items():
    # not_valid_after is a naive datetime in UTC, so compare it against naive UTC "now"
    datetimeNow = datetime.utcnow()

    # Create default context
    context = ssl.create_default_context()

    # Do not verify cert chain or hostname so we ensure we always check the certificate
    context.check_hostname = False
    context.verify_mode = ssl.CERT_NONE

    # Time out rather than hang if a host is unreachable
    with socket.create_connection((strHostName, iPort), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=strHostName) as ssock:
            # getpeercert(True) returns the raw DER bytes even with CERT_NONE
            objDERCert = ssock.getpeercert(True)

    objPEMCert = ssl.DER_cert_to_PEM_cert(objDERCert)
    objCertificate = x509.load_pem_x509_certificate(str.encode(objPEMCert), backend=default_backend())

    # On cryptography 42+, not_valid_after_utc (timezone-aware) is the non-deprecated equivalent
    print(f"{strHostName}\t{iPort}\t{objCertificate.not_valid_after}\t{(objCertificate.not_valid_after - datetimeNow).days} days")
```
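Here is a minimal sketch of that “if” statement and notification function. The 14-day threshold, the expiry_alert and notify names, and the print-based notifier are all hypothetical placeholders; wire notify up to whatever alerting you actually use:

```python
from datetime import datetime
from typing import Optional

# Hypothetical threshold: alert when fewer than this many days remain
WARN_DAYS = 14

def expiry_alert(host: str, port: int, not_valid_after: datetime,
                 now: datetime, warn_days: int = WARN_DAYS) -> Optional[str]:
    """Return an alert message if the cert expires within warn_days, else None.

    Both datetimes are expected to be naive UTC, matching the script above.
    A negative day count means the certificate has already expired.
    """
    days_left = (not_valid_after - now).days
    if days_left < warn_days:
        return f"{host}:{port} certificate expires {not_valid_after:%Y-%m-%d} ({days_left} days)"
    return None

def notify(message: str) -> None:
    """Placeholder notifier: swap in e-mail, a chat webhook, a ticket, etc."""
    print(f"ALERT: {message}")
```

Inside the loop above, you would call `strAlert = expiry_alert(strHostName, iPort, objCertificate.not_valid_after, datetimeNow)` and then `notify(strAlert)` whenever it returns a message.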

Visualizing GeoIP Information in Kibana

Before we can use map details in Kibana visualizations, we need to add fields with the geographic information. The first few steps are something the ELK admin staff will need to do in order to map source and/or destination IPs to geographic information.

First, update the relevant index template to map the location information into geo_point fields by loading this JSON (but first make sure there aren’t existing mappings; otherwise, you’ll need to merge the new geoip_src and geoip_dst elements in with the existing JSON):

```json
{
  "_doc": {
    "_meta": {},
    "_source": {},
    "properties": {
      "geoip_dst": {
        "dynamic": true,
        "type": "object",
        "properties": {
          "ip": { "type": "ip" },
          "latitude": { "type": "half_float" },
          "location": { "type": "geo_point" },
          "longitude": { "type": "half_float" }
        }
      },
      "geoip_src": {
        "dynamic": true,
        "type": "object",
        "properties": {
          "ip": { "type": "ip" },
          "latitude": { "type": "half_float" },
          "location": { "type": "geo_point" },
          "longitude": { "type": "half_float" }
        }
      }
    }
  }
}
```

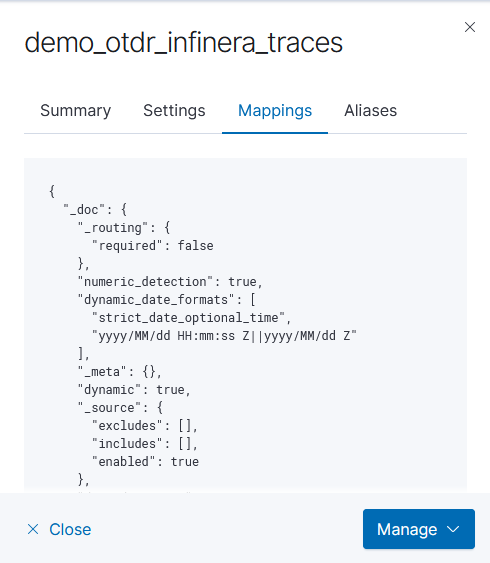

To check for existing mappings, click on the index template name to view its settings. Click to the ‘Mappings’ tab and copy what is in there.

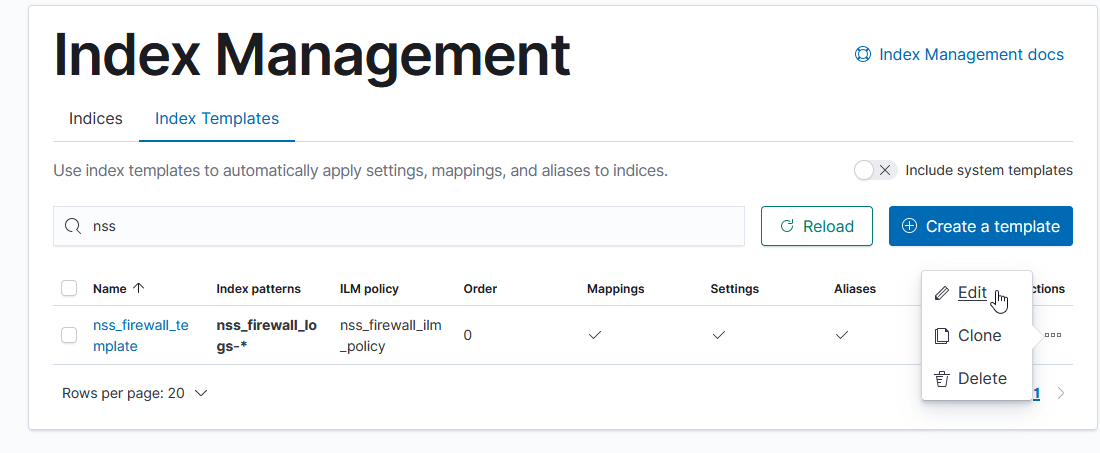

Merge the two ‘properties’ from the JSON above into what you copied, then edit the index template.

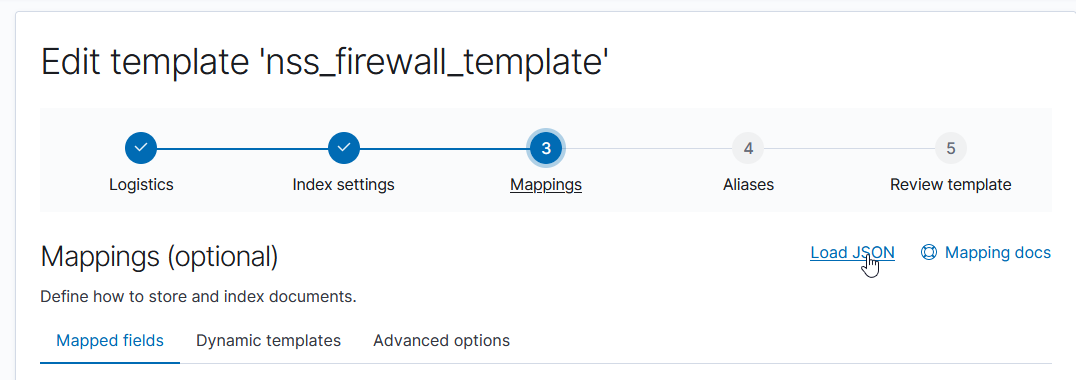

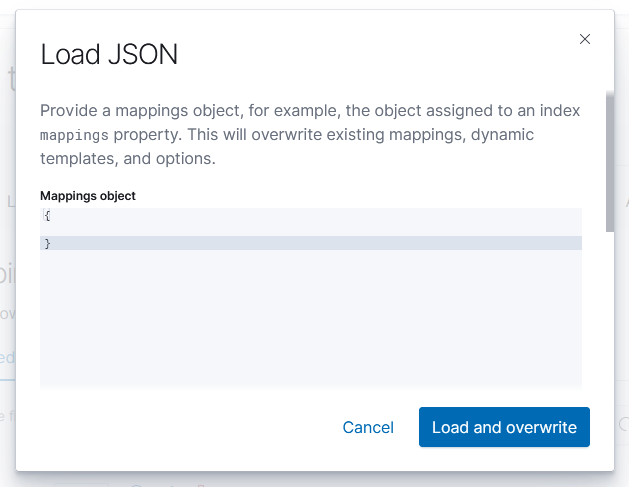

Click to the “Mappings” section and use “Load JSON” to import the new mapping configuration.

Paste in your JSON and click “Load & Overwrite”.

Voila – you will have geo-point items in the template.

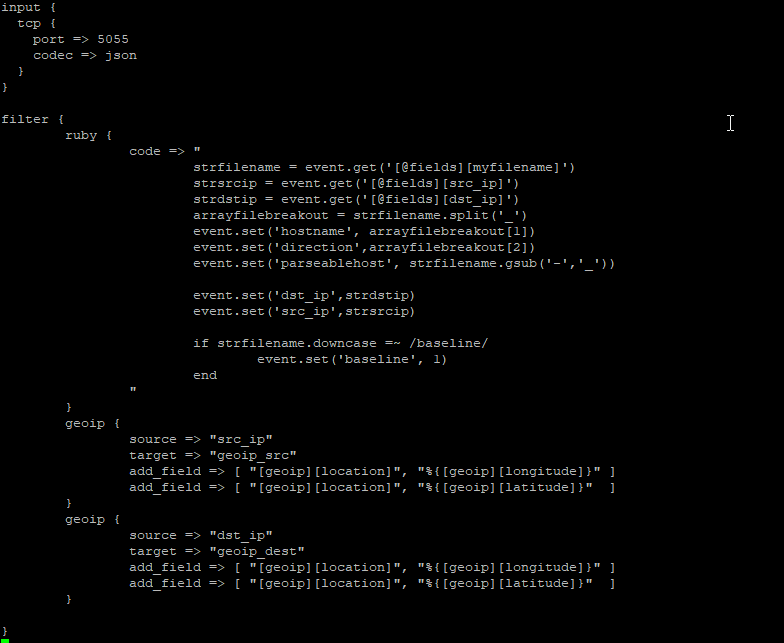
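If the copied mapping is long, the merge can also be scripted instead of done by hand. Below is a minimal Python sketch, assuming you have the copied mapping and the new geoip JSON as dictionaries; the sample field names (such as the existing src_ip field) are illustrative only. Nested objects such as "properties" are merged key by key, and any other value from the new JSON overwrites the old one:

```python
import json

def merge_mappings(existing: dict, additions: dict) -> dict:
    """Recursively merge 'additions' into a copy of 'existing'.

    Nested dicts (such as "properties") are merged key by key; for any other
    value, the entry from 'additions' wins. Neither input is modified.
    """
    merged = dict(existing)
    for key, value in additions.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_mappings(merged[key], value)
        else:
            merged[key] = value
    return merged

# Illustrative inputs: a copied mapping with one existing field, plus one geoip object
existing_mapping = {"_doc": {"properties": {"src_ip": {"type": "ip"}}}}
geoip_additions = {
    "_doc": {
        "properties": {
            "geoip_src": {"type": "object", "properties": {"location": {"type": "geo_point"}}}
        }
    }
}

merged_mapping = merge_mappings(existing_mapping, geoip_additions)
print(json.dumps(merged_mapping, indent=2))
```

The printed JSON is what you would paste into “Load JSON”.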

Next, the Logstash pipeline needs to be configured to enrich log records with GeoIP information. There is a geoip filter available, which uses the MaxMind GeoIP database (this is refreshed automatically; currently, we do not merge in any GeoIP information for the private network address spaces). You just need to indicate which field(s) contain the IP address and where the location information should be stored. You can have multiple geographic IP fields; in this example, we map both source and destination IP addresses.

```
geoip {
  source => "src_ip"
  target => "geoip_src"
  # In the filter's legacy (non-ECS) output, [geoip_src][location] is already
  # populated with lat/lon, which the geo_point mapping above picks up
}

geoip {
  source => "dst_ip"
  # Use the same geoip_dst name as the index template mapping
  target => "geoip_dst"
}
```

Once Logstash is restarted, the documents stored in Elasticsearch (and visible in Kibana) will have geoip_src and geoip_dst fields:

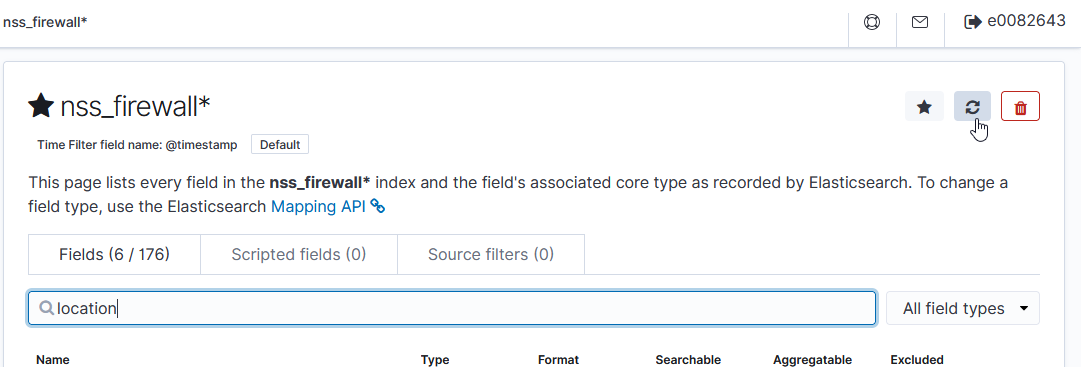

Once relevant data is being stored, use the refresh-looking button on the index pattern(s) to refresh the field list from stored data. This will add the geo-point items into the index pattern.

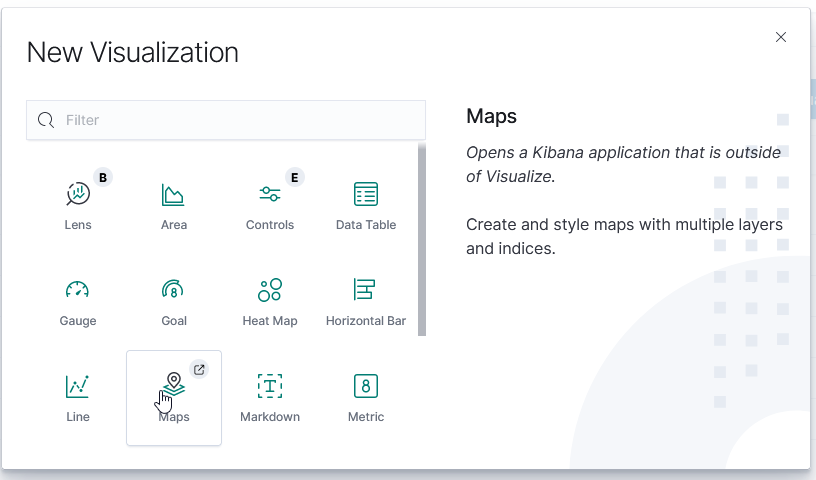

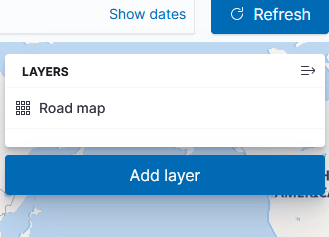

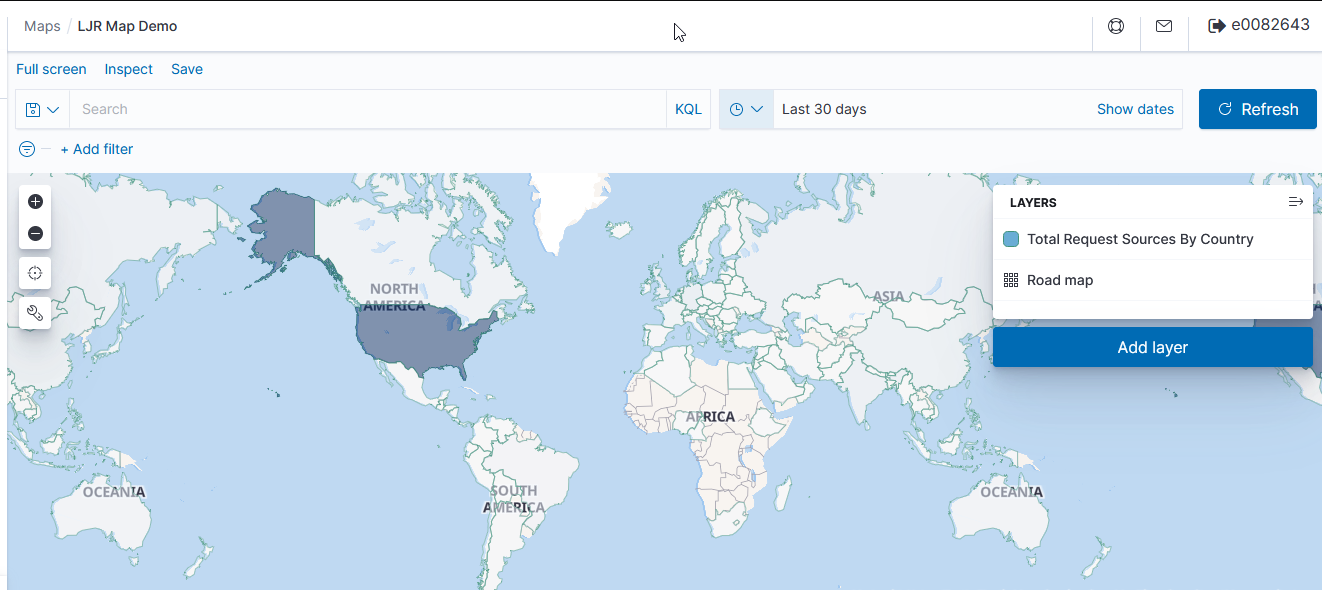
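The Maps “Documents” source can only offer fields that Elasticsearch has mapped as geo_point (or geo_shape), so it helps to confirm the mapping before refreshing the index pattern. A minimal sketch that walks the properties section of a mapping (for example, from the JSON returned by Elasticsearch’s `GET <index>/_mapping` API) and lists any geo_point fields; the sample is a trimmed copy of the template above plus a hypothetical src_ip field:

```python
from typing import List

def geo_point_fields(properties: dict, prefix: str = "") -> List[str]:
    """Return dotted names of every geo_point field in a 'properties' mapping."""
    found = []
    for name, spec in properties.items():
        path = f"{prefix}{name}"
        if spec.get("type") == "geo_point":
            found.append(path)
        # Object fields keep their sub-fields under a nested "properties" key
        if "properties" in spec:
            found.extend(geo_point_fields(spec["properties"], prefix=f"{path}."))
    return found

# Trimmed-down sample based on the template mapping above
sample_properties = {
    "geoip_dst": {"type": "object", "properties": {"ip": {"type": "ip"}, "location": {"type": "geo_point"}}},
    "geoip_src": {"type": "object", "properties": {"ip": {"type": "ip"}, "location": {"type": "geo_point"}}},
    "src_ip": {"type": "ip"},
}
print(geo_point_fields(sample_properties))
```

If the list comes back empty for your live index, the template was not applied to it, and refreshing the index pattern will not help.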

Once GeoIP information is available in the index pattern, select the “Maps” visualization.

Leave the road map layer there (otherwise you won’t see the countries!).

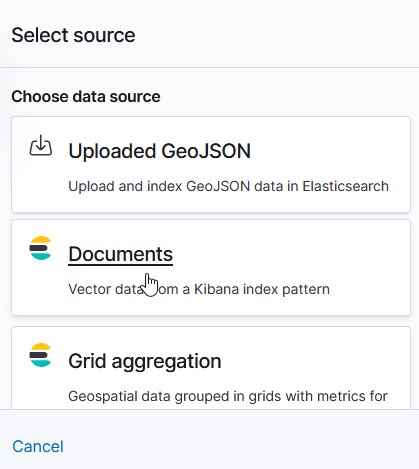

Select ‘Documents’ as the data source to link in Elasticsearch data.

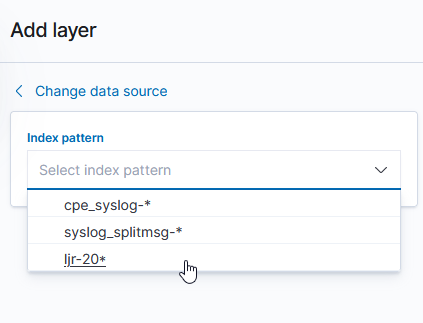

Select the index pattern that contains your data source (if your index pattern does not appear, then Kibana doesn’t recognize the pattern as containing geographic fields … I’ve had to delete and recreate my index pattern so the geographic fields were properly mapped).

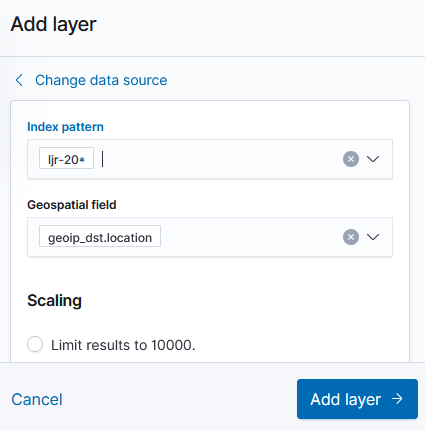

And select the field(s) that contain geographic details:

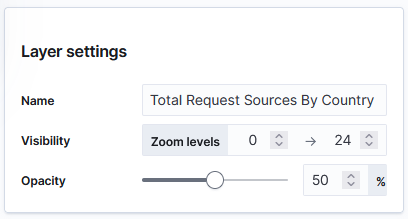

You can name the layer.

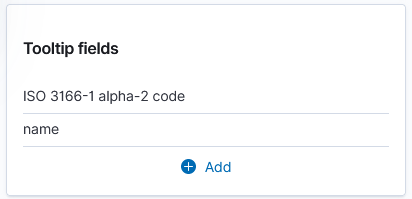

And add a tooltip that will include the country code or name.

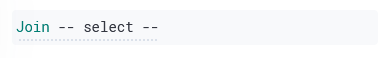

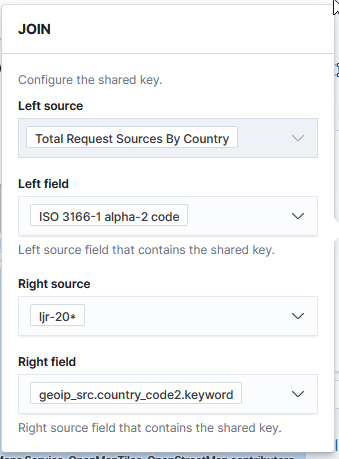

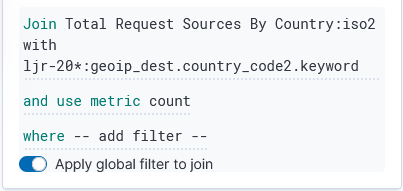

Under “Term joins”, add a new join. Click on “Join –select–” to link a field from the map to a field in your dataset.

In this case, I am joining on the two-character country codes.

Normally, you can leave the “and use metric count” in place (the map is color-coded by the number of requests coming from each country). If you want to add a filter, you can click the “where — add filter –” link to edit the filter.

In this example, I don’t want to filter the data, so I’ve left that at the default.
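Conceptually, a term join with the default count metric tallies how many documents carry each two-character country code and shades each country by that tally. A minimal sketch of the same tally in Python, over a few made-up documents; the country_code2 field name is an assumption based on the geoip filter’s legacy output, so adjust it to whatever your documents actually contain:

```python
from collections import Counter

def requests_per_country(docs, target="geoip_src", code_field="country_code2"):
    """Count documents per two-character country code; skip docs without one."""
    counts = Counter()
    for doc in docs:
        code = doc.get(target, {}).get(code_field)
        if code:
            counts[code] += 1
    return counts

# Made-up sample documents; the last has no geoip data (e.g. a private source IP)
sample_docs = [
    {"geoip_src": {"country_code2": "US"}},
    {"geoip_src": {"country_code2": "US"}},
    {"geoip_src": {"country_code2": "DE"}},
    {"src_ip": "10.0.0.5"},
]
print(requests_per_country(sample_docs).most_common())
```

Documents without a country code simply drop out of the tally, which is why private-address traffic never shows up on the map.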

Click “Save & close” to save the changes to the map visualization. To view your map, you won’t find it under Visualizations – instead, click “Maps” along the left-hand navigation menu.

Voila – a map where the shading on a country gets darker the more requests have come from the country.

Internal Addresses

If we want to (and if we have information to map IP subnets to city/state/ZIP/lat-long, etc.), we can edit the database used for GeoIP mappings: https://github.com/maxmind/getting-started-with-mmdb provides a Perl module that interacts with the database file. That isn’t currently done, so internal servers whose traffic comes primarily from private address spaces won’t have particularly thrilling map data.
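Before anything can be written into an mmdb file, you need the subnet-to-location table itself. The sketch below is not the mmdb format or MaxMind’s API; it is a plain-Python illustration, using hypothetical subnets and site names, of the most-specific-subnet lookup such a table implies:

```python
import ipaddress
from typing import Optional

# Hypothetical internal subnet-to-site table (example data only)
SITE_SUBNETS = {
    "10.1.0.0/16": {"site": "Main campus"},
    "10.1.20.0/24": {"site": "Branch office"},
}

# Parse the CIDR strings once up front
NETWORKS = [(ipaddress.ip_network(cidr), info) for cidr, info in SITE_SUBNETS.items()]

def lookup_internal(ip: str) -> Optional[dict]:
    """Return site info for a private address, preferring the most specific subnet."""
    addr = ipaddress.ip_address(ip)
    if not addr.is_private:
        return None  # public addresses are left to the MaxMind database
    matches = [(net, info) for net, info in NETWORKS if addr in net]
    if not matches:
        return None
    # The longest prefix (most specific subnet) wins
    _, info = max(matches, key=lambda match: match[0].prefixlen)
    return info

for ip in ("10.1.20.15", "10.1.5.7", "192.168.1.10", "8.8.8.8"):
    print(ip, lookup_internal(ip))
```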

Executive Defenses

I often think of how chaotic the criminal justice system would be if people could use any defense that a chief executive tries to float … I mean, yeah, I robbed that bank. But not a single person there stopped me to point out that robbing a bank is illegal. So, obvs, robbing banks is a perfect banking trip. Top notch, did nothing wrong.

Winter Wonderland

First Buzzard Sighting – 2023

We were building our log archway this evening, and I thought there were a few geese coming in off the lake … but they were flying strangely (well, strangely for geese anyway). Gliding through the air were five buzzards! They are really early this year (although they were also flying south … so maybe they thought better of hanging out this far north in January?).

Kafka Manager SSL Issue

We renewed the certificate on our Kafka Manager (now called CMAK, but we haven’t upgraded yet, so it’s still ‘manager’), but the site wouldn’t come up. It did, however, dump a bunch of Java ick into the log file:

```
Jan 16 14:01:52 kafkamanager kafka-manager: [error] p.c.s.NettyServer$PlayPipelineFactory - cannot load SSL context
Jan 16 14:01:52 kafkamanager kafka-manager: java.lang.reflect.InvocationTargetException: null
Jan 16 14:01:52 kafkamanager kafka-manager: at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) ~[na:1.8.0_251]
Jan 16 14:01:52 kafkamanager kafka-manager: at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) ~[na:1.8.0_251]
Jan 16 14:01:52 kafkamanager kafka-manager: at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) ~[na:1.8.0_251]
Jan 16 14:01:52 kafkamanager kafka-manager: at java.lang.reflect.Constructor.newInstance(Constructor.java:423) ~[na:1.8.0_251]
Jan 16 14:01:52 kafkamanager kafka-manager: at play.core.server.ssl.ServerSSLEngine$.createScalaSSLEngineProvider(ServerSSLEngine.scala:96) ~[com.typesafe.play.play-server_2.11-2.4.6.jar:2.4.6]
Jan 16 14:01:52 kafkamanager kafka-manager: at play.core.server.ssl.ServerSSLEngine$.createSSLEngineProvider(ServerSSLEngine.scala:32) ~[com.typesafe.play.play-server_2.11-2.4.6.jar:2.4.6]
Jan 16 14:01:52 kafkamanager kafka-manager: at play.core.server.NettyServer$PlayPipelineFactory.liftedTree1$1(NettyServer.scala:113) [com.typesafe.play.play-netty-server_2.11-2.4.6.jar:2.4.6]
Jan 16 14:01:52 kafkamanager kafka-manager: at play.core.server.NettyServer$PlayPipelineFactory.sslEngineProvider$lzycompute(NettyServer.scala:112) [com.typesafe.play.play-netty-server_2.11-2.4.6.jar:2.4.6]
Jan 16 14:01:52 kafkamanager kafka-manager: at play.core.server.NettyServer$PlayPipelineFactory.sslEngineProvider(NettyServer.scala:111) [com.typesafe.play.play-netty-server_2.11-2.4.6.jar:2.4.6]
Jan 16 14:01:52 kafkamanager kafka-manager: at play.core.server.NettyServer$PlayPipelineFactory.getPipeline(NettyServer.scala:90) [com.typesafe.play.play-netty-server_2.11-2.4.6.jar:2.4.6]
Jan 16 14:01:52 kafkamanager kafka-manager: Caused by: java.lang.Exception: Error loading HTTPS keystore from /path/to/kafkamgr.example.net.jks
Jan 16 14:01:52 kafkamanager kafka-manager: at play.core.server.ssl.DefaultSSLEngineProvider.createSSLContext(DefaultSSLEngineProvider.scala:47) ~[com.typesafe.play.play-server_2.11-2.4.6.jar:2.4.6]
Jan 16 14:01:52 kafkamanager kafka-manager: at play.core.server.ssl.DefaultSSLEngineProvider.<init>(DefaultSSLEngineProvider.scala:21) ~[com.typesafe.play.play-server_2.11-2.4.6.jar:2.4.6]
Jan 16 14:01:52 kafkamanager kafka-manager: at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) ~[na:1.8.0_251]
Jan 16 14:01:52 kafkamanager kafka-manager: at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) ~[na:1.8.0_251]
Jan 16 14:01:52 kafkamanager kafka-manager: at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) ~[na:1.8.0_251]
Jan 16 14:01:52 kafkamanager kafka-manager: at java.lang.reflect.Constructor.newInstance(Constructor.java:423) ~[na:1.8.0_251]
Jan 16 14:01:52 kafkamanager kafka-manager: at play.core.server.ssl.ServerSSLEngine$.createScalaSSLEngineProvider(ServerSSLEngine.scala:96) ~[com.typesafe.play.play-server_2.11-2.4.6.jar:2.4.6]
Jan 16 14:01:52 kafkamanager kafka-manager: at play.core.server.ssl.ServerSSLEngine$.createSSLEngineProvider(ServerSSLEngine.scala:32) ~[com.typesafe.play.play-server_2.11-2.4.6.jar:2.4.6]
Jan 16 14:01:52 kafkamanager kafka-manager: at play.core.server.NettyServer$PlayPipelineFactory.liftedTree1$1(NettyServer.scala:113) [com.typesafe.play.play-netty-server_2.11-2.4.6.jar:2.4.6]
Jan 16 14:01:52 kafkamanager kafka-manager: at play.core.server.NettyServer$PlayPipelineFactory.sslEngineProvider$lzycompute(NettyServer.scala:112) [com.typesafe.play.play-netty-server_2.11-2.4.6.jar:2.4.6]
Jan 16 14:01:52 kafkamanager kafka-manager: Caused by: java.security.UnrecoverableKeyException: Cannot recover key
Jan 16 14:01:52 kafkamanager kafka-manager: at sun.security.provider.KeyProtector.recover(KeyProtector.java:315) ~[na:1.8.0_251]
Jan 16 14:01:52 kafkamanager kafka-manager: at sun.security.provider.JavaKeyStore.engineGetKey(JavaKeyStore.java:141) ~[na:1.8.0_251]
Jan 16 14:01:52 kafkamanager kafka-manager: at sun.security.provider.JavaKeyStore$JKS.engineGetKey(JavaKeyStore.java:56) ~[na:1.8.0_251]
Jan 16 14:01:52 kafkamanager kafka-manager: at sun.security.provider.KeyStoreDelegator.engineGetKey(KeyStoreDelegator.java:96) ~[na:1.8.0_251]
```

Elsewhere in the log file, we got output that looks like not-decrypted stuff … (most likely a browser’s TLS handshake being read as plain HTTP, since the SSL context never loaded):

```
Jan 16 14:01:52 kafkamanager kafka-manager: java.lang.IllegalArgumentException: invalid version format:  ̄G^H▒~A�▒~Zᆴ▒~@▒~A:U▒~HP▒~W5▒~W▒D¬ᄡ^K/↓▒ ^S▒L
Jan 16 14:01:52 kafkamanager kafka-manager: "^S^A^S^C^S^B▒~@+▒~@/▒~Lᄅ▒~Lᄄ▒~@,▒~@0▒~@
```

That led me to hypothesize that either the keystore password wasn’t right (it was; I could use keytool to view the JKS file) or the key password wasn’t right. The key password was the culprit, which fits the “UnrecoverableKeyException: Cannot recover key” at the bottom of the stack trace. There isn’t actually a way to configure the key password in Kafka Manager, just a parameter to configure the keystore password, so you’ve got to re-use the keystore password as the key password.

To change the key password in a JKS file, run keytool with -keypasswd, enter the keystore password and the current key password when prompted, then enter the new key password when prompted:

```shell
keytool -keypasswd -alias kafkamanager.example.net -keystore ljr.jks
```

Voila — once both the key and keystore matched the password configured in play.server.https.keyStore.password … the Kafka Manager service started up and worked properly.