Our buckwheat is starting to form seeds!

Author: Lisa

Indigo Bunting

I like watching the goldfinches eating the ornamental grass seeds. Today, though, this blue bird showed up too. Looking up small blue birds, we found a rare blue bird native to, like, Venezuela … seemed rather surprising to see one here. And then I scrolled to the next small blue bird — the Indigo Bunting — which is fairly common and native to our area. So … yeah, I’m going to go with Indigo Bunting.

Arguing with the science

A week or so ago, I came across an article referencing a book about how climate impact will be inequitable and, while reading the article, I rather disagreed with some of their assumptions. I later encountered an online discussion about the article which included, among a few other dissenters, an admonishment not to “argue with the science”. Problem, there, is that arguing with the science is the whole point of the scientific method. It is the point of peer-reviewed publications. And, really, modeling the socio-economic impact of climate change (or even modeling climate change itself) isn’t a science like modeling gravity or radioactive decay. These kinds of models usually involve a lot of possible outcomes with associated probabilities. And ‘argue with the science’ I will!

Certainly, some of the rich will move out first. You can air condition your house and car into being habitable. Companies can set up valet services for everything. But your chosen location is becoming very limiting – no outdoor concerts, no outdoor sports games. You can make it habitable, but you could also spend some money, live elsewhere, and have oh so many more options. Most likely you’d see an increase in second homes – Arizona for the winter and a place up north for summers. Which might not show up as ‘migration’ depending on which they use as their ‘permanent’ address.

People with fewer resources, though, face obstacles to moving. Just changing jobs is challenging. It’s one thing to transfer offices in a large company or be a remote employee who can live anywhere. But can a cashier at Walmart ask their manager to get transferred from Phoenix to Boston? What about employees of smaller businesses that don’t have a more northern location? Going a few weeks without pay on top of moving expense (that rental deposit is a huge one – I’ve known many people stuck in a crappy apartment because they have to save the deposit to move. Sure you get your previous deposit back, but that takes weeks)? Really makes me question the reality of mass migration of poor people.

Adding Sony SNC-DH220T Camera to Zoneminder

We recently picked up a mini dome IP camera — much better resolution than the old IP cams we got when Anya was born — and it took a little trial-and-error to get it set up in Zoneminder. The first thing we did was update the firmware using Sony’s SNCToolbox, configure the camera as we wanted it, and add a “Viewer” user for zoneminder.

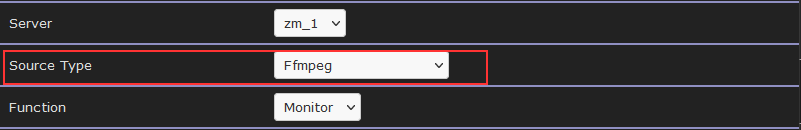

With all that done, the trick is to add an FFMPEG source with the right RTSP address. On the ‘General’ tab, select “Ffmpeg” as the source type:

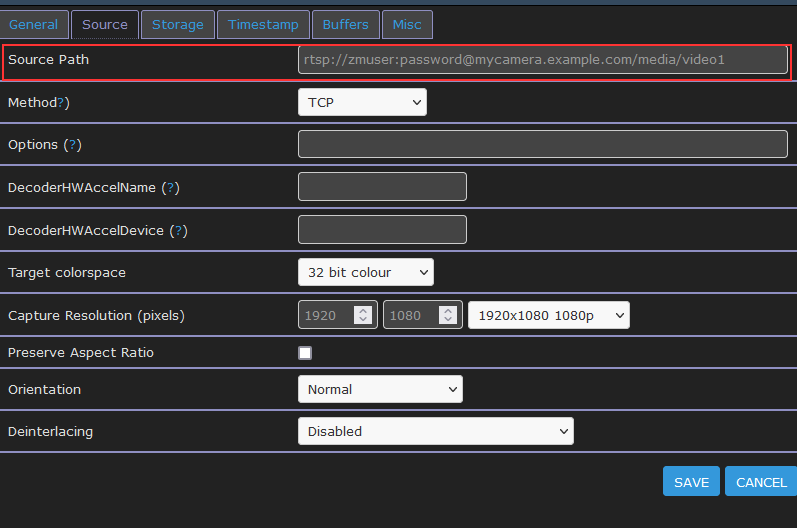

On the ‘Source’ tab, you need to use the right source path. For video stream one, that is rtsp://zmuser:password@mycamera.example.com/media/video1 — change video1 to video2 for the second video stream, if available. And, obviously, use the account you created on your camera for zoneminder and whatever password. Since it’s something that gets stored in clear text, I make a specific zmuser account with a password we don’t use elsewhere. We’ve used both ‘TCP’ and ‘UDP’ successfully, although there was a lot of streaking with UDP.
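If the password has characters like @ or : in it, the source path gets ambiguous. Here is a minimal Python sketch for building the path with the credentials percent-encoded. The function name and the stream parameter are mine, and whether ffmpeg and the camera accept percent-encoded credentials is an assumption worth testing before relying on it.

```python
from urllib.parse import quote


def rtsp_source_path(host, user, password, stream=1):
    """Build the RTSP source path for video stream 1 or 2 (hypothetical helper)."""
    # Percent-encode the credentials so '@' or ':' in them cannot be
    # mistaken for URL delimiters.
    credentials = f"{quote(user, safe='')}:{quote(password, safe='')}"
    return f"rtsp://{credentials}@{host}/media/video{stream}"


print(rtsp_source_path("mycamera.example.com", "zmuser", "password"))
print(rtsp_source_path("mycamera.example.com", "zmuser", "p@ss:word", stream=2))
```

The first call reproduces the address used above; the second shows what an awkward password would look like once encoded.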

Save, give it a minute, and voila … you’ve got a Sony SNC-DH220T camera in Zoneminder!

Maple Pecan Pie Filling

- 2 1/2 cups pecan halves

- 5 Tbsp melted butter, cooled

- 1/2 cup brown sugar

- 1 Tbsp all-purpose flour

- 1 Tbsp vanilla extract

- 1/2 tsp salt

- 3 eggs

- 1 cup maple syrup

This recipe requires the pie crust to be blind baked — do that first and allow the crust to cool! Preheat oven to 350F.

Lay the pecan halves over the bottom of the pie crust.

Whisk together the butter, brown sugar, flour, vanilla, salt, eggs, and maple syrup. Pour over the pecans.

Put a pie crust shield on to protect the edge of the pie from overcooking. Bake for 45 minutes until the top is browned slightly.

Using Screen to Access Console Port

We needed to console into some Cisco access points — RJ45 to USB to plug into the device console port and the laptop’s USB port? Check! OK … now what? Turns out you can use the screen command as a terminal emulator. The basic syntax is screen <port> <baud rate> — since the documentation said to use 9600 baud and the access point showed up on /dev/ttyUSB0, this means running:

screen /dev/ttyUSB0 9600

More completely, the syntax is screen <port> <baud rate>,<7 or 8 bits per byte>,<enable or disable sending flow control>,<enable or disable receiving flow control>,<keep or clear the eighth bit in each byte>

screen /dev/ttyUSB0 9600,cs8,ixon,ixoff,istrip

- or -

screen /dev/ttyUSB0 9600,cs7,-ixon,-ixoff,-istrip
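Since I can never remember which setting goes where, here is a small Python sketch that assembles the command from named options. The function and its defaults are my own invention for illustration; only the option strings (cs7/cs8, ixon, ixoff, istrip and their dash-prefixed opposites) come from the syntax above.

```python
import shlex


def screen_command(port, baud, bits=8, ixon=True, ixoff=True, istrip=False):
    """Return the argument list for a serial console session (hypothetical helper)."""
    if bits not in (7, 8):
        raise ValueError("bits per byte must be 7 or 8")
    settings = [
        str(baud),
        f"cs{bits}",                          # 7 or 8 bits per byte
        "ixon" if ixon else "-ixon",          # flow control when sending
        "ixoff" if ixoff else "-ixoff",       # flow control when receiving
        "istrip" if istrip else "-istrip",    # clear or keep the eighth bit
    ]
    return ["screen", port, ",".join(settings)]


print(shlex.join(screen_command("/dev/ttyUSB0", 9600)))
print(shlex.join(screen_command("/dev/ttyUSB0", 9600, bits=7, ixon=False, ixoff=False)))
```

The returned list could be handed to subprocess.run to start the session, although I just copy and paste the printed command.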

Macrame Project – Hanging Plant Basket

Scott got a hoya earlier this year, and it is about time to transplant it into a larger pot. He wants to be able to hang it in the window to get plenty of light — so I’m making a basket to hold the plant.

The main part of the planter is 16 strands of 18 feet each that will be folded in half and arranged as four sets of four strands. Additionally, I need a 6.5-foot strand to wrap the hanging loop and another three-foot section for gathering at the base of the loop. Wow, it takes a lot of cord to make a plant hanger.
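Just how much cord is "a lot"? A quick Python tally, assuming I'm reading my own notes right and it really is 16 strands at 18 feet apiece plus the two short pieces:

```python
# Lengths in feet, taken from the cut list above.
main_strands = 16 * 18.0   # sixteen main strands, 18 feet each
wrap_strand = 6.5          # wraps the hanging loop
gather_strand = 3.0        # gathers the base of the loop

total_feet = main_strands + wrap_strand + gather_strand
print(f"{total_feet} feet, or about {total_feet / 3:.1f} yards")
```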

Hanging loop followed by four groups knotted as: 7″ of spiral knot, 4.5″ straight then single knot, and 10″ of square knot. Then the groups will be changed to form a diamond-shaped net that will hold our planter.

I got all of the cords cut, taped off the ends so they don’t fray, and am starting to make the hanging loop.

Since this is such a huge pile of strings, I grouped the strings that will be knotted together. Once they were grouped, I coiled each group up and used a bread tie to hold the coil. I’ve still got a big pile of strings, but only the four I am actively working on are eight feet of hanging strands.

PostgreSQL Wraparound

We had a Postgres server go into read-only mode, which provided a stressful opportunity to learn more nuances of Postgres internals. It appears this “read only mode” is something Postgres does to save it from itself. Transaction IDs are assigned to each row in the database, and the ID values are used to determine what transactions can see. For each transaction, Postgres increments the last transaction ID and assigns the incremented value to the current transaction. When a row is written, the transaction ID is stored in the row and used to determine whether a row is visible to a transaction.

Inserting a row will assign the last transaction ID to the xmin column. A transaction can see all rows where xmin is less than its transaction ID. Updating a row actually creates a new row — the old row then has an xmax value and the new row has the same number as its xmin — transactions with IDs newer than the xmax value will not see the row. Similarly, deleting a row updates the row’s xmax value — older transactions will still be able to see the row, but newer ones will not.
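To convince myself I understood the rule, I sketched it in Python. This is a toy model of the description above, not how Postgres actually implements visibility: it ignores commit status, snapshots, and a transaction seeing its own changes, and the RowVersion class and is_visible function are names I made up.

```python
from dataclasses import dataclass


@dataclass
class RowVersion:
    value: str
    xmin: int       # transaction that created this row version
    xmax: int = 0   # transaction that deleted or replaced it; 0 means still live


def is_visible(row, txid):
    """Simplified rule: created before us, and not yet removed as far as we can tell."""
    created_before = row.xmin < txid
    not_yet_removed = row.xmax == 0 or txid < row.xmax
    return created_before and not_yet_removed


# Transaction 100 inserts a row and transaction 200 updates it, which
# leaves an old version (xmax=200) and a new version (xmin=200).
old = RowVersion("first draft", xmin=100, xmax=200)
new = RowVersion("second draft", xmin=200)

for txid in (150, 250):
    visible = [r.value for r in (old, new) if is_visible(r, txid)]
    print(txid, visible)
```

Transaction 150 only sees the old version and transaction 250 only sees the new one, which matches the update behavior described above.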

You can even view the xmax and xmin values by specifically asking for them in a select statement: select *, xmin, xmax from TableName;

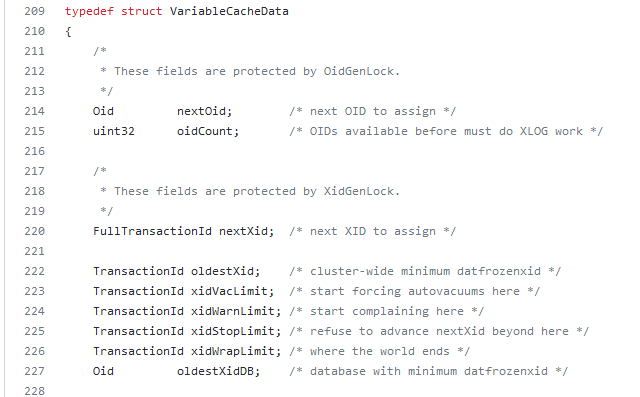

The transaction ID is stored in a 32-bit number, making the possible values 0 through 4,294,967,295. That can become a problem for an I/O-heavy or long-running database (i.e. even if I only get a couple of records an hour, that adds up over years of service) because … what happens when we get to 4,294,967,295 and need to write another record? To combat this, Postgres does something that reminds me of the “doomsday” Mayan calendar: this number range isn’t laid out on a straight line where one eventually runs into a wall. The numbers are arranged in a circle, so there’s always a new cycle and numbers are issued all over again. In the Postgres source, the wrap limit is “where the world ends”! But, like the Mayan calendar … this isn’t actually the end as much as it’s a new beginning.

How do you know if transaction 5 is ‘old’ or ‘new’ if the number can be reissued? The database considers half of the IDs to be in the past and half to be reserved for future use. When transaction ID four billion is issued, ID number 5 is considered part of the “future”; but when the current transaction ID is one billion, ID number 5 is considered part of the “past”. Which could be problematic if one of the first records in the database has never been updated but is still perfectly legitimate. Reserving in-use transaction IDs would make the re-issuing of transaction IDs more resource intensive (not just assign ++xid to this transaction, but xid++; is xid assigned {if so, xid++ and check again until the answer is no}; assign xid to this transaction). Instead of implementing more complex logic, rows can be “frozen”, which is a special flag that basically says “I am a row from the past and ignore my transaction ID number”. In versions 9.4 and later, both the committed and aborted hint bits are set to freeze a row; earlier versions replaced the row’s xmin with the special FrozenTransactionId value.
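The past/future split is easier to see with numbers. Below is a Python sketch of comparing two IDs on a 32-bit circle: take the difference modulo 2^32 and treat anything in the upper half as "negative". As far as I can tell this mirrors the signed-difference comparison Postgres uses for normal transaction IDs, but the function name is mine and the sketch ignores the handful of reserved special IDs.

```python
XID_SPACE = 2**32   # transaction IDs are 32-bit
HALF = 2**31        # half the circle counts as past, half as future


def xid_precedes(a, b):
    """True if ID a is in the past relative to ID b on the 32-bit circle."""
    diff = (a - b) % XID_SPACE
    # An unsigned difference in the upper half is a negative signed 32-bit value.
    return diff >= HALF


print(xid_precedes(5, 1_000_000_000))   # current ID is one billion
print(xid_precedes(5, 4_000_000_000))   # current ID is four billion
```

With the current ID at one billion, ID 5 is in the past; with the current ID at four billion, ID 5 is not in the past but in the "future" half of the circle.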

There is a minimum age for freezing a row — it generally doesn’t make sense to mark a row that’s existed for eight seconds as frozen. This is configured in the database as the vacuum_freeze_min_age. But it’s also not good to let rows sit around without being frozen for too long — the database could wrap around to the point where the transaction ID is reissued and the row would be lost (well, it’s still there but no one can see it). Since vacuuming doesn’t look through every page of the database on every cycle, there is a vacuum_freeze_table_age which defines the age of a transaction where vacuum will look through an entire table to freeze rows instead of relying on the visibility map. This combination, hopefully, balances the I/O of freezing rows with full scans that effectively freeze rows.

What I believe led to our outage — most of our data is time-series data. It is written, never modified, and eventually deleted. Auto-vacuum will skip tables that don’t need vacuuming. In our case, that’s most of the tables. The autovacuum_freeze_max_age parameter sets an ‘age’ at which vacuuming is forced. If these special vacuum processes don’t complete fully … you eventually get into a state where the server stops accepting writes in order to avoid potential data loss.

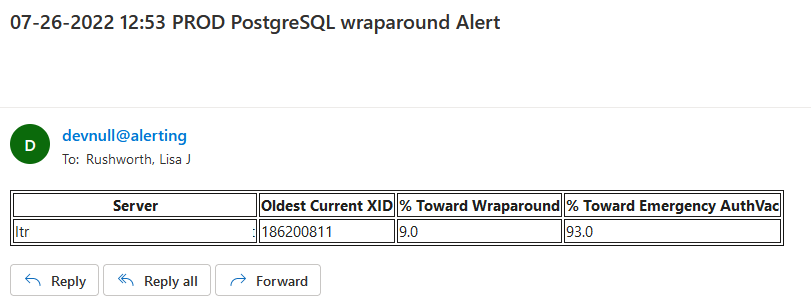

So monitoring for transaction IDs approaching the wraparound and emergency vacuum values is important. I set up a task that alerts us when we approach wraparound (fortunately, we’ve not gotten there again) as well as when we approach the emergency auto-vacuum threshold — a state which we reach a few times a week.

Using the following query, we monitor how close each of our databases is to both the auto-vacuum threshold and the ‘end of the world’ wrap-around point.

WITH max_age AS (
    SELECT 2000000000 AS max_old_xid
         , setting AS autovacuum_freeze_max_age
    FROM pg_catalog.pg_settings
    WHERE name = 'autovacuum_freeze_max_age'
)
, per_database_stats AS (
    SELECT datname
         , m.max_old_xid::int
         , m.autovacuum_freeze_max_age::int
         , age(d.datfrozenxid) AS oldest_current_xid
    FROM pg_catalog.pg_database d
    JOIN max_age m ON (true)
    WHERE d.datallowconn
)
SELECT max(oldest_current_xid) AS oldest_current_xid
     , max(ROUND(100*(oldest_current_xid/max_old_xid::float))) AS percent_towards_wraparound
     , max(ROUND(100*(oldest_current_xid/autovacuum_freeze_max_age::float))) AS percent_towards_emergency_autovac
FROM per_database_stats;

If we are approaching either point, e-mail alerts are sent.
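The alerting side is just a couple of percentages and a threshold. Here is a Python sketch of that logic under stated assumptions: the 80 percent alert level and the function name are hypothetical (pick whatever gives you enough warning), and 200 million is used in the example only because it is the stock autovacuum_freeze_max_age default.

```python
MAX_OLD_XID = 2_000_000_000  # same constant the monitoring query uses


def wraparound_report(oldest_current_xid, autovacuum_freeze_max_age, alert_percent=80):
    """Compute both percentages and flag whether an alert should go out."""
    pct_wrap = round(100 * oldest_current_xid / MAX_OLD_XID)
    pct_autovac = round(100 * oldest_current_xid / autovacuum_freeze_max_age)
    return {
        "percent_towards_wraparound": pct_wrap,
        "percent_towards_emergency_autovac": pct_autovac,
        # alert_percent is an assumed threshold, not something Postgres defines
        "alert": pct_wrap >= alert_percent or pct_autovac >= alert_percent,
    }


print(wraparound_report(160_000_000, 200_000_000))
```

In that example the database is only 8 percent of the way to wraparound but 80 percent of the way to the emergency auto-vacuum point, so the alert fires.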

When a database approaches the emergency auto-vacuum threshold, we freeze data manually: vacuumdb --all --freeze --jobs=1 --echo --verbose --analyze (or --jobs=3 if I want the process to hurry up and get done).

Pumpkin Butter

Ingredients

- 3 lbs pumpkin puree

- 1 cup maple sugar

- 1/4 tsp salt

- 2 Tbsp lemon juice

- 2 tsp ground cinnamon

- 1 tsp ground ginger

- 1/2 tsp ground cloves

- 1/4 tsp ground nutmeg

- 1/2 cup water

Method

- Add water to slow cooker.

- Place remaining ingredients into slow cooker and mix to combine.

- Cook on low for 5 hours.

- Can in glass jars and seal.

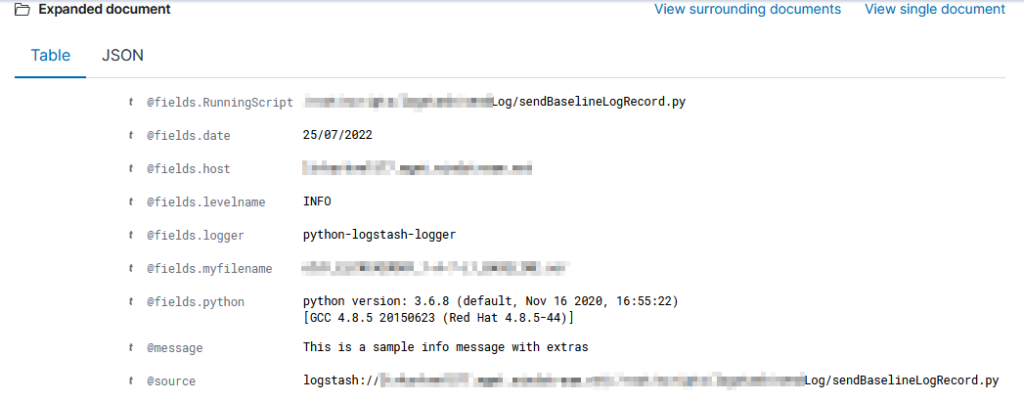

Logstash – Filtering data with Ruby

I’ve been working on forking log data into two different indices based on an element contained within the record: if the filename being sent includes the string “BASELINE”, then the data goes into the baseline index; otherwise it goes into the scan index. The data being ingested has the file name in “@fields.myfilename”.

It took a while to figure out how to get the value from the current data: use event.get('[@fields][myfilename]') to get the @fields.myfilename value.

The following logstash config accepts JSON inputs, parses the underscore-delimited filename into fields, replaces the dashes with underscores as KQL doesn’t handle dashes and wildcards in searches, and adds a flag to any record that should be a baseline. In the output section, that flag is then used to publish data to the appropriate index.

input {
  tcp {
    port => 5055
    codec => json
  }
}
filter {
  # Sample file name: scan_ABCDMIIWO0Y_1-A-5-L2_BASELINE.json
  ruby { code => "
    strfilename = event.get('[@fields][myfilename]')
    # Skip events that arrive without a file name
    unless strfilename.nil?
      # Underscore-delimited: prefix, hostname, direction, optional BASELINE marker
      arrayfilebreakout = strfilename.split('_')
      event.set('hostname', arrayfilebreakout[1])
      event.set('direction', arrayfilebreakout[2])
      # Swap dashes for underscores so the value is searchable
      event.set('parseablehost', strfilename.gsub('-','_'))
      # Flag baseline files so the output section can route them
      if strfilename.downcase =~ /baseline/
        event.set('baseline', 1)
      end
    end" }
}
output {
  if [baseline] == 1 {
    elasticsearch {
      action => "index"
      hosts => ["https://elastic.example.com:9200"]
      ssl => true
      cacert => ["/path/to/logstash/config/certs/My_Chain.pem"]
      ssl_certificate_verification => true
      # Credentials go here
      index => "ljr-baselines"
    }
  } else {
    elasticsearch {
      action => "index"
      hosts => ["https://elastic.example.com:9200"]
      ssl => true
      cacert => ["/path/to/logstash/config/certs/My_Chain.pem"]
      ssl_certificate_verification => true
      # Credentials go here
      index => "ljr-scans-%{+YYYY.MM.dd}"
    }
  }
}
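For testing the parsing without spinning up Logstash, here is a rough Python equivalent of what the ruby filter and the output conditional do. The function names and the example date are mine, and this is only a sketch of the logic, not something Logstash runs.

```python
from datetime import date


def parse_filename(filename):
    """Mirror the ruby filter: split on underscores and flag baseline files."""
    parts = filename.split("_")
    fields = {
        "hostname": parts[1] if len(parts) > 1 else None,
        "direction": parts[2] if len(parts) > 2 else None,
        "parseablehost": filename.replace("-", "_"),
    }
    if "baseline" in filename.lower():
        fields["baseline"] = 1
    return fields


def target_index(fields, event_date):
    """Mirror the output conditional: baselines share one index, scans get a daily one."""
    if fields.get("baseline") == 1:
        return "ljr-baselines"
    return f"ljr-scans-{event_date:%Y.%m.%d}"


sample = parse_filename("scan_ABCDMIIWO0Y_1-A-5-L2_BASELINE.json")
print(sample)
print(target_index(sample, date(2024, 1, 31)))
print(target_index(parse_filename("scan_ABCDMIIWO0Y_1-A-5-L2.json"), date(2024, 1, 31)))
```

The sample file name from the config comment lands in ljr-baselines, and the same name without the BASELINE marker lands in a dated ljr-scans index.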