A little after 1 PM today, we inspected our beehive. Scott set up reminders for us to inspect the bees every Sunday afternoon for the first few weeks. I filled the second frame feeder with a gallon of 1:1 sugar water. I figured it would be quicker and easier to swap feeders than to refill the feeder outside.

We opened the hive. There aren’t as many bees on the top entrance board now that the hive has a larger entrance, but they did build some burr comb between the frames and the top board. Scott scraped off the burr comb, and Anya held onto it. There were a lot of bees across six of the frames, and they had started working on the two north-most frames, so they were ready for the second deep hive body.

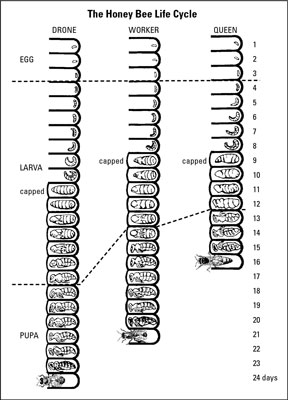

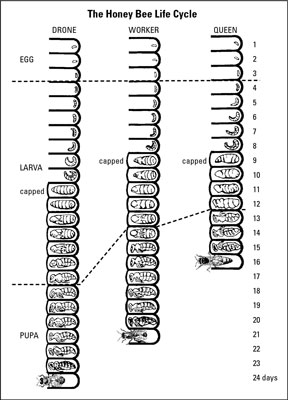

We removed one of the frames from the lower hive body and saw both capped brood and larvae on it. That’s great: we know the queen is laying! I found a cool picture of the bee lifecycle that made me think we wouldn’t see any capped brood yet (we released the queen 8 days ago … and “capped” shows up on day 9).

They are collecting pollen (we saw some on the bottom board) and nectar (there’s already capped honey, as well as cells with not-yet-finished honey). We placed that frame into the new deep to encourage the bees to move up into the new box. We removed the empty feeder, replaced it with the filled one, and added one new frame to the lower hive body so it has eight frames plus the feeder.

We then put the new deep on top of the hive. It has the one frame we pulled from the lower hive body plus nine clean, new frames. There’s a small gap between the two hive bodies that closes up when weight is put on top. We put the top entrance board on the deep, set the lid in place, and placed a large cinder block on top of it all.