Red Hat is phasing out ZFS. There are several reasons for this move, but the primary one is that ZFS is a closed-source Solaris (now Oracle) codebase. While OpenZFS exists, it is not quite the same. Red Hat’s preferred solution is Virtual Data Optimizer (VDO). This page walks through installing PostgreSQL, creating a database cluster on a VDO volume, and installing the TimescaleDB extension on that cluster, on Red Hat Enterprise Linux 8 (RHEL 8).

Before we can create a VDO volume, we need to install the VDO packages:

yum install vdo kmod-kvdo


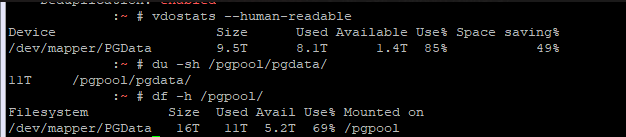

Next, create the VDO volume. Here a volume named ‘PGData’ is created on /dev/sdb, a 9 TB device that is presented as 16 TB of logical space:

vdo create --name=PGData --device=/dev/sdb --vdoLogicalSize=16T
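To make the sizing concrete: presenting 16 TB of logical space on a 9 TB device only pays off if compression and deduplication together save enough space. The sketch below shows the arithmetic. The helper name `vdo_ratio` is hypothetical, and the calculation ignores the space VDO reserves for its own metadata, so treat the result as a rough lower bound on the savings needed.

```shell
#!/bin/sh
# Print the logical:physical overcommit ratio to two decimal places.
# $1: logical size, $2: physical size (same unit for both, TB here).
# Hypothetical helper for illustration; not part of the vdo tooling.
vdo_ratio() {
    awk -v l="$1" -v p="$2" 'BEGIN { printf "%.2f\n", l / p }'
}

# Sizes used on this page: 16 TB logical on a 9 TB device.
vdo_ratio 16 9
```

In other words, the data has to shrink by roughly this factor on average before the logical space could be filled without exhausting the physical device.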


Verify that the device was created; in this instance it is /dev/mapper/PGData.

Now format the volume using xfs.

mkfs.xfs /dev/mapper/PGData


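Finally, create a mount point and add an /etc/fstab entry for the volume (the line shown after the cat command below), then reload systemd and mount it: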

mkdir /pgpool

cat /etc/fstab

/dev/mapper/PGData /pgpool xfs defaults,x-systemd.requires=vdo.service 0 0

systemctl daemon-reload

mount -a


The volume should now be mounted at ‘/pgpool/’.

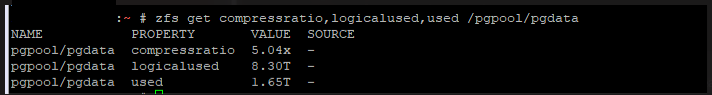
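The fstab step above can also be scripted so that running it twice does not add a duplicate entry. This is a minimal sketch, not part of the original procedure; the function name `add_fstab_entry` is hypothetical, and the file path is passed as a parameter so it can be tried against a copy of fstab first.

```shell
#!/bin/sh
# Append an fstab entry only if the exact line is not already present.
# $1: path to the fstab file, $2: the full entry line.
# Hypothetical helper for illustration.
add_fstab_entry() {
    fstab=$1
    entry=$2
    if grep -qxF -- "$entry" "$fstab"; then
        echo "entry already present in $fstab"
    else
        printf '%s\n' "$entry" >> "$fstab"
        echo "entry added to $fstab"
    fi
}

# Usage (as root), with the entry from this page:
# add_fstab_entry /etc/fstab \
#   '/dev/mapper/PGData /pgpool xfs defaults,x-systemd.requires=vdo.service 0 0'
```

The match is on the whole line (`-x`) as a fixed string (`-F`), so a commented-out or slightly different entry does not count as already present.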

The main reason for using VDO with Postgres is its compression feature. Compression is enabled automatically, although we may need to tweak settings as we test it.

We now have a place for our Postgres database to store its data, so let’s go ahead and install PostgreSQL. Here we are using RHEL 8 and installing PostgreSQL 12:

dnf install -y https://download.postgresql.org/pub/repos/yum/reporpms/EL-8-x86_64/pgdg-redhat-repo-latest.noarch.rpm

dnf clean all

dnf -qy module disable postgresql

dnf install -y postgresql12-server


Once the installation is done, we need to initialize the database cluster and start the server. Since we want Postgres to store its data on our VDO volume, we need to initialize the cluster in a custom directory. There are several ways to do that.

In all cases we need to make sure that the data directory on our VDO volume, i.e. ‘/pgpool/pgdata/’, is owned by the ‘postgres’ user, which is created when PostgreSQL is installed. Run the commands below before starting the Postgres server:

mkdir /pgpool/pgdata

chown -R postgres:postgres /pgpool


Customize the systemd service by editing the postgresql-12 unit file and updating the PGDATA environment variable:

vdotest-uos:pgpool

Environment=PGDATA=/pgpool/pgdata
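Editing the packaged unit file directly works, but a package update can overwrite the change. As an alternative that is not part of the original steps, the same PGDATA override can be placed in a systemd drop-in file. The sketch below assumes the standard drop-in layout (`<unit>.service.d/override.conf`, the file name `systemctl edit` uses); the function name `write_pgdata_override` is hypothetical.

```shell
#!/bin/sh
# Write a systemd drop-in that overrides PGDATA for a PostgreSQL unit.
# $1: systemd configuration root (normally /etc/systemd/system)
# $2: unit name without the .service suffix
# $3: data directory
# Hypothetical helper for illustration.
write_pgdata_override() {
    dropin_dir="$1/$2.service.d"
    mkdir -p "$dropin_dir"
    printf '[Service]\nEnvironment=PGDATA=%s\n' "$3" > "$dropin_dir/override.conf"
}

# Usage (as root), followed by a reload so systemd picks up the change:
# write_pgdata_override /etc/systemd/system postgresql-12 /pgpool/pgdata
# systemctl daemon-reload
```

Whichever approach is used, `systemctl show -p Environment postgresql-12` is a quick way to confirm which PGDATA value the service will actually see.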


Then initialize the cluster, and enable and start the server:

/usr/pgsql-12/bin/postgresql-12-setup initdb

systemctl enable postgresql-12

systemctl start postgresql-12


Here ‘/usr/pgsql-12/bin/’ is the bin directory of the Postgres installation; substitute your own bin directory path if it differs.

Alternatively, we can pass the data directory directly when initializing the cluster with the command below. Note that initdb must be run as the ‘postgres’ user, since it refuses to run as root:

/usr/pgsql-12/bin/initdb -D /pgpool/pgdata/


Then start the server:

systemctl start postgresql-12


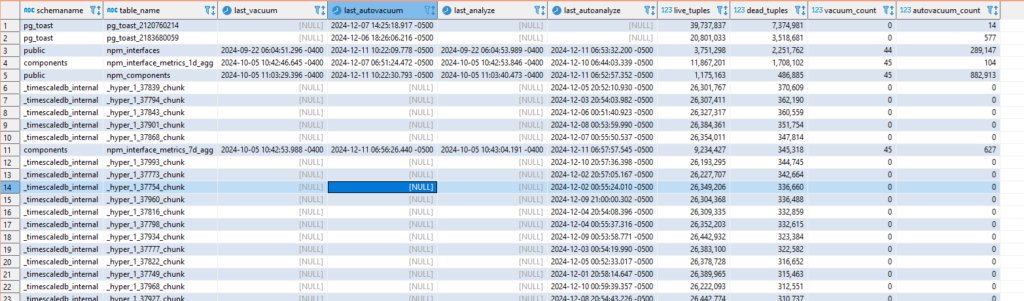

Now that PostgreSQL is installed and the server is running, we can install the Timescale extension for Postgres.

Add the Timescale repository with the command below:

tee /etc/yum.repos.d/timescale_timescaledb.repo <<EOL

[timescale_timescaledb]

name=timescale_timescaledb

baseurl=https://packagecloud.io/timescale/timescaledb/el/8/\$basearch

repo_gpgcheck=1

gpgcheck=0

enabled=1

gpgkey=https://packagecloud.io/timescale/timescaledb/gpgkey

sslverify=1

sslcacert=/etc/pki/tls/certs/ca-bundle.crt

metadata_expire=300

EOL

sudo yum update -y


Then install TimescaleDB with the command below:

yum install -y timescaledb-postgresql-12


After installing, we need to add ‘timescaledb’ to shared_preload_libraries in postgresql.conf. Timescale provides ‘timescaledb-tune’, which can do this and also tune other settings for our database. Since we initialized our PG database cluster in a custom location, we need to give timescaledb-tune the path to postgresql.conf; it also requires the path to our pg_config binary. We can supply both with the following command:

timescaledb-tune --pg-config=/usr/pgsql-12/bin/pg_config --conf-path=/pgpool/pgdata/postgresql.conf
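Before restarting, it can be worth confirming that the preload setting actually ended up in the configuration file. The sketch below is one way to check; the function name `has_timescaledb_preload` is hypothetical, and it only looks for an uncommented shared_preload_libraries line that mentions timescaledb, without validating the rest of the file.

```shell
#!/bin/sh
# Succeed if the given postgresql.conf has an active (uncommented)
# shared_preload_libraries line that mentions timescaledb.
# $1: path to postgresql.conf
# Hypothetical helper for illustration.
has_timescaledb_preload() {
    grep -Eq "^[[:space:]]*shared_preload_libraries[[:space:]]*=.*timescaledb" "$1"
}

# Usage, with the configuration path from this page:
# has_timescaledb_preload /pgpool/pgdata/postgresql.conf && echo "preload configured"
```

If the check fails, the CREATE EXTENSION step later on is likely to fail as well, because the library has to be preloaded when the server starts.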


After running timescaledb-tune, restart the Postgres server:

systemctl restart postgresql-12


After restarting, connect to the database in which you want to use Timescale hypertables and run the statement below to load the Timescale extension:

CREATE EXTENSION IF NOT EXISTS timescaledb CASCADE;


You can check whether Timescale is loaded by running the ‘\dx’ command in psql, which lists the installed extensions.

To configure PostgreSQL to allow remote connections, we need to make a couple of changes, as described below.