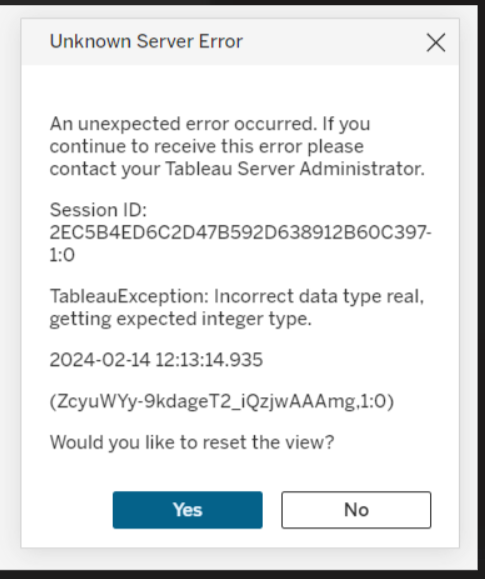

I started upgrading our Tableau servers to 2023.3 this week. Several dashboards no longer rendered after the upgrade — throwing an error “TableauException: Incorrect data type real, getting expected integer type.” … resetting or not resetting the view did not help.

This is evidently a known issue (although the documentation prior to my reporting the issue seemed to go out of its way to say it is just the cloud platform being impacted)

https://issues.salesforce.com/issue/a028c00000iwtkXAAQ/~

https://kb.tableau.com/articles/Issue/error-incorrect-data-type-real-getting-expected-integer-type-occurs-intermittently-during-view-rendering

Both the server and desktop client are impacted — and, unlike their documentation that says it is intermittent? Not all workbooks are impacted, but the ones that are? A broken workbook is broken and will not render for anyone, anywhere, any time.

There is a workaround:

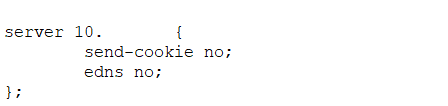

Server:

tsm configuration set -k features.EnableLogicalQueryBatchProcessor -v false

tsm pending-changes apply

Desktop:

“\Program Files\Tableau\Tableau 2023.3\bin\tableau.exe” -DOverride=EnableLogicalQueryBatchProcessor:off