I have no idea why I am so pleased with this simple HTML code, but I am! My current project is to move all of our Tableau servers to different servers running a newer version of Windows. When I first got involved with the project, it seemed rather difficult (there was talk of manually recreating all of the permissions on each item!!) … but some review of the vendor's documentation led me to believe one could build a same-version server elsewhere (newer Windows, out in magic cloudy land, but the same Tableau version), back up the data from the old server, restore it to the new one, and be done. It's not quite that simple: I had to clear out the SAML config & manually reconfigure it so the right elements get added into the Java keystore, access to the local PostgreSQL database needed to be manually configured, a whole bunch of database drivers needed to be installed, and the Windows registry of ODBC connections needed to be exported/imported. But the whole process was a lot easier than what I was first presented with.

Upgrading the first production server was mostly seamless, except users appear to have had the server's actual name. Instead of accessing https://tableau.example.com, they were typing abcwxy129.example.com. And passing that link around as "the link" to their dashboard. And, upon stopping the Tableau services on the old server … those links started to fail. Now, I could have just CNAMEd abcwxy129 over to tableau and left it at that. But letting users continue to do the wrong thing always seems to come back to haunt you (if nothing else, the OS folks own the namespace of servers & are allowed to re-use or delete those hostnames at will). So I wanted something that would take whatever https://abcwxy129.example.com/#/site/DepartMent/workbooks/3851/Views kind of URL a user provided and give them the right address. And, since this was Windows, to do so with IIS without the hassle of integrating PHP or building a C# project. Basically, I wanted to do it within basic HTML. Which meant JavaScript.

And I did it, using such a basic IIS installation that the file is named something like iisstart.htm so I didn't have to change the default page name. I also redirected 404 errors to / so any path under the old server's name will return the redirection landing page.

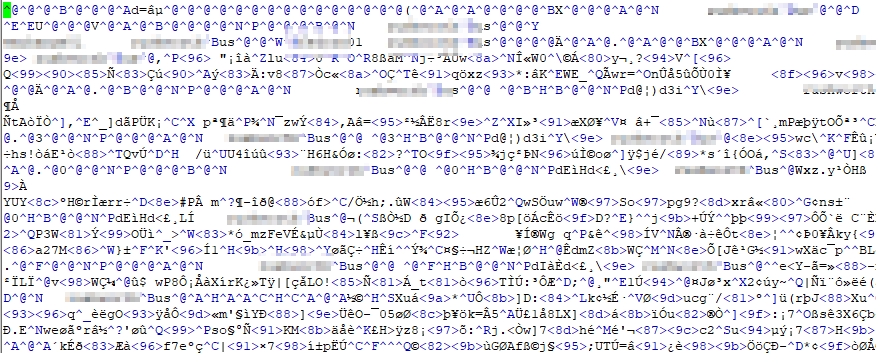

<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Strict//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-strict.dtd">
<html xmlns="http://www.w3.org/1999/xhtml">
<head>
<meta http-equiv="Content-Type" content="text/html; charset=iso-8859-1" />
<title>This Tableau server has moved</title>
<style type="text/css">
<!--
body {
  color:#000000;
  background-color:#eeeeee;
  margin:0;
}
-->
</style>
</head>
<body>
<div style="margin-left:40px">
<h2>The Tableau server has moved.</h2>
<p>The location you accessed, <span style="white-space: nowrap" id="oldurl"></span>, is no longer available.<br /><br /> Please update your link to use <span style="white-space: nowrap" id="newurl"></span></p>
</div>
<script type="text/javascript">
  // window.location.href includes the #/site/... part of the address, which is never sent to the web server
  let strOldURL = window.location.href;
  // One pattern covers both old names, so "otherhostname" is matched as a whole instead of
  // having its "hostname" tail rewritten on its own; the dots are escaped to match a literal "."
  let strNewURL = strOldURL.replace(/(?:otherhostname|hostname)\.example\.com/i, "tableau.example.com");
  // textContent displays the old address as plain text rather than parsing it as markup
  document.getElementById("oldurl").textContent = strOldURL;
  // Build the link as an element so the new address never has to be spliced into an HTML string
  let newLink = document.createElement("a");
  newLink.href = strNewURL;
  newLink.textContent = strNewURL;
  document.getElementById("newurl").appendChild(newLink);
</script>
</body>
</html>
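The hostname swap could also be done with the browser's built-in URL parser instead of a regular expression, which might be easier to maintain as more old server names turn up. What follows is only a sketch under a few assumptions: `rewriteHost`, `OLD_HOSTS`, and `NEW_HOST` are hypothetical names that are not part of the page above, and the two old hostnames are the same placeholders the page uses.

```javascript
// Hypothetical helper: these names and the host list are illustrative only.
const OLD_HOSTS = ["hostname.example.com", "otherhostname.example.com"];
const NEW_HOST = "tableau.example.com";

function rewriteHost(href, oldHosts = OLD_HOSTS, newHost = NEW_HOST) {
  const url = new URL(href);
  // The URL parser lowercases the hostname, so an exact comparison is enough
  // and "otherhostname" can never be confused with "hostname".
  if (oldHosts.includes(url.hostname)) {
    url.hostname = newHost; // path, query string, port, and #fragment are left alone
  }
  return url.href;
}

console.log(rewriteHost("https://otherhostname.example.com/#/site/DepartMent/workbooks/3851/Views"));
```

In the landing page this would be called as `rewriteHost(window.location.href)`. Comparing whole hostnames means an address on an unlisted name passes through unchanged, so each old name has to be added to the list explicitly.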