The quick way is to read the MANIFEST.MF file — look for the Main-Class and Start-Class lines. You can also run “jar tf path/to/my.jar” to list out the class files.
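If you would rather script the manifest check, here is a minimal Python sketch. It assumes the jar is an ordinary zip archive with a META-INF/MANIFEST.MF entry; the function name `read_manifest_entries` is my own, not part of any Java tooling.

```python
import zipfile


def read_manifest_entries(jar_path):
    """Return the main-section attributes of a jar's MANIFEST.MF as a dict."""
    with zipfile.ZipFile(jar_path) as jar:
        text = jar.read("META-INF/MANIFEST.MF").decode("utf-8")

    entries = {}
    last_key = None
    for line in text.splitlines():
        if not line.strip():
            break  # a blank line ends the main section of the manifest
        if line.startswith(" ") and last_key is not None:
            # Long manifest values wrap onto continuation lines that start with one space
            entries[last_key] += line[1:]
        elif ": " in line:
            last_key, value = line.split(": ", 1)
            entries[last_key] = value
    return entries
```

Calling `read_manifest_entries("path/to/my.jar").get("Start-Class")` returns the Start-Class value, or None when the jar does not declare one.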


Maven Build Certificate Error

Attempting to build some Java code, I got a lot of errors indicating a trusted certificate chain was not available:

Could not transfer artifact org.springframework.boot:spring-boot-starter-parent:pom:2.2.0.RELEASE from/to repo.spring.io (<redacted>): sun.security.validator.ValidatorException: PKIX path building failed: sun.security.provider.certpath.SunCertPathBuilderException: unable to find valid certification path to requested target

And

[ERROR] Failed to execute goal on project errorhandler: Could not resolve dependencies for project com.example.npm:errorhandler:jar:0.0.1-SNAPSHOT: The following artifacts could not be resolved: org.springframework.boot:spring-boot-starter-data-jpa:jar:2.3.7.BUILD-SNAPSHOT, org.springframework.boot:spring-boot:jar:2.3.7.BUILD-SNAPSHOT, org.springframework.boot:spring-boot-configuration-processor:jar:2.3.7.BUILD-SNAPSHOT: Could not transfer artifact org.springframework.boot:spring-boot-starter-data-jpa:jar:2.3.7.BUILD-20201211.052207-37 from/to spring-snapshots (https://repo.spring.io/snapshot): transfer failed for https://repo.spring.io/snapshot/org/springframework/boot/spring-boot-starter-data-jpa/2.3.7.BUILD-SNAPSHOT/spring-boot-starter-data-jpa-2.3.7.BUILD-20201211.052207-37.jar: Certificate for <repo.spring.io> doesn't match any of the subject alternative names: [] -> [Help 1]

Ideally, you would just add whatever certs need to be trusted to the cacerts file for the Java instance using keytool (.\keytool.exe -import -alias digicert-intermed -cacerts -file c:\tmp\digi-int.cer). However, the work computers are locked down such that I am unable to import certs into the Java trust store. The second error makes me think it wouldn’t work anyway: if there’s no matching SAN on the cert, trusting the cert wouldn’t do anything.

Fortunately, there are a few flags you can add to mvn to ignore certificate errors — thus allowing the build to complete without requiring access to the cacerts file. There is, of course, a possibility that the trust failure is because your connection is being redirected maliciously … but I see enough other people getting trust failures for this spring-boot stuff (and visiting the site doesn’t show anything suspect) that I’m happy to bypass the security validation this once and just be done with the build 🙂

mvn package -DskipTests -Dmaven.wagon.http.ssl.insecure=true -Dmaven.wagon.http.ssl.allowall=true -Dmaven.wagon.http.ssl.ignore.validity.dates=true jib:build

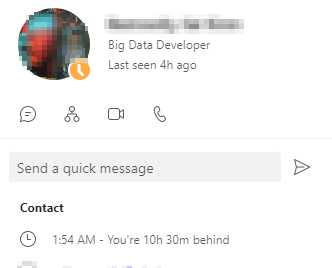

Did you know … Teams shows timezone offsets for individuals

Teams now shows the timezone offset and local time for individuals — because it’s always 2AM somewhere!

The contact card that comes up when you click on a user in Microsoft Teams now includes the current local time and time zone offset information for the individual — very useful to avoid ringing someone up at 2AM.

PostgreSQL Matching Functions

Queries using POSIX regex

-- Case insensitive match
SELECT * FROM mytable WHERE columnName ~* 'this|that';

-- Case sensitive match
SELECT * FROM mytable WHERE columnName ~ 'this|that';

Queries Using ANY

SELECT * FROM mytable WHERE columnName like any (array['%this%', '%that%']);

Queries Using SIMILAR TO

-- This is translated to a regex query internally, so not effectively different than constructing the regex query yourself
SELECT * FROM mytable WHERE columnName SIMILAR TO '%(this|that)%';
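To see how these forms differ on the same data, here is a rough Python emulation. It is only a sketch: Python's `re` module is not the PostgreSQL regex engine, the sample rows are made up, and the helper names are hypothetical. For a simple alternation like 'this|that' the behavior lines up well enough to show that `~*` ignores case, while `~` and LIKE ANY do not.

```python
import re

rows = ["This one", "that one", "THAT ONE", "neither"]


def regex_match(value, pattern, case_insensitive=False):
    """Rough stand-in for the ~ and ~* operators: the pattern may match anywhere."""
    flags = re.IGNORECASE if case_insensitive else 0
    return re.search(pattern, value, flags) is not None


def like_to_regex(like_pattern):
    """Translate a LIKE pattern (% and _ wildcards, no escape handling) to a regex."""
    parts = []
    for ch in like_pattern:
        if ch == "%":
            parts.append(".*")
        elif ch == "_":
            parts.append(".")
        else:
            parts.append(re.escape(ch))
    return "".join(parts)


def like_any(value, like_patterns):
    """Rough stand-in for LIKE ANY (ARRAY[...]): each pattern must match the whole value."""
    return any(re.fullmatch(like_to_regex(p), value, re.DOTALL) for p in like_patterns)


case_insensitive = [r for r in rows if regex_match(r, "this|that", case_insensitive=True)]
case_sensitive = [r for r in rows if regex_match(r, "this|that")]
via_like_any = [r for r in rows if like_any(r, ["%this%", "%that%"])]

print(case_insensitive)  # ['This one', 'that one', 'THAT ONE']
print(case_sensitive)    # ['that one']
print(via_like_any)      # ['that one']
```

The LIKE patterns need the leading and trailing % because LIKE matches the whole value, whereas the regex operators match anywhere in it.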

Postgresql Through an SSH Tunnel in Python

Our production Postgresql servers have a fairly restrictive IP access control list — which means you cannot VPN in and query the server. We’ve been using DBeaver with an SSH tunnel to connect, but it’s a bit time consuming to run a query across all of the servers for monitoring and troubleshooting. To work around the restriction, I built a python script that uses an SSH tunnel to relay communications to the Postgresql servers.

import psycopg2
from sshtunnel import SSHTunnelForwarder

from config import strSSHRelayHost, iSSHRelayPort, strSSHRelayUser, strSSHAuthKeyFile, dictHost
# In the config.py, dictHost should contain the following information
# dictHost = {"host":"dbserver.example.com","port":5432,"database": "dbname", "username":"dbuser", "password":"S3cr3tPhr@5e"}

# Example query -- listing out locks
# Note: '\\s+' in this Python string reaches Postgres as the regex \s+ (collapse runs of whitespace)
sqlQuery = "WITH RECURSIVE l AS ( SELECT pid, locktype, mode, granted, ROW(locktype,database,relation,page,tuple,virtualxid,transactionid,classid,objid,objsubid) obj FROM pg_locks ), pairs AS ( SELECT w.pid waiter, l.pid locker, l.obj, l.mode FROM l w JOIN l ON l.obj IS NOT DISTINCT FROM w.obj AND l.locktype=w.locktype AND NOT l.pid=w.pid AND l.granted WHERE NOT w.granted ), tree AS ( SELECT l.locker pid, l.locker root, NULL::record obj, NULL AS mode, 0 lvl, locker::text path, array_agg(l.locker) OVER () all_pids FROM ( SELECT DISTINCT locker FROM pairs l WHERE NOT EXISTS (SELECT 1 FROM pairs WHERE waiter=l.locker) ) l UNION ALL SELECT w.waiter pid, tree.root, w.obj, w.mode, tree.lvl+1, tree.path||'.'||w.waiter, all_pids || array_agg(w.waiter) OVER () FROM tree JOIN pairs w ON tree.pid=w.locker AND NOT w.waiter = ANY ( all_pids )) SELECT (clock_timestamp() - a.xact_start)::interval(3) AS ts_age, replace(a.state, 'idle in transaction', 'idletx') state, (clock_timestamp() - state_change)::interval(3) AS change_age, a.datname,tree.pid,a.usename,a.client_addr,lvl, (SELECT count(*) FROM tree p WHERE p.path ~ ('^'||tree.path) AND NOT p.path=tree.path) blocked, repeat(' .', lvl)||' '||left(regexp_replace(query, '\\s+', ' ', 'g'),100) query FROM tree JOIN pg_stat_activity a USING (pid) ORDER BY path"

# Alternately, you can use password authentication
#with SSHTunnelForwarder( (strSSHRelayHost, iSSHRelayPort), ssh_username=strSSHRelayUser, ssh_password=strSSHRelayUserPass, local_bind_address=("localhost",55432), remote_bind_address=(dictHost.get('host'), dictHost.get('port'))) as server:
with SSHTunnelForwarder(
        (strSSHRelayHost, iSSHRelayPort),
        ssh_username=strSSHRelayUser,
        ssh_private_key=strSSHAuthKeyFile,
        local_bind_address=("localhost", 55432),
        remote_bind_address=(dictHost.get('host'), dictHost.get('port'))) as server:
    if server is not None:
        server.start()
        server._check_is_started()
        #print("Tunnel server connected")

        # Connect to the local end of the tunnel rather than to the database host directly
        params = {'database': dictHost.get('database'), 'user': dictHost.get('username'), 'password': dictHost.get('password'), 'host': server.local_bind_host, 'port': server.local_bind_port}
        conn = psycopg2.connect(**params)
        cursor = conn.cursor()
        cursor.execute(sqlQuery)

        # Print the column headers, then each result row
        column_names = [desc[0] for desc in cursor.description]
        print(column_names)
        rows = cursor.fetchall()
        for row in rows:
            print(row)

        cursor.close()
        if conn is not None:
            conn.close()
        server.stop()
    else:
        print("Unable to establish SSH tunnel")
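Since the point of the script was to run one query across all of the servers, here is a hedged sketch of how it could be extended to loop over a list of hosts. The function names and the idea of a `hosts` list (a list of dictHost-style dictionaries) are my assumptions, not part of the original script. It uses `ssh_pkey`, the current sshtunnel name for the key file argument, and binds to port 0 on 127.0.0.1 on the assumption that sshtunnel reports the OS-assigned port through `local_bind_port`.

```python
def build_conn_params(host_info, local_host, local_port):
    """psycopg2 connection parameters that point at the local end of the tunnel."""
    return {
        "database": host_info["database"],
        "user": host_info["username"],
        "password": host_info["password"],
        "host": local_host,
        "port": local_port,
    }


def run_query_on_hosts(hosts, sql, relay_host, relay_port, relay_user, key_file):
    """Run sql on each host through its own SSH tunnel; return {hostname: rows or error}."""
    # Imported here so build_conn_params can be used without the third-party packages
    import psycopg2
    from sshtunnel import SSHTunnelForwarder

    results = {}
    for host_info in hosts:
        name = host_info["host"]
        try:
            with SSHTunnelForwarder(
                (relay_host, relay_port),
                ssh_username=relay_user,
                ssh_pkey=key_file,
                # Assumption: port 0 lets the OS pick a free local port for each tunnel
                local_bind_address=("127.0.0.1", 0),
                remote_bind_address=(host_info["host"], host_info["port"]),
            ) as tunnel:
                params = build_conn_params(host_info, "127.0.0.1", tunnel.local_bind_port)
                conn = psycopg2.connect(**params)
                try:
                    with conn.cursor() as cursor:
                        cursor.execute(sql)
                        results[name] = cursor.fetchall()
                finally:
                    conn.close()
        except Exception as err:
            # Record the failure and keep going so one bad host doesn't stop the sweep
            results[name] = err
    return results
```

Opening one tunnel per host in sequence keeps the sketch simple; for a large fleet, running the hosts in parallel would be the next thing to try.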

Postgresql Replication Lag

Replication involves sending records from the master, receiving each record on the remote replica, writing it, flushing it to persistent storage, and finally replaying it. Replication lag occurs when a large amount of data hasn’t been fully replayed on the remote replica. Identifying where the lag occurs can help in rectifying the underlying problem.

select client_addr, usename, application_name, state, sync_state, (pg_wal_lsn_diff(pg_current_wal_lsn(),sent_lsn) / 1024)::bigint as PendingLag, (pg_wal_lsn_diff(sent_lsn,write_lsn) / 1024)::bigint as WriteLag, (pg_wal_lsn_diff(write_lsn,flush_lsn) / 1024)::bigint as FlushLag, (pg_wal_lsn_diff(flush_lsn,replay_lsn) / 1024)::bigint as ReplayLag, (pg_wal_lsn_diff(pg_current_wal_lsn(),replay_lsn))::bigint / 1024 as TotalLag FROM pg_stat_replication;

The same query, commented to explain what each column means (all values are in kilobytes):

select client_addr, usename, application_name, state, sync_state,
  (pg_wal_lsn_diff(pg_current_wal_lsn(),sent_lsn) / 1024)::bigint as PendingLag, -- The amount of WAL data that hasn't been sent ... check network stuff if lag persists
  (pg_wal_lsn_diff(sent_lsn,write_lsn) / 1024)::bigint as WriteLag, -- The amount of sent WAL data that hasn't been written on the replica ... check iostat stuff if lag persists
  (pg_wal_lsn_diff(write_lsn,flush_lsn) / 1024)::bigint as FlushLag, -- Similar to write lag, and often these two numbers are high in conjunction
  (pg_wal_lsn_diff(flush_lsn,replay_lsn) / 1024)::bigint as ReplayLag, -- The amount of log data that is waiting to be replayed ... check iostat stuff but could also be high CPU or memory utilization
  (pg_wal_lsn_diff(pg_current_wal_lsn(),replay_lsn))::bigint / 1024 as TotalLag -- Basically a sum of the previous values
FROM pg_stat_replication;

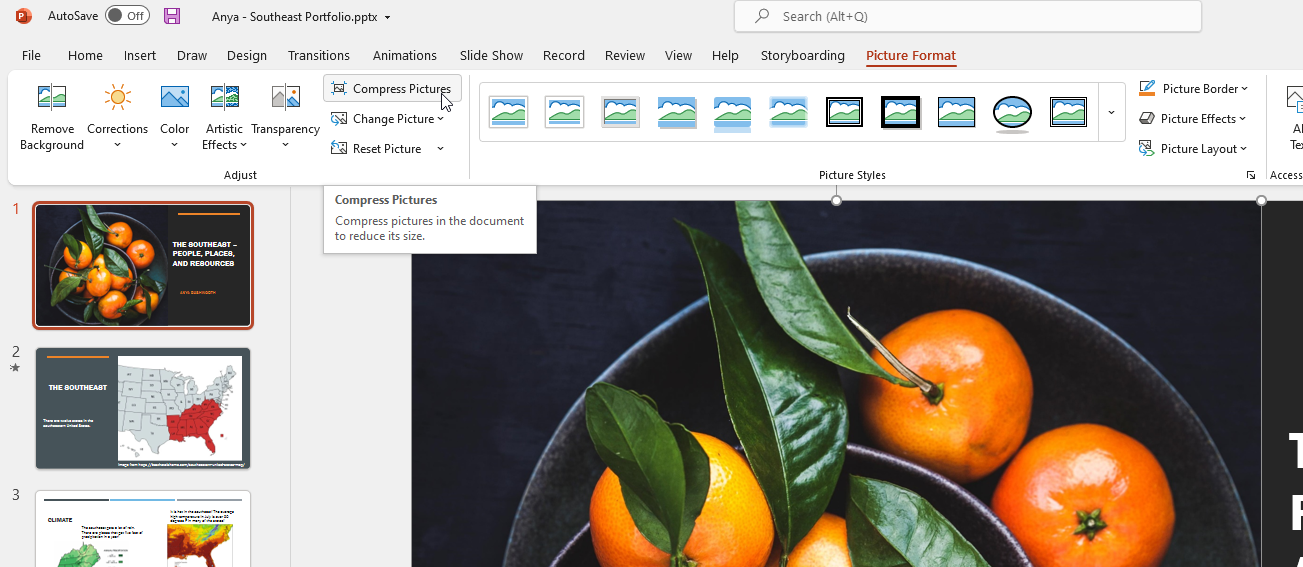
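If you are pulling these rows into a script, a small helper can point at the stage to investigate first. This is only a sketch: the function name and the 1024 KB threshold are arbitrary choices of mine, and the keys are lowercase because PostgreSQL folds unquoted column aliases such as PendingLag to lowercase.

```python
STAGE_HINTS = {
    "pendinglag": "WAL not yet sent: check the network",
    "writelag": "sent but not yet written on the replica: check disk I/O (iostat)",
    "flushlag": "written but not yet flushed: check disk I/O (iostat)",
    "replaylag": "flushed but not yet replayed: check disk I/O, CPU, and memory",
}


def worst_stage(row, threshold_kb=1024):
    """Return (stage, hint) for the largest lag in a result row, or None if all are small.

    row is a dict keyed by the lowercase column names from the lag query.
    threshold_kb is a hypothetical cutoff; tune it to your own environment.
    """
    stages = {name: row[name] for name in STAGE_HINTS}
    name = max(stages, key=stages.get)
    if stages[name] < threshold_kb:
        return None
    return name, STAGE_HINTS[name]
```

With psycopg2, a row dict can be built by zipping the names from `cursor.description` with each fetched row.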

Reducing the Size of a PowerPoint File

Anya’s school work submission platform limits files to ten meg. When she embedded a dozen 3D images in a single presentation and ended up with a seventy meg file, the fix was easy: drop each 3D object down to a PNG. But her most recent presentation was just photos from the web, and it was just over the ten meg limit. Fortunately (or unfortunately in this case), the more recent Office document formats are already compressed, so you cannot just zip up the file to shrink it. We learned a quick way to reduce the size of a PowerPoint presentation.

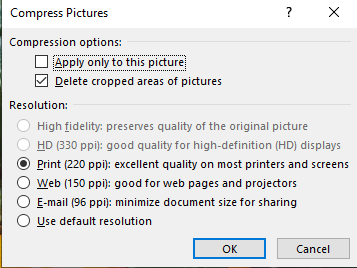

Select one of the pictures in the presentation. On the “Picture Format” tab, find the “Compress Pictures” button.

If you know there is one really high-resolution picture (or a single picture where you cropped out most of it), selecting just that picture and leaving “apply only to this picture” checked makes sense. But, generally, I apply the compression to all images. Select a resolution that’s reasonable – we’ve used “Print” and reduced an eleven meg file to just over four meg. Using “Web” as the resolution reduced the file to just over a meg.
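If you are not sure which picture is the big one, you can check, because a .pptx file is a zip archive with the pictures stored under ppt/media/. Here is a minimal Python sketch that lists the largest media files; the function name is hypothetical.

```python
import zipfile


def largest_media(pptx_path, top=5):
    """Return (name, stored_bytes, original_bytes) for the biggest files under ppt/media/."""
    with zipfile.ZipFile(pptx_path) as deck:
        media = [
            (info.filename, info.compress_size, info.file_size)
            for info in deck.infolist()
            if info.filename.startswith("ppt/media/")
        ]
    # Sort by the space each file actually takes up inside the presentation
    return sorted(media, key=lambda item: item[1], reverse=True)[:top]
```

The names returned (image1.png and so on) are internal names rather than slide numbers, so you may still need to flip through the slides to find the matching picture.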

Useful Bash Commands

Viewing Log Files

Tailing the File

When the same file name is reused as logs are rotated (e.g. app.log is renamed to app.yyyymmdd.log and a new app.log is created), use the -F flag to follow the name instead of the file descriptor.

tail -F /var/log/app.log

Tailing with Filtering

When you are looking for something specific in the log file, it often helps to run the log output through grep. This example watches a sendmail log for communication with the host 10.5.5.5

tail -F /var/log/maillog | grep "10.5.5.5"

Handling Log Files with Date Specific Naming

I alias out commands for viewing commonly read log files. This is easy enough when the current log file is always /var/log/application/content.log, but some active log files have date components in the file name. As an example, our Postgresql servers have the short day-of-week string in the log. Use command substitution to get the date-specific elements from the date executable. Here, I tail a file named postgresql-Tue.log on Tuesday. Since logs rotate to a new name, tail -F doesn’t really do anything. You’ll still need to ctrl-c the tail and restart it for the next day.

tail -f /pgdata/log/postgresql-$(date +%a).log
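The same file name can be built in Python if a script needs to find the current log. This is a small sketch with a hypothetical function name; like `date +%a`, `strftime('%a')` depends on the locale, so it assumes English day abbreviations.

```python
from datetime import date


def postgres_log_path(day=None, log_dir="/pgdata/log"):
    """Build the day-of-week log file name, e.g. postgresql-Tue.log on a Tuesday."""
    day = day or date.today()
    return f"{log_dir}/postgresql-{day.strftime('%a')}.log"


print(postgres_log_path(date(2020, 12, 15)))  # /pgdata/log/postgresql-Tue.log
```

A long-running script could call `postgres_log_path()` again after midnight to pick up the next day's file instead of being restarted.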

Typing Unicode Characters

Found an interesting way to enter Unicode characters in Windows (beyond finding it in charmap and then copy/pasting the character!). There’s the technique where you hold alt, hit the plus on your numeric keypad, enter the hex code for the character, then release the alt key. Since a lot of laptops don’t have numeric keypads … this approach isn’t always feasible.

But there’s another way — once you’ve typed the hex code and your cursor is immediately after the code, press Alt-x …

The code magics itself into a Unicode character. You can even put your cursor immediately after a Unicode character, press Alt-x, and the character will turn back into the hex code.

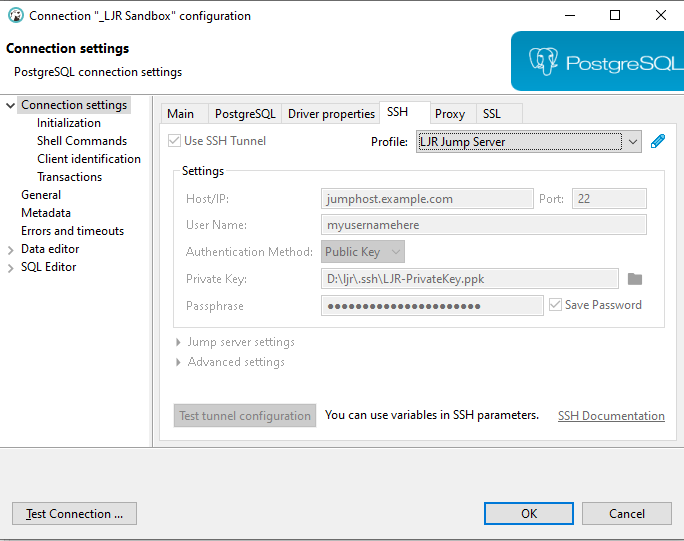
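The hex code is just the character's Unicode code point, so the same round trip is easy to reproduce in Python. This is a small illustration with hypothetical helper names, not a description of how Windows implements Alt-x.

```python
def hex_to_char(hex_code):
    """Turn a hex code point such as '263A' into its character."""
    return chr(int(hex_code, 16))


def char_to_hex(character):
    """Turn a single character back into its hex code point, padded to four digits."""
    return format(ord(character), "04X")


print(hex_to_char("263A"))  # ☺
print(char_to_hex("☺"))     # 263A
```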

Accessing Postgresql Server Through SSH Tunnel

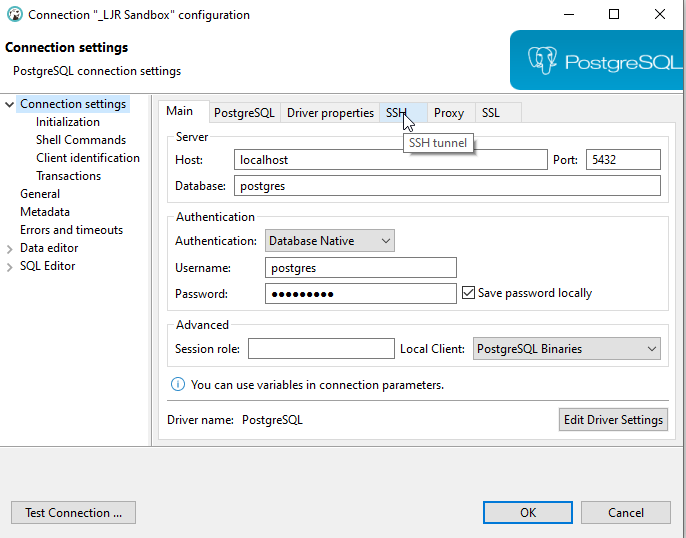

Development servers can be accessed directly, but access to our production Postgresql servers is restricted. To use a SQL client from the VPN network, you need to connect through an SSH tunnel.

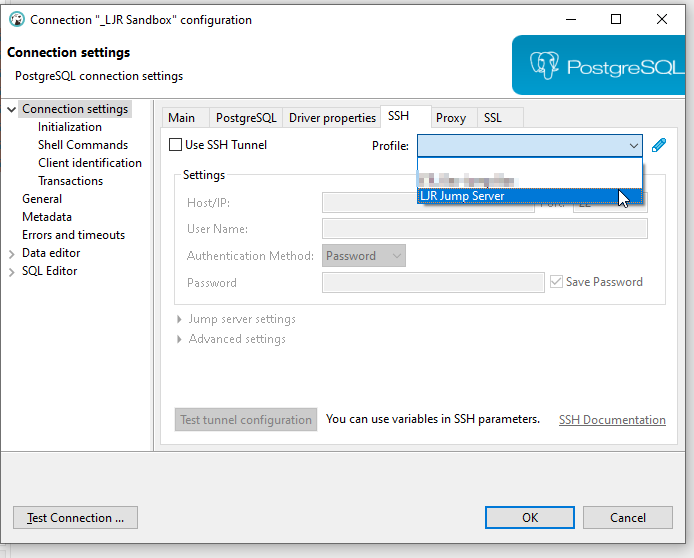

In the connection configuration for a database connection, click the “SSH” tab.

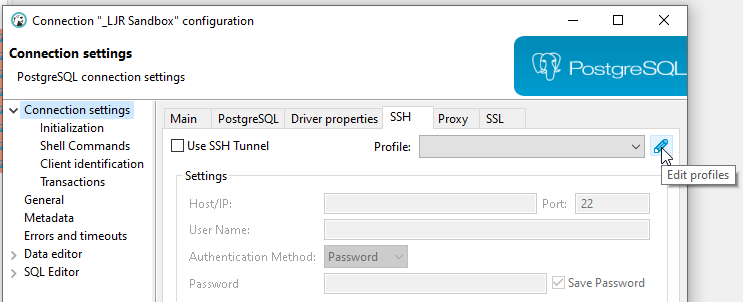

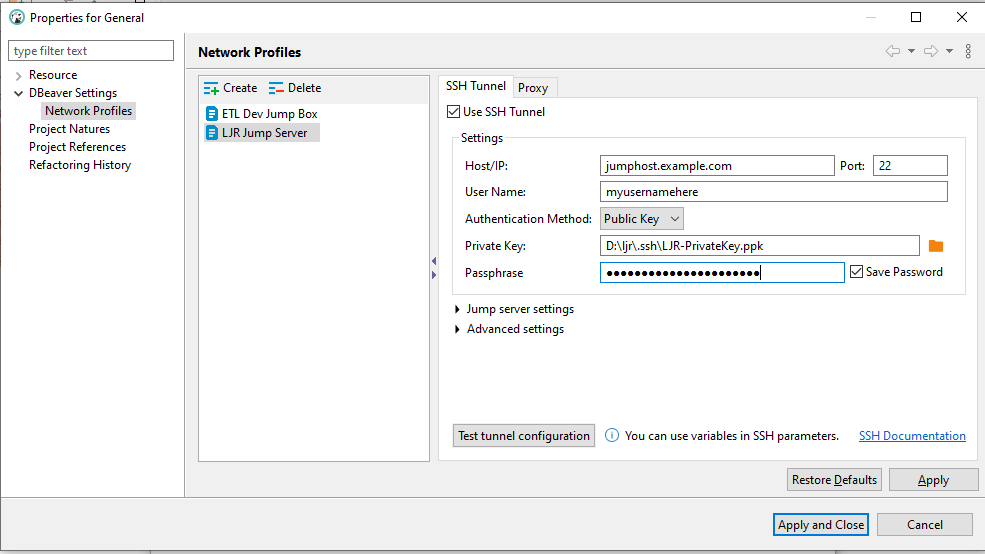

You can one-off configure an SSH tunnel for a connection, but the most efficient approach for setting up the SSH tunnel used to connect to all of our production databases is to create a tunnel profile. The profile lets you type connection info in one time and use it for multiple servers; and, when you need to update a setting, you only have one configuration set to update.

To create a profile, click the pencil to the right of the profile drop-down box.

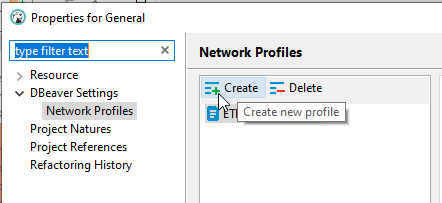

Under the “Network Profiles” section, click “Create” to create a new profile.

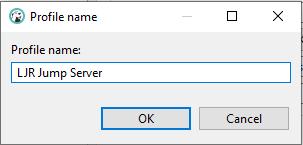

Give the profile a descriptive name and click “OK”.

Check the “Use SSH Tunnel” box and enter the hostname (one of the ETL dev boxes is a good choice in our case: ltrkarkvm553.mgmt.windstream.net). Supply the username for the connection. You can use password authentication or, if you have a key exchange set up for authentication, select public key authentication. Click “Apply and Close” to save the profile.

Your profile will appear in the profile drop-down – select the profile …

And all of the settings will pop in. They’re grayed out here; if you need to update the SSH tunnel profile settings, click the little pencil again.