I lost access to all of my Linux servers at work. And, unlike the usual report where nothing changed but xyz is now failing, I knew exactly what had happened: a new access request had been approved about ten minutes earlier. Looking at my ID, for some reason adding the new group membership had changed my account’s gid number to that new group. Except … that shouldn’t have actually dropped my access. If I needed a particular group to be my primary group, I should have been able to use newgrp to switch contexts. Instead, I got prompted for a group password (which, yes, is a thing. No, no one uses it).

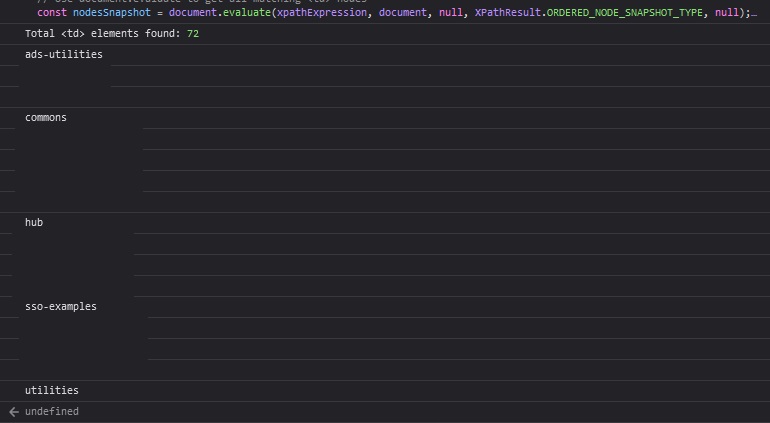

The hosts were set up to authenticate to AD using LDAP, and very successfully let me log in (or not, if I mistyped my password). They, however, would only see me as a member of my primary group. Well, today, I finally got a back door with sufficient access to poke around.

Turns out I was right: something was improperly configured, so group memberships were not being read from the directory but rather implied from the gid value. I added the configuration parameter ldap_schema to instruct the server to use member instead of memberUid for memberships. I used rfc2307bis as that’s the value I was familiar with. I expect “AD” could be used as well, but figured we were well beyond AD 2008r2 and didn’t really want to dig further into the nuanced differences between the two settings.
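For anyone wanting to check what a host is currently set to, here is a rough sketch in Python. It assumes the hosts use SSSD (ldap_schema is an sssd-ldap option) and that the file is ordinary INI. The domain name, URI, and the helper name `schema_by_domain` are all made up for illustration, and the sample text stands in for the real config file.

```python
import configparser

# Hypothetical sssd.conf contents; the domain name and URI are placeholders.
SAMPLE_CONF = """
[sssd]
domains = example.com

[domain/example.com]
id_provider = ldap
auth_provider = ldap
ldap_uri = ldaps://dc.example.com
ldap_schema = rfc2307bis
"""


def schema_by_domain(conf_text):
    """Return {section name: ldap_schema} for every [domain/...] section."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    result = {}
    for section in parser.sections():
        if section.startswith("domain/"):
            # sssd-ldap documents rfc2307 as the default when the option is unset.
            result[section] = parser.get(section, "ldap_schema", fallback="rfc2307")
    return result


print(schema_by_domain(SAMPLE_CONF))
```

A domain section with no ldap_schema line reports rfc2307 here, which for the LDAP provider means group members are looked up in memberUid.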

ldap_schema (string)

Specifies the Schema Type in use on the target LDAP server. Depending on the selected schema, the default attribute names retrieved from the servers may vary. The way that some attributes are handled may also differ.

Four schema types are currently supported:

- rfc2307

- rfc2307bis

- IPA

- AD

The main difference between these schema types is how group memberships are recorded in the server. With rfc2307, group members are listed by name in the memberUid attribute. With rfc2307bis and IPA, group members are listed by DN and stored in the member attribute. The AD schema type sets the attributes to correspond with Active Directory 2008r2 values.
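To make the memberUid versus member distinction concrete, here is a toy sketch in Python. It is not how SSSD is implemented; the directory entries, DNs, and the function name `groups_for` are invented purely to show the two lookup styles side by side.

```python
# Hypothetical directory entries keyed by DN; every name here is made up.
USER_DN = "cn=Jane Doe,ou=people,dc=example,dc=com"
USERS = {USER_DN: {"uid": "jdoe"}}

GROUPS = {
    # rfc2307 style: members listed by login name in memberUid.
    "cn=legacy,ou=groups,dc=example,dc=com": {
        "cn": "legacy",
        "memberUid": ["jdoe"],
    },
    # rfc2307bis style: members listed by full DN in member.
    "cn=admins,ou=groups,dc=example,dc=com": {
        "cn": "admins",
        "member": [USER_DN],
    },
}


def groups_for(user_dn, schema):
    """Return the group names a lookup would find under the given schema."""
    uid = USERS[user_dn]["uid"]
    found = []
    for entry in GROUPS.values():
        if schema == "rfc2307":
            # Match on the user's name in memberUid.
            if uid in entry.get("memberUid", []):
                found.append(entry["cn"])
        else:
            # rfc2307bis-style lookup: match on the user's DN in member.
            if user_dn in entry.get("member", []):
                found.append(entry["cn"])
    return sorted(found)


print(groups_for(USER_DN, "rfc2307"))     # ['legacy']
print(groups_for(USER_DN, "rfc2307bis"))  # ['admins']
```

If the directory only populates member, as in the admins entry, an rfc2307 lookup comes back empty, which would leave only the group implied by the gid number.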