You’ve used Microsoft Teams to collaborate with individuals assigned to a project, and even created Team spaces for ad hoc groups to work on a problem. But what if you want to discuss the office’s community outreach program or plan the next employee engagement activity? Microsoft Teams is a perfect place to host that collaboration too.

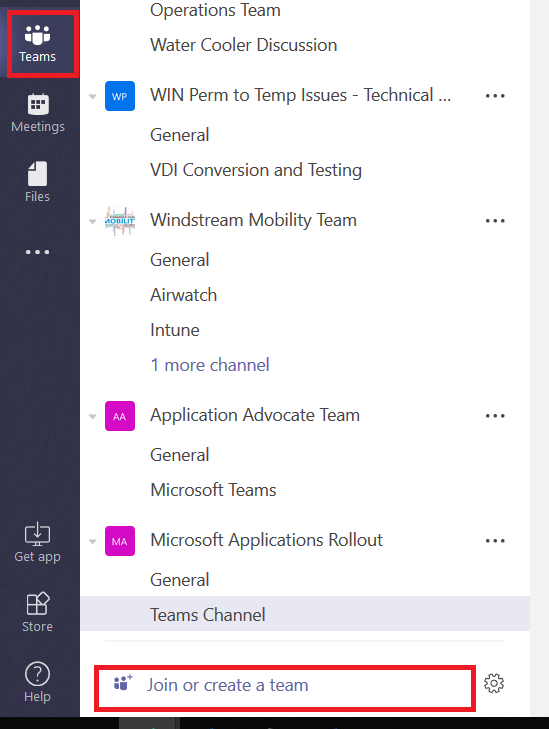

You can create a public Team space. A public Team lets anyone in your organization join without approval, which works well when you don’t need to verify qualifications before someone joins. From the “Teams” tile, click “Join or create a team” at the bottom of your Team list.

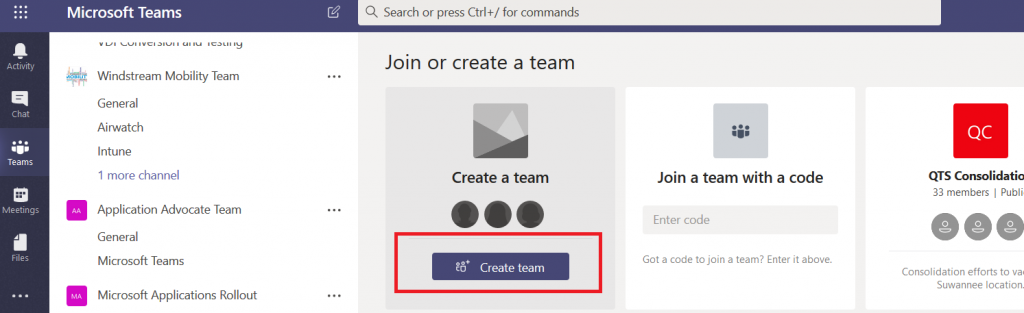

Click “Create team”.

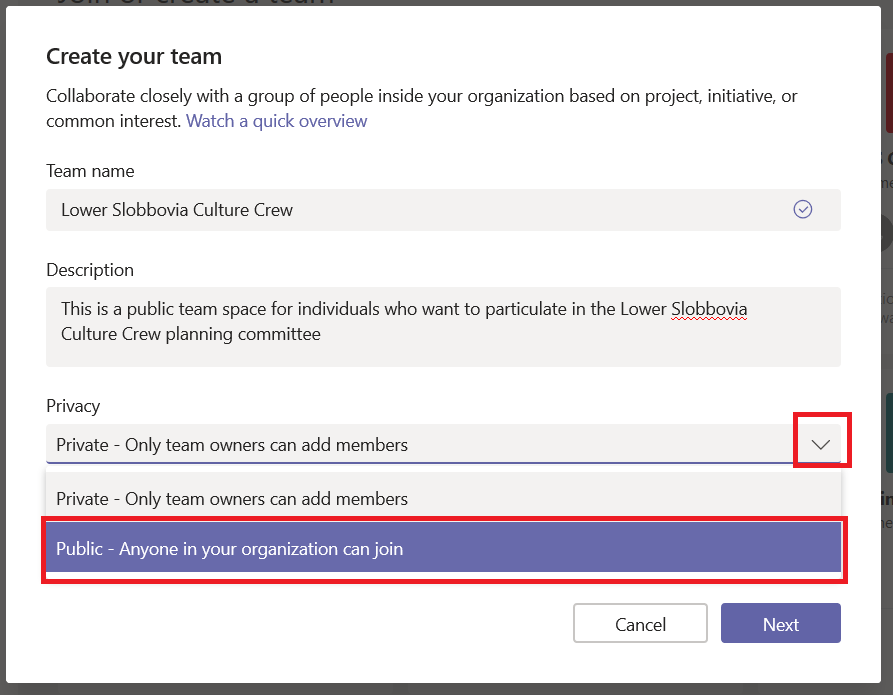

Provide a team name and description so potential members can tell whether it’s something that interests them. Then, under “Privacy”, click the down arrow and select “Public – Anyone in your organization can join”.

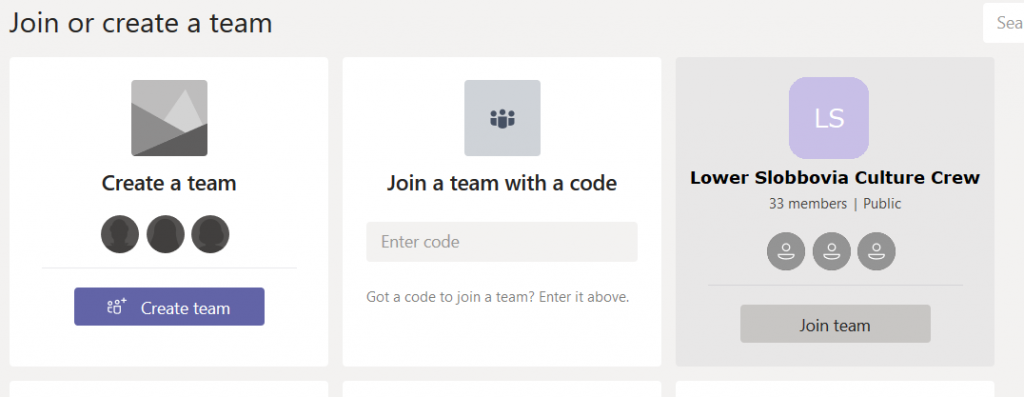
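If you’d rather script this than click through the dialog, the same three choices (name, description, privacy) map onto the request body that Microsoft Graph accepts when creating a team. The sketch below only builds and prints that body; it does not send anything. The team name and description are made-up examples, `build_team_payload` is a hypothetical helper, and actually posting the body to the `/teams` endpoint assumes you have an access token and permission to create teams, neither of which is covered here.

```python
import json

# Endpoint and template URL as documented for Microsoft Graph v1.0.
GRAPH_TEAMS_URL = "https://graph.microsoft.com/v1.0/teams"
STANDARD_TEMPLATE = "https://graph.microsoft.com/v1.0/teamsTemplates('standard')"


def build_team_payload(name, description, public=True):
    """Build the JSON body for a create-team request (hypothetical helper)."""
    if not name.strip():
        raise ValueError("team name must not be empty")
    return {
        "template@odata.bind": STANDARD_TEMPLATE,
        "displayName": name,
        "description": description,
        # Mirrors the "Privacy" drop-down in the dialog.
        "visibility": "Public" if public else "Private",
    }


# Example values are assumptions, not taken from a real tenant.
payload = build_team_payload(
    "Lower Slobbovia Outreach",
    "Planning community outreach events",
    public=True,
)
print(json.dumps(payload, indent=2))
```

Passing `public=False` produces the body for a private team, which corresponds to leaving the Privacy setting at its default.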

Click “Next”. You can start your team by adding some members, or you can click “Skip” and let members add themselves. Voilà, you’ve got a public team. (Well, give it a few minutes … there’s some background magic that needs to complete.) When people click “Join or create a team”, your team will show up in the Team gallery. They may need to search for it, and as of 25 October 2018 it’s a “begins with” search: “Slobbovia” will not find my team, but “Lower” or “Lower Slobbovia” will. As soon as they click “Join team”, they are added as a member of your team.
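To make the “begins with” behaviour concrete, here is a small sketch of prefix matching. It is only an illustration of the idea, not how Teams implements its search. The team names are invented, and ignoring upper/lower case is an assumption on my part.

```python
def begins_with_search(query, team_names):
    """Return the team names that start with the query (case ignored by assumption)."""
    q = query.strip().casefold()
    return [name for name in team_names if name.casefold().startswith(q)]


# Hypothetical gallery contents, for illustration only.
teams = ["Lower Slobbovia Outreach", "Employee Engagement", "Upper Management Book Club"]

print(begins_with_search("Slobbovia", teams))        # no match: the name does not start with it
print(begins_with_search("Lower", teams))            # matches the first team
print(begins_with_search("Lower Slobbovia", teams))  # matches the first team
```

The practical upshot: when you tell people about your team, give them the start of the name, not a word from the middle.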

What if you don’t want your team to be that public? Say we only want people who actually work in East Slobbovia to join up. Then leave the Privacy setting at “Private – Only team owners can add members”. This means your new Team won’t appear in the “Join or create a team” gallery, so you’ll need to give people a link to it.

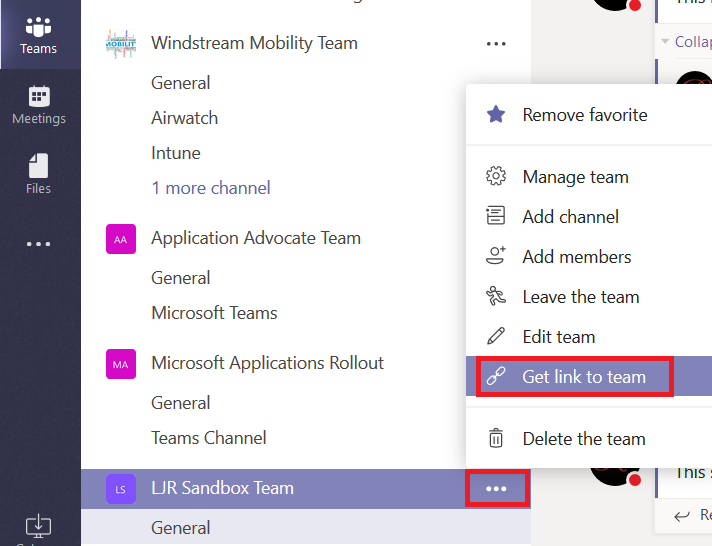

From the hamburger menu next to the team name, select “Get link to team”.

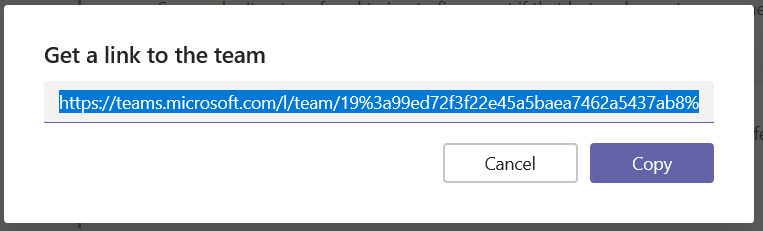

The URL will be displayed in a new pane – click “Copy” and you’ll have the URL in your clipboard.

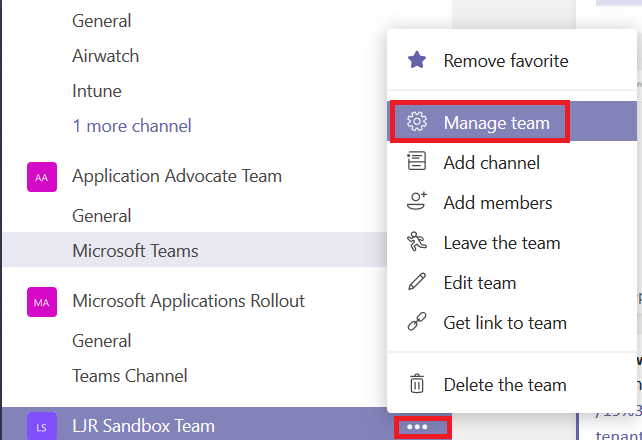

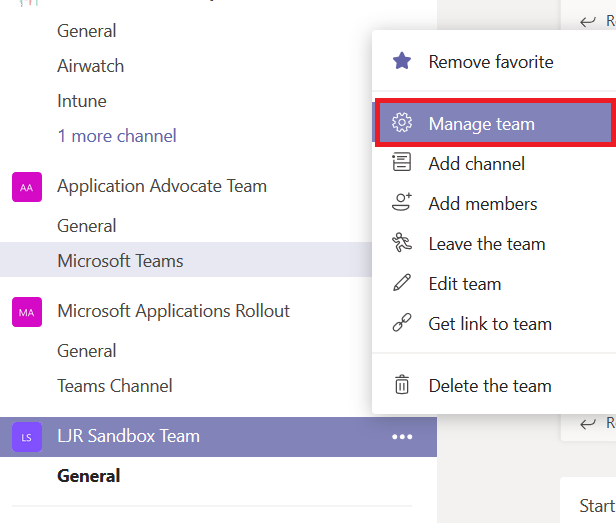

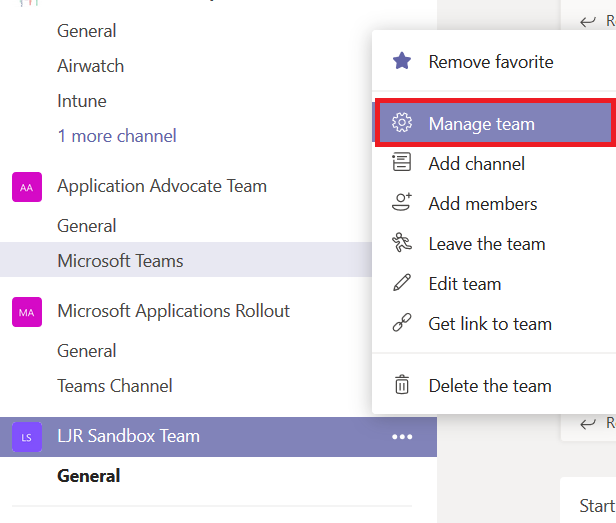

Publicize your URL: you could post it to Stream, have the link included in a local newsletter, e-mail it to potential Team members … however you like to get the word out. When someone follows the URL, a membership request will appear on the Team. To review it, click the hamburger menu next to the team name and select “Manage team”.

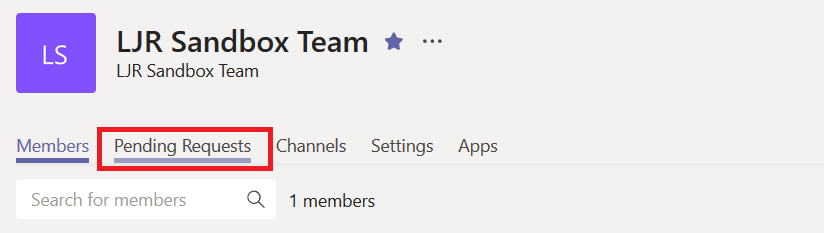

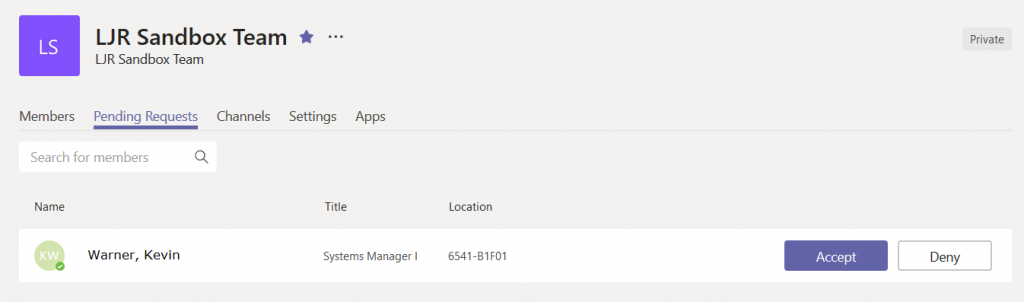

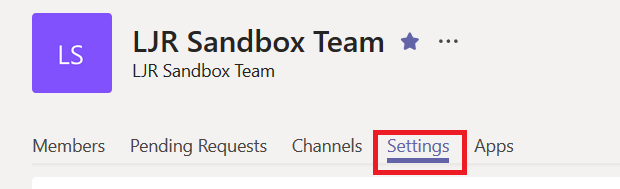

Click on the “Pending Requests” tab.

You’ll see, well, the pending requests. Click accept to add the person to your team, or click deny if you prefer.

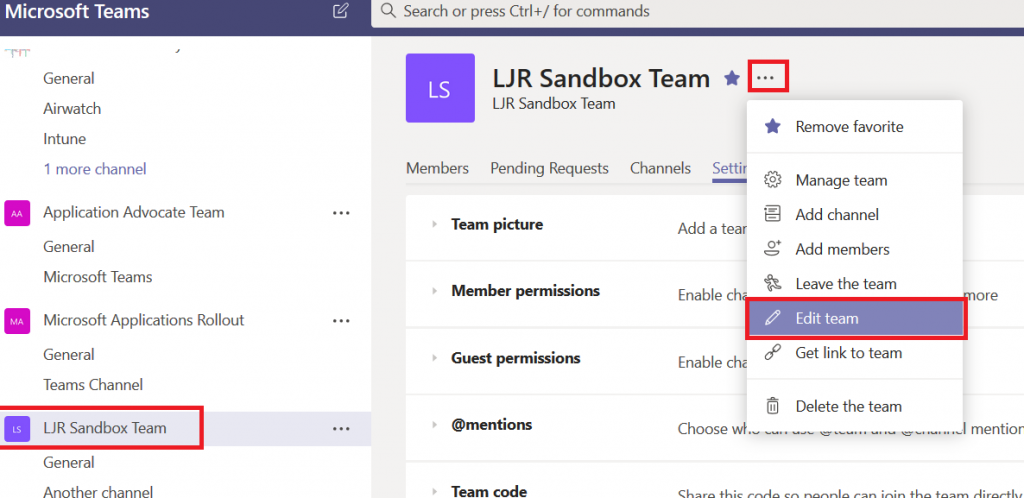

Changing the settings – If you change your mind, that’s OK too. In your Teams list, click on the Team itself (not the hamburger menu next to it, and not one of the channels under it). Then click on the hamburger menu next to the team name in the right-hand pane. Select “Edit team”.

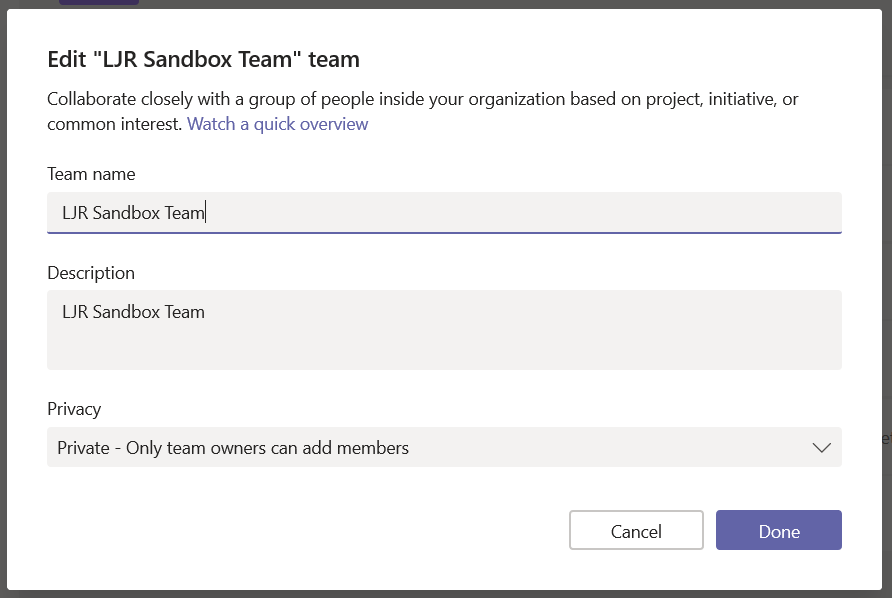

You can change the privacy settings and click “Done”.

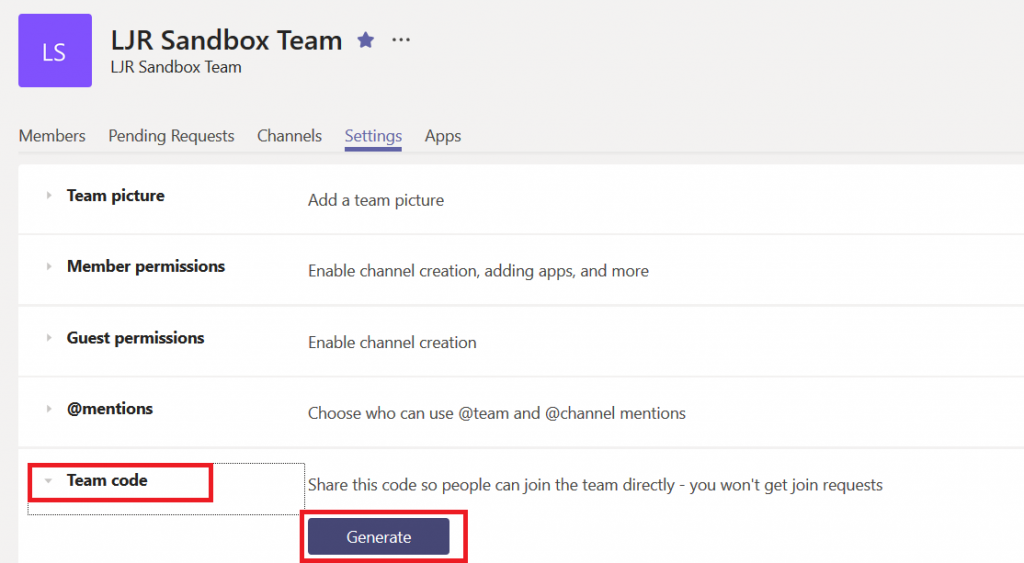

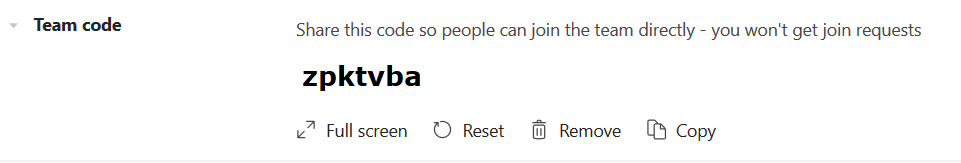

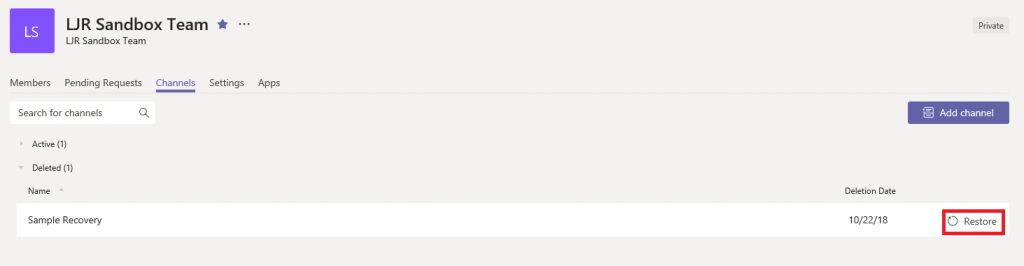

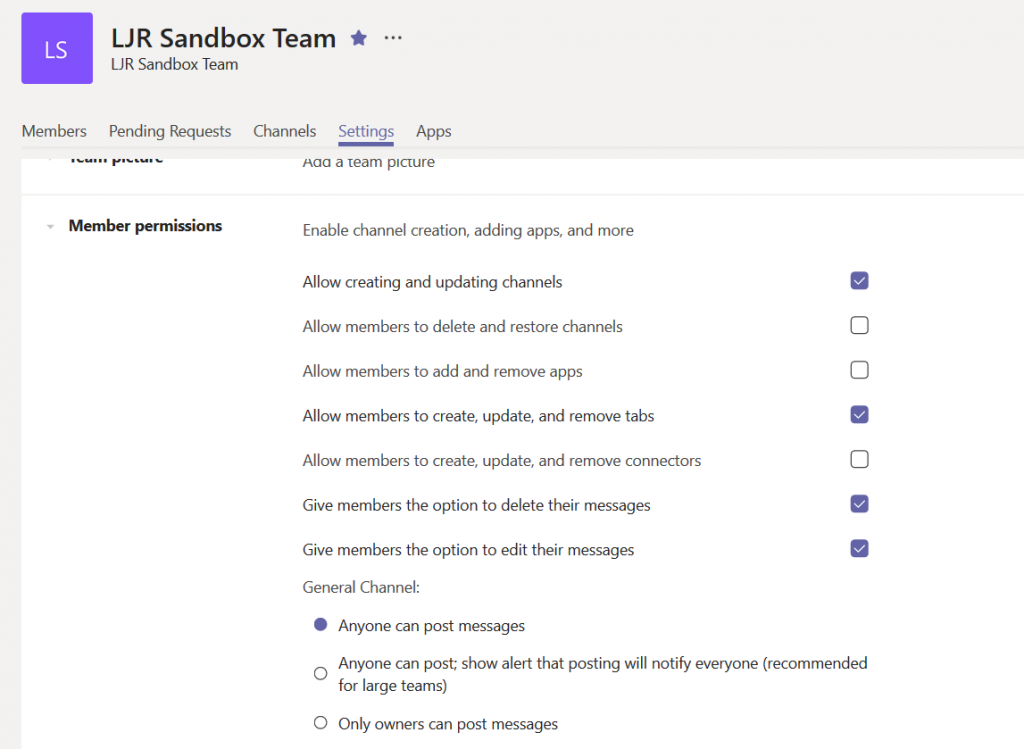

Bonus option … for those of you who have stuck with me this far! You can create a code for your team that allows invited individuals to join the team without waiting for your approval. Click the hamburger menu next to the team name and select “Manage team”. On the “Settings” tab, expand the “Team code” section. Click “Generate”.

Now there’s a code associated with your team.

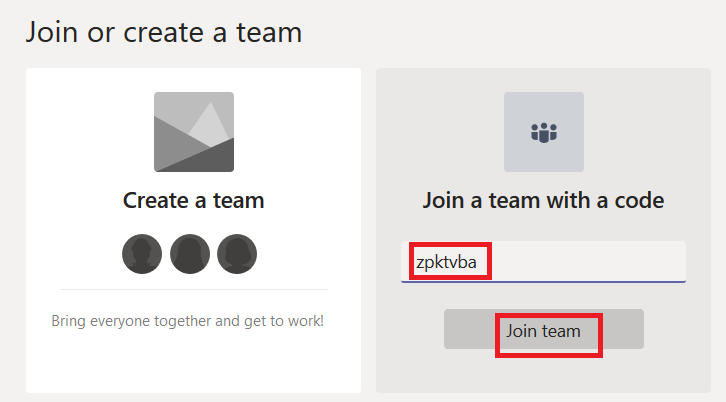

Individuals who have the code can go to the Team gallery and enter the code in the tile that says “Join a team with a code”.