A few years ago, I implemented a custom password filter in Active Directory. At some point, it began accepting passwords that should have been rejected. The updated code is available at https://github.com/ljr55555/OpenPasswordFilter, and the following is the approach I used to isolate the cause of the failure.

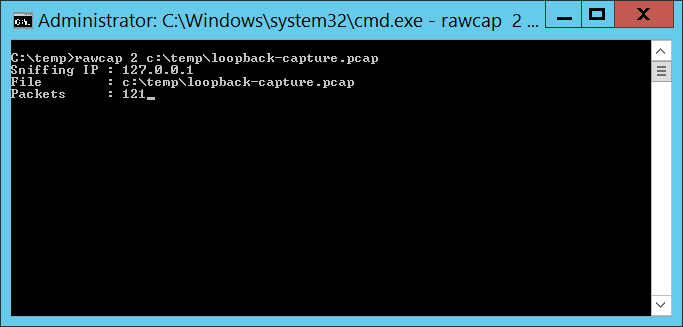

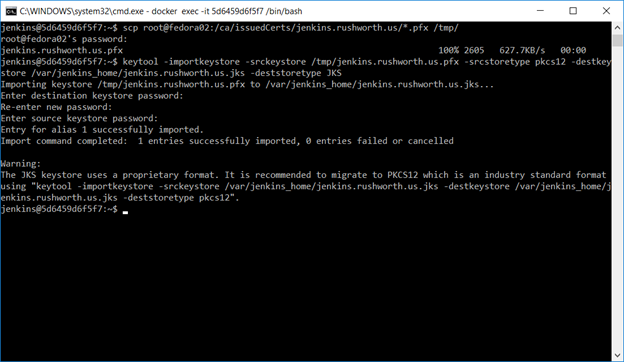

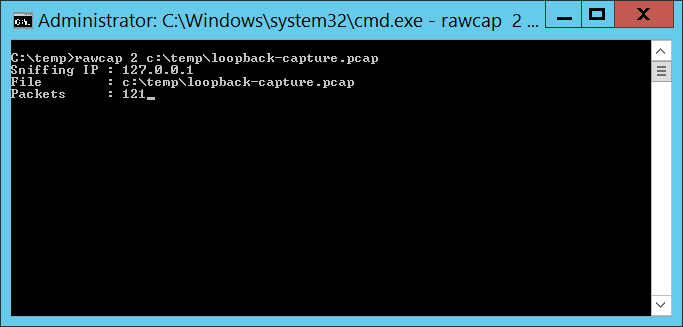

Technique #1 – Netcap on the loopback

There are utilities that capture network traffic on the loopback interface, which helps isolate problems in the service binary or in the inter-process communication. I used RawCap because it’s free for commercial use. There are other tools too; consult the search engine of your choice.

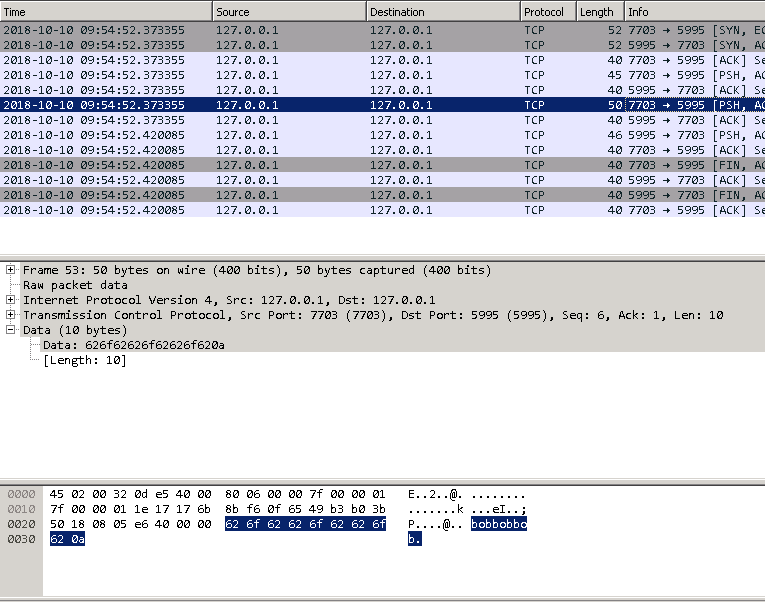

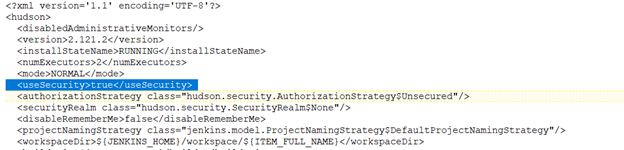

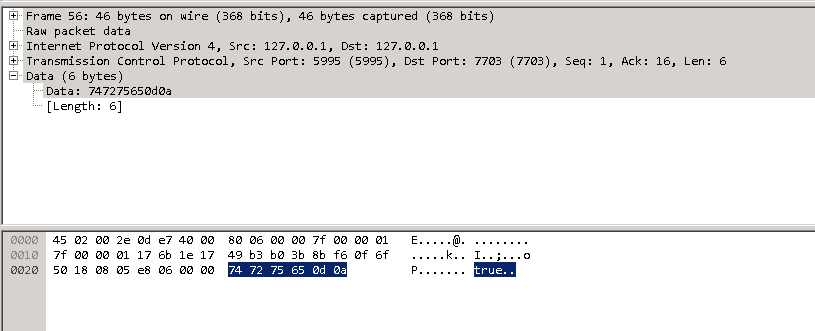

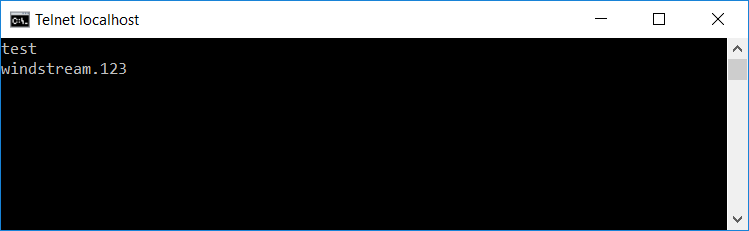

The capture file can be opened in Wireshark. The communication is done in clear text (which is why I bound the service to localhost), so you’ll see the password:

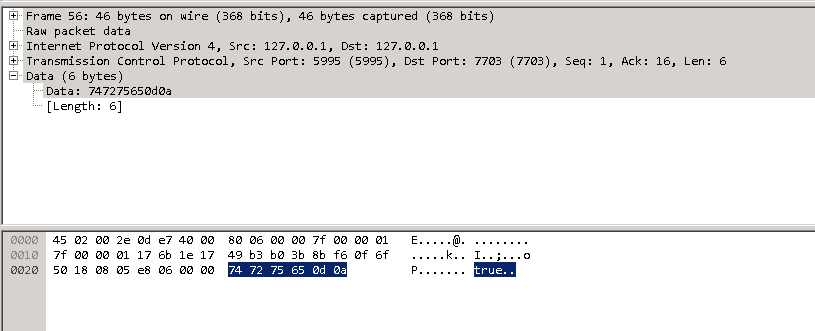

And the response:

To ensure process integrity, the full communication is for the client to send “test\n” then “PasswordToTest\n”, after which the server sends back either true or false.
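The exchange described above can be reproduced with a few lines of Python, which is handy for generating known traffic while a capture is running. This is a minimal sketch, not part of OpenPasswordFilter: the function names are hypothetical, UTF-8 encoding and a newline-terminated reply are assumptions, and port 5995 is the port the telnet test later in this post connects to.

```python
import socket


def build_request(candidate: str) -> bytes:
    """Build the two-line request: the "test" command, then the candidate."""
    # Assumption: the service accepts UTF-8 text terminated by "\n".
    return f"test\n{candidate}\n".encode("utf-8")


def read_reply(sock: socket.socket) -> str:
    """Read until a newline, the peer closing, or a socket timeout."""
    chunks = []
    try:
        while True:
            data = sock.recv(1024)
            if not data:
                break  # server closed the connection
            chunks.append(data)
            if b"\n" in data:
                break  # assumption: the reply ends with a newline
    except socket.timeout:
        pass  # return whatever arrived before the timeout
    return b"".join(chunks).decode("utf-8", errors="replace").strip()


def ask_filter(candidate: str, host: str = "127.0.0.1",
               port: int = 5995, timeout: float = 5.0) -> str:
    """Send one candidate password and return the raw reply text."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(build_request(candidate))
        return read_reply(sock)
```

Calling `ask_filter("bobbob")` on the machine running the service should return the reply as a plain string. The sketch deliberately returns the raw text rather than a Boolean, so whatever the service sends is visible as-is.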

Technique #2 – Debuggers

Attaching a debugger to lsass.exe is not fun. Use a remote debugger: until you tell the debugger to proceed, the OS is pretty much useless, and if the OS is waiting on you to click something running locally, you are quite out of luck. A remote debugger lets you use a functional operating system to tell the debugger to proceed, at which point the system being debugged returns to service.

Install the SDK debugging utilities on your domain controller and on another box. Which SDK debugging tool? That’s going to depend on your OS. For Windows 10 and Windows Server 2012 R2, the Windows 10 SDK (Debugging Tools For Windows 10) works. See https://docs.microsoft.com/en-us/windows-hardware/drivers/debugger/debugger-download-tools or Google it.

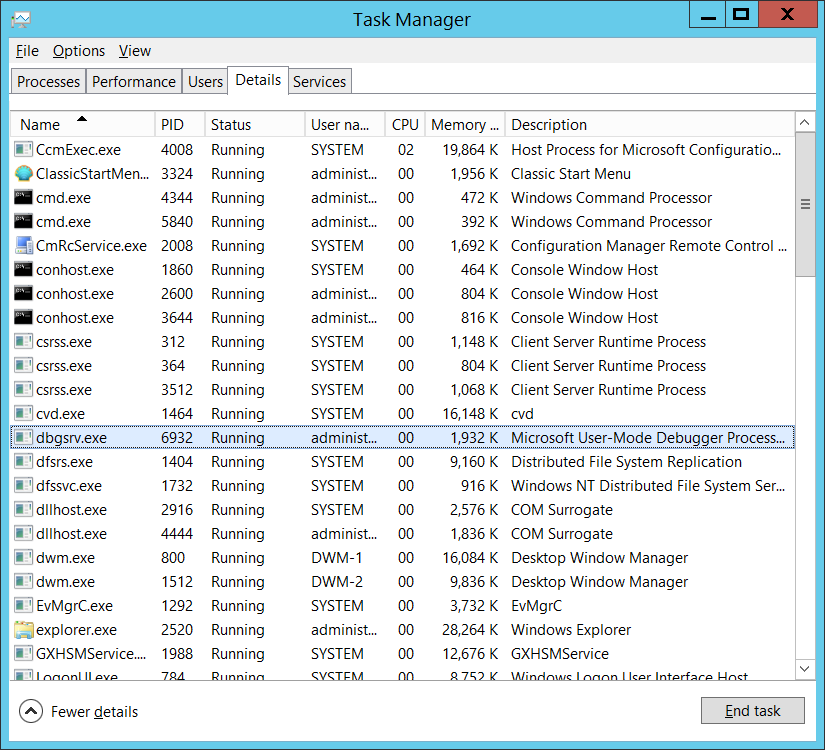

On the domain controller, find the PID of LSASS and write it down (472 in my example). Check the IP address of the domain controller (10.104.164.110 in my example).
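If you would rather script the PID lookup than read it out of Task Manager, the built-in `tasklist` command can emit CSV, which is easy to parse. The following is a minimal sketch under that assumption; the function names are hypothetical, and `find_pids` only works on Windows because it shells out to `tasklist`.

```python
import csv
import io
import subprocess


def parse_tasklist_csv(output, image_name="lsass.exe"):
    """Extract PIDs for image_name from `tasklist /FO CSV /NH` output."""
    pids = []
    for row in csv.reader(io.StringIO(output)):
        # Expected row shape: image name, PID, session name, session #, memory.
        # Informational lines (e.g. "no tasks match") have one field and are skipped.
        if len(row) >= 2 and row[0].lower() == image_name.lower():
            pids.append(int(row[1]))
    return pids


def find_pids(image_name="lsass.exe"):
    """Run tasklist (Windows only) and return the matching PIDs."""
    result = subprocess.run(
        ["tasklist", "/FI", f"IMAGENAME eq {image_name}", "/FO", "CSV", "/NH"],
        capture_output=True, text=True, check=True,
    )
    return parse_tasklist_csv(result.stdout, image_name)
```

On the domain controller, `find_pids()` should return a one-element list holding the value to pass to `-p` later on.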

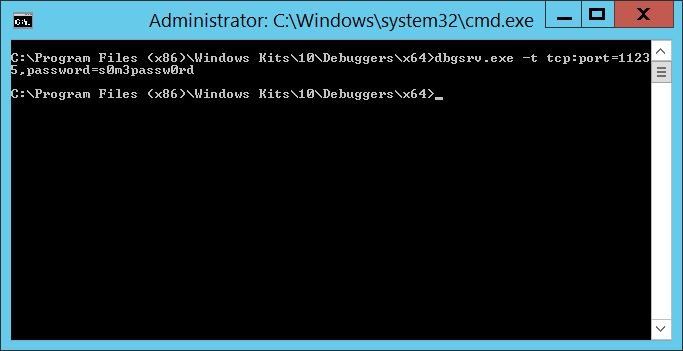

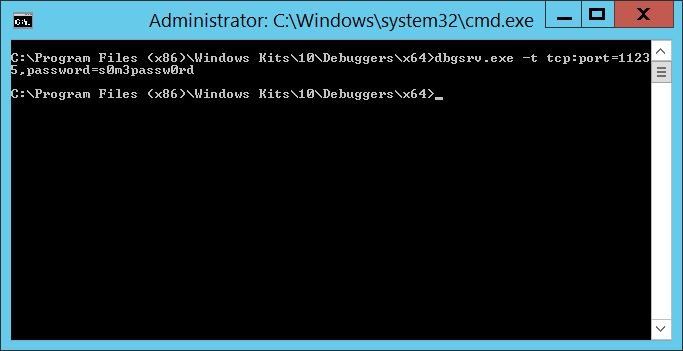

From the domain controller, run:

dbgsrv.exe -t tcp:port=11235,password=s0m3passw0rd

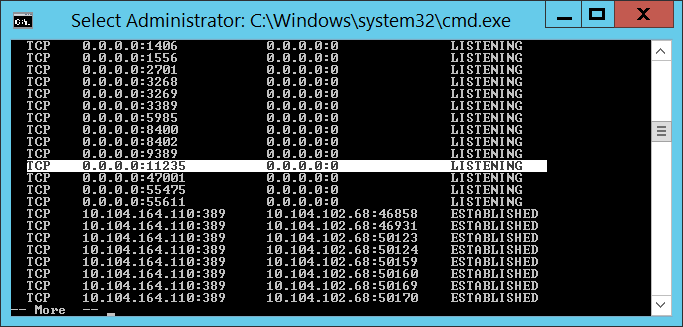

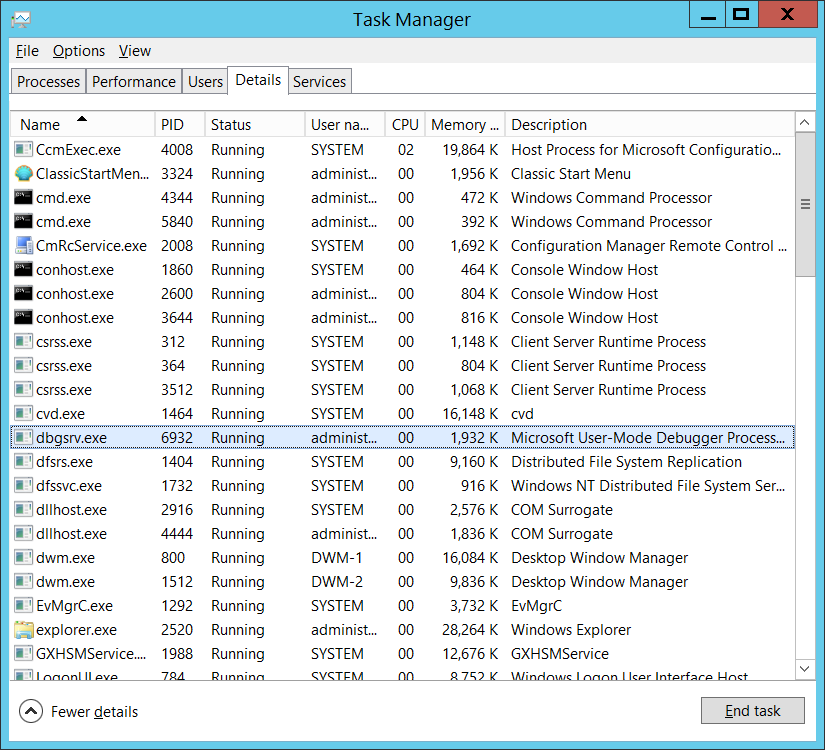

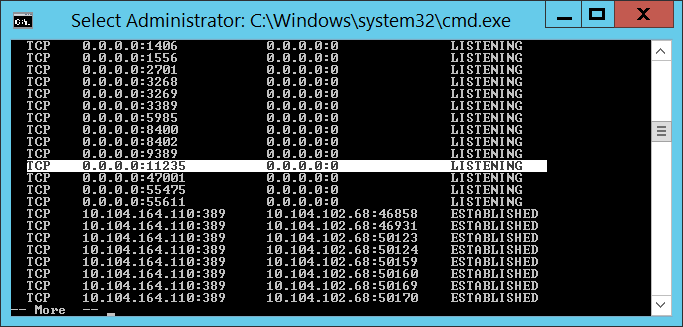

Here, port=11235 can be any unused port and password=s0m3passw0rd can be any string you want; you just have to use the same values when you connect from the client. Hit enter and you’ve got a debugging server. It won’t look like it did anything, but you’ll see the port bound in netstat:

And the binary running in Task Manager:

From the other box, run the following command (substituting the correct server IP, port, password, and process ID):

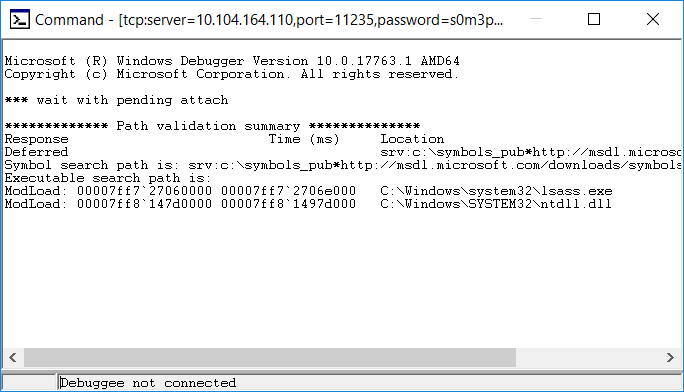

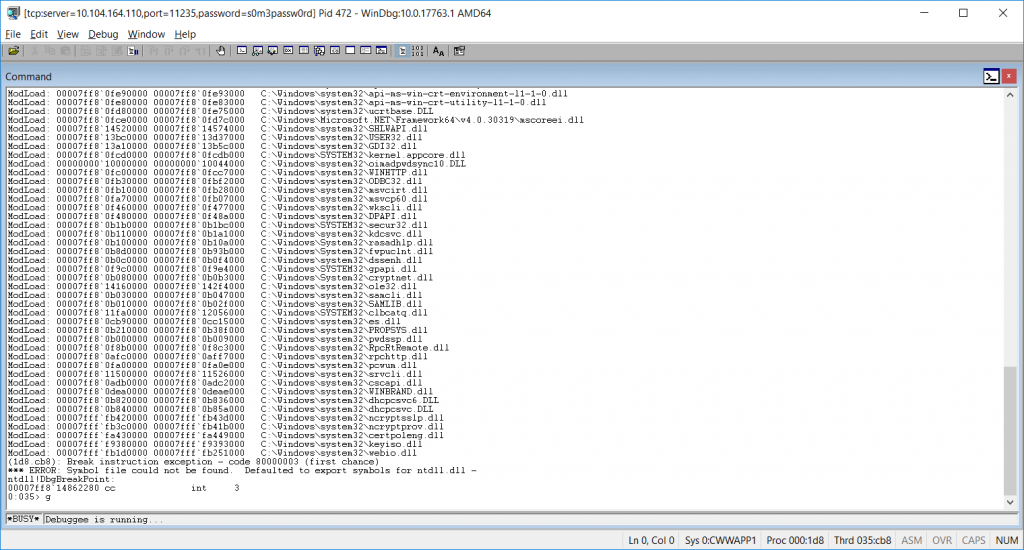

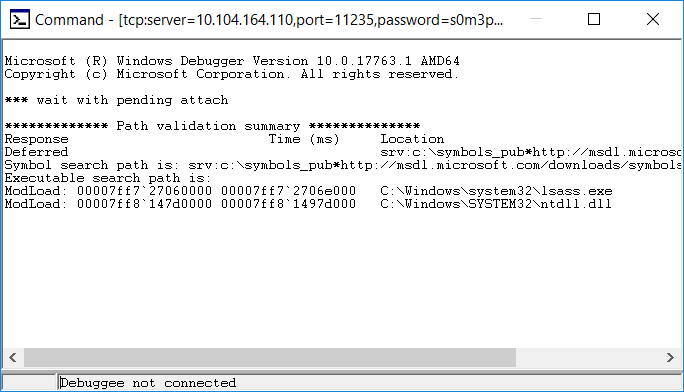

windbg.exe -y "srv:c:\symbols_pub*http://msdl.microsoft.com/downloads/symbols" -premote tcp:server=10.104.164.110,port=11235,password=s0m3passw0rd -p 472

This attaches your WinDBG to the debugging server & includes an internet-hosted symbol path. Don’t worry when it says “Debuggee not connected” at the bottom – that just means the connection has not completed yet. If it didn’t connect at all (firewall, bad port number, bad password), you’d get a pop-up error indicating that the initial connection failed.
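When the initial connection does fail, it helps to separate "the port is unreachable" (firewall, wrong port) from "the password is wrong". A plain TCP connect from the client box answers the first question. This is a minimal sketch with a hypothetical function name; it only tests TCP reachability, says nothing about the password, and how dbgsrv reacts to a bare connect-and-close has not been verified here, so run it before attaching rather than during a debugging session.

```python
import socket


def port_is_reachable(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be established."""
    try:
        # create_connection raises OSError (refused, timed out, unreachable)
        # when the connection cannot be made.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

With the example values from this post, the call would be `port_is_reachable("10.104.164.110", 11235)`. A `False` result points at the firewall or the port number rather than the password.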

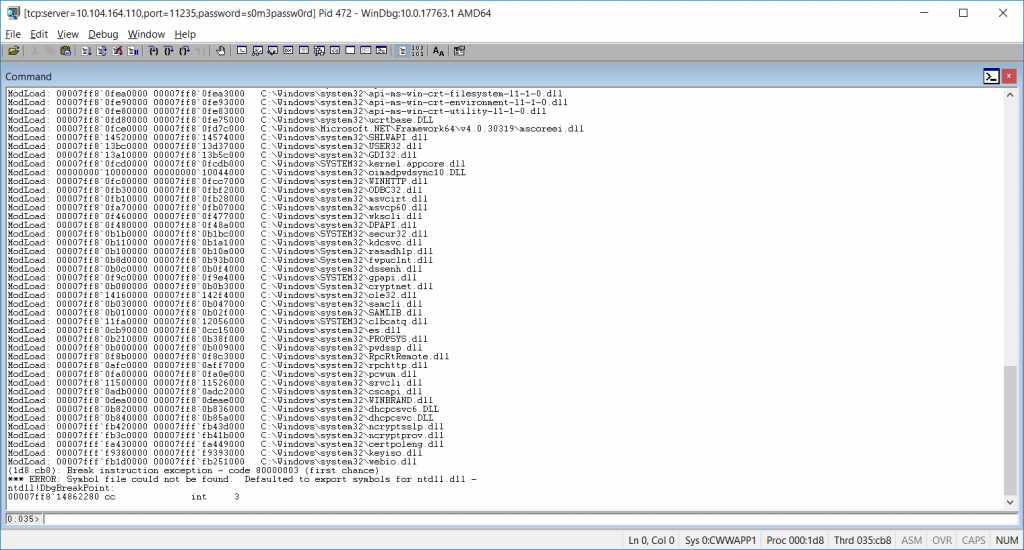

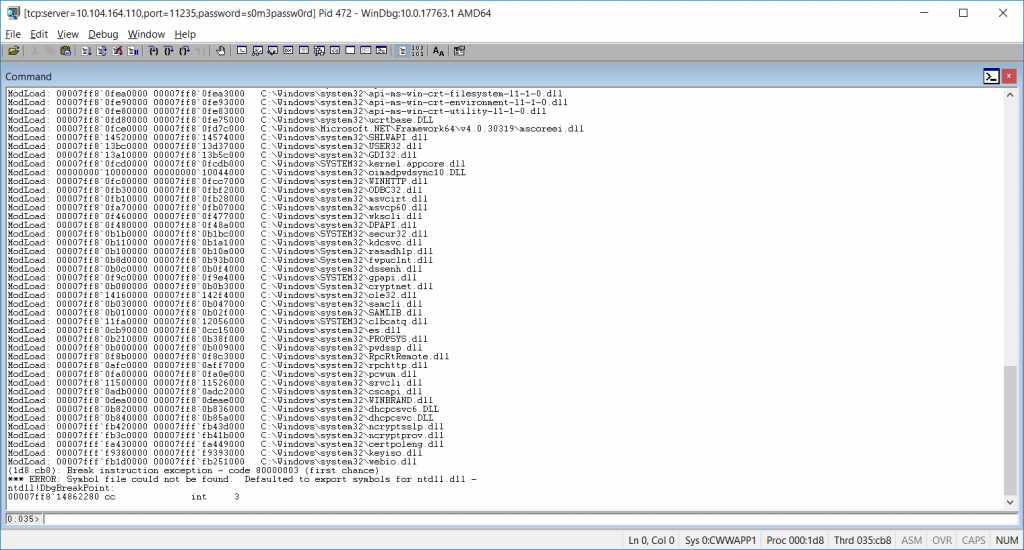

Wait for it … this may take a long time to load up, during which time your DC is vegged. But eventually, you’ll be connected. Don’t try to use the DC yet – it will just seem hung, and trying to get things working just makes it worse. Once the debugger is connected, send ‘g’ to the debugger to commence – and now the DC is working again.

Down at the bottom of the command window, there’s a status (0:035> below) followed by a field where you enter commands. Type the letter g in there & hit enter.

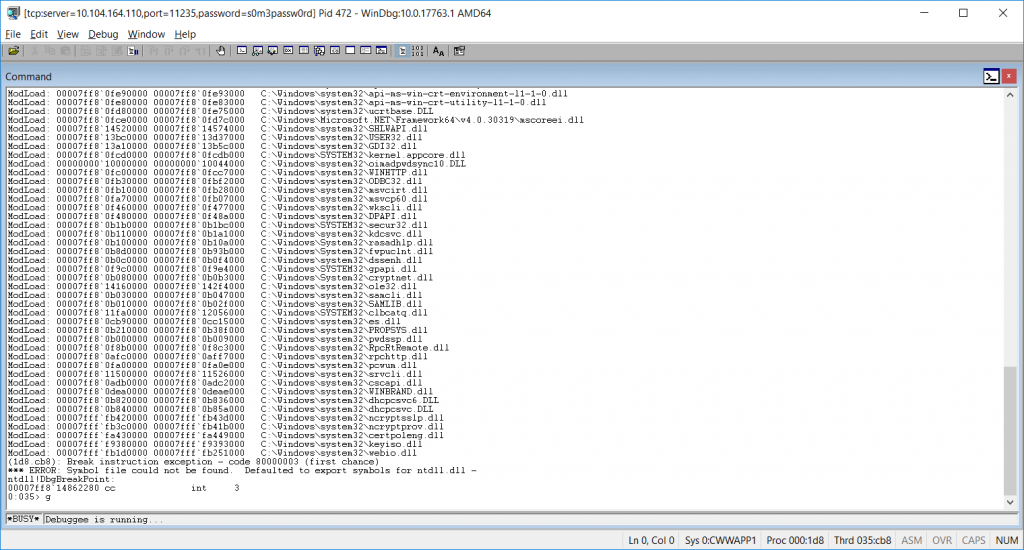

The status will then say “Debuggee is running …” and your server is again responsive to user requests.

When you reach a failing test, pause the debugger with a break command (Debug=>Break, or Ctrl-Break) which will veg out the DC again. You can view the call stack, memory, etc.

To search the address space for an ASCII string use:

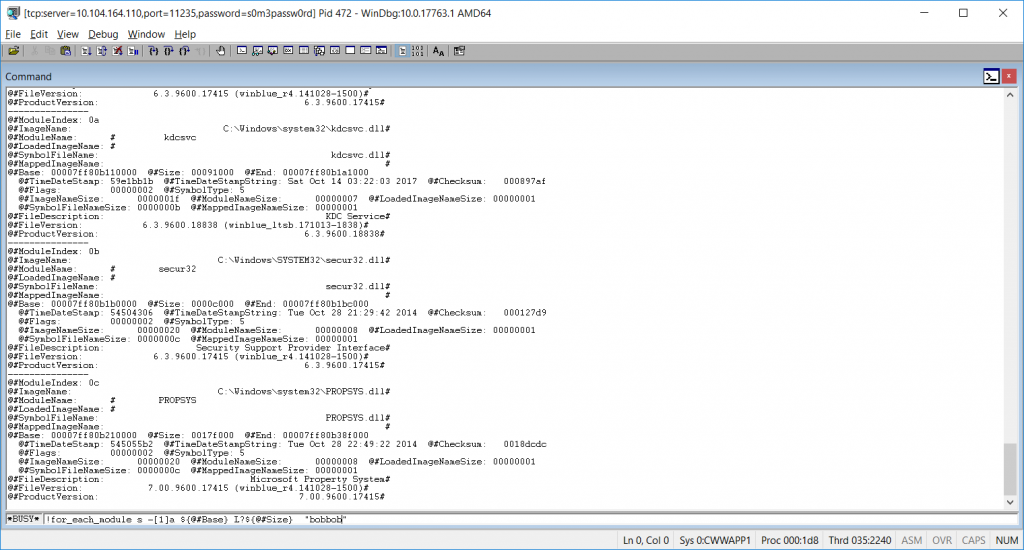

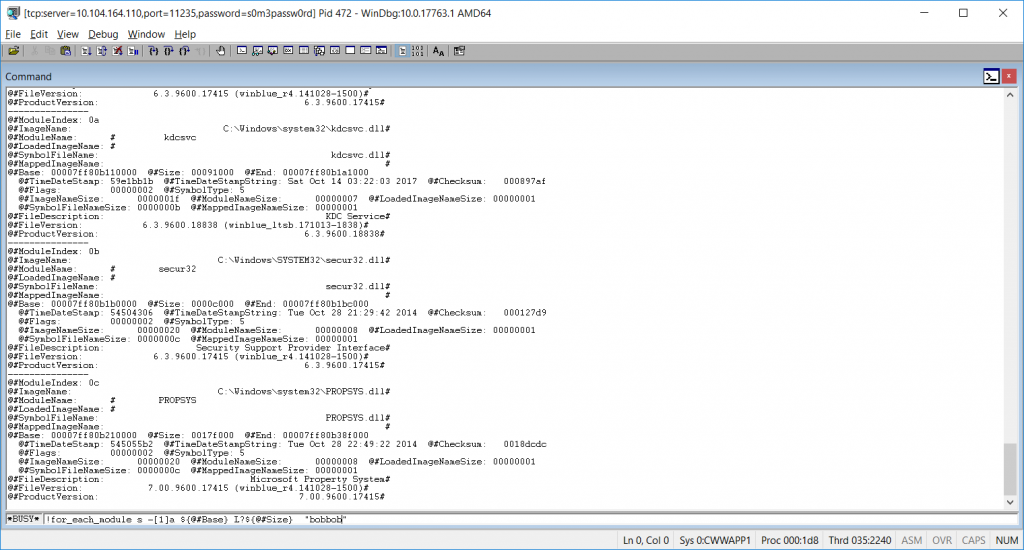

!for_each_module s -[1]a ${@#Base} L?${@#Size} "bobbob"

Where “bobbob” is the password I had tested.

Alternately, run the “psychodebug” build where LARGEADDRESSAWARE is set to NO and you can search just the low 2-gig memory space (32-bit process memory space):

s -a 0 L?80000000 "bobbob"

*The true/false server response is an ASCII string, not a Boolean.*

Once you have found what you are looking for, “go” the debugger (F5, Debug=>Go, or ‘g’) to restore the server to an operational state. Break again when you want to look at something.

To disconnect, break and send “qd” to the debugger (quit and detach). If you do not detach with qd, the process being debugged terminates. Having lsass.exe terminate really freaks out the server, and it will go into an auto-recovery “I’m going to reboot in one minute” mode. It’ll come back, but detaching without terminating the process is a lot nicer.

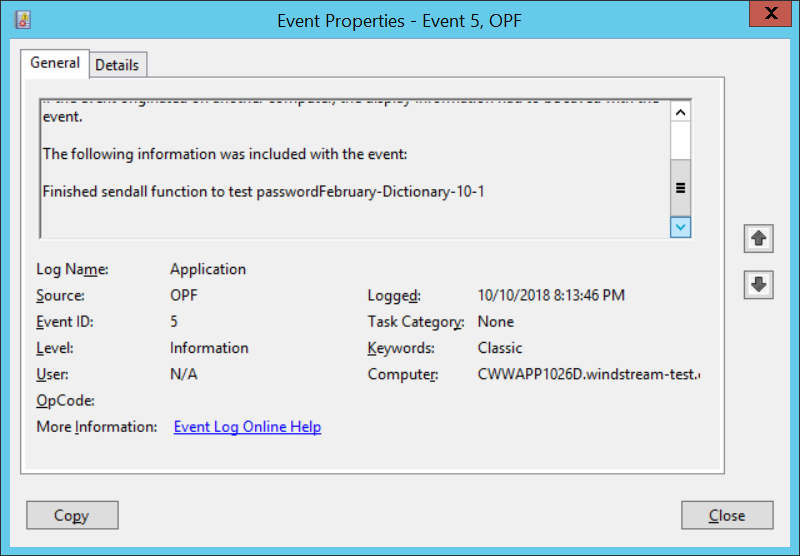

Technique #3 – Compile a verbose version

I added a number of event log writes within the DLL (obviously, it’s not a good idea in production to log candidate passwords in clear text!). While the debugger will get you there eventually, it means half an hour’s worth of searching for each event (the timing is tricky, because the failed event has to still be in memory when you break the debugger). Having each iteration write what it was doing to the event log was FAAAAAR simpler.

And since I’m running this on a dev DC where the passwords coming across are all generated from a load sim script … not exactly super-secret stuff hitting the event log.

Right now, I’ve got an incredibly verbose DLL on APP556 under d:\tempcsg\ljr\2\debugbuild\psychodebug\ … all of the commented out event log writes from https://github.com/ljr55555/OpenPasswordFilter aren’t commented out.

Stop the OpenPasswordFilter service, put the verbose DLL and executables in place, and reboot. Change some passwords, then look in the event viewer.

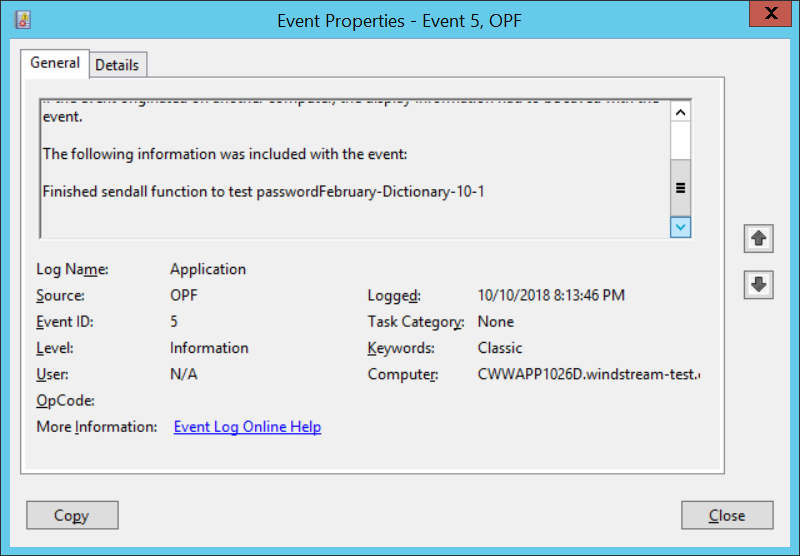

ERROR events are actual problems that would show up either way. INFORMATION events are extras. I haven’t bothered to learn how to properly register event sources in Windows yet 🙂 You can find the error content at the bottom of the “this isn’t registered” complaint:

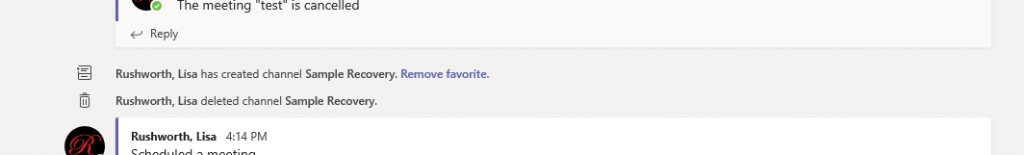

You will see events for the following steps:

DLL starting CreateSocket

About to test password 123paetec123-Dictionary-1-2

Finished sendall function to test password123paetec123-Dictionary-1-2

Got t on test of paetec123-Dictionary-1-2

The final line will either say “Got t” for true or “Got f” for false.

Technique #4 – Running the code through the debugger

Whilst there’s no good way to get the “Notification Package” hook to run the DLL through the debugger, you can install Visual Studio on a dev domain controller and execute the service binary through the debugger. This allows you to set breakpoints and watch variable values as the program executes – which makes it a whole lot easier than using WinDBG to debug the production code.

Grab a copy of the source code – we’re going to be making some changes that should not be promoted to production, so I work on a temporary copy of the project and delete the copy once testing has completed.

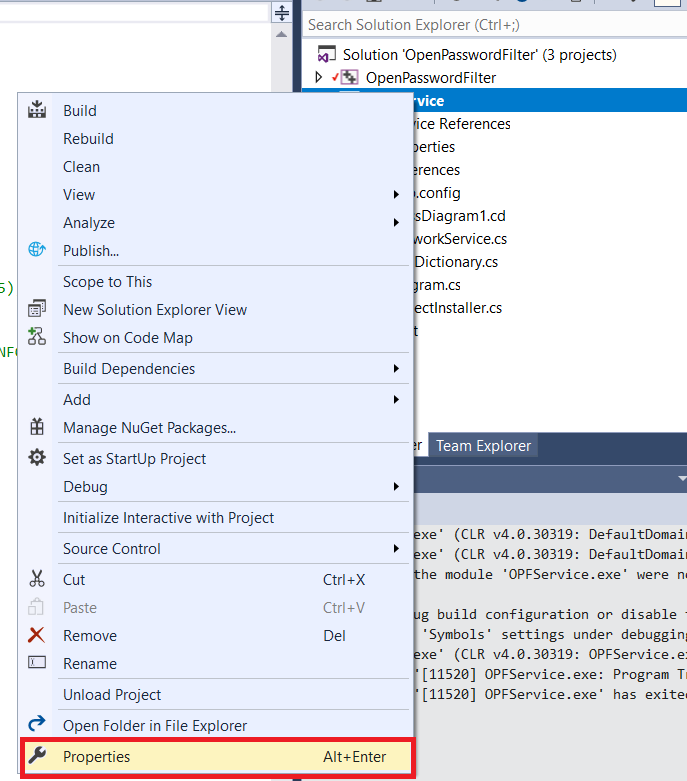

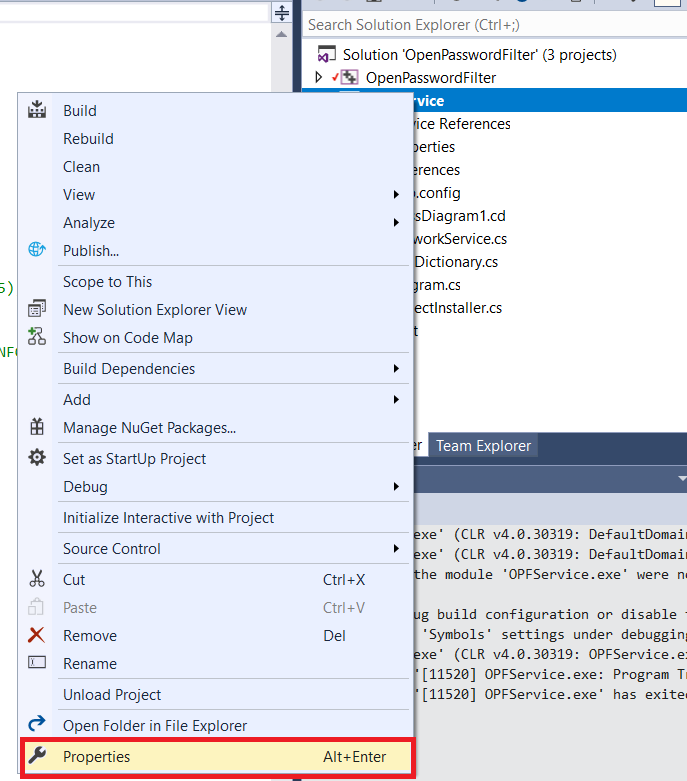

Open the project in Visual Studio. Right-click OPFService in the “Solution Explorer” and select “Properties”

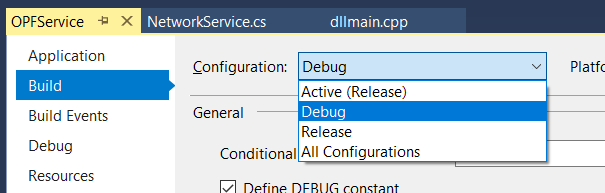

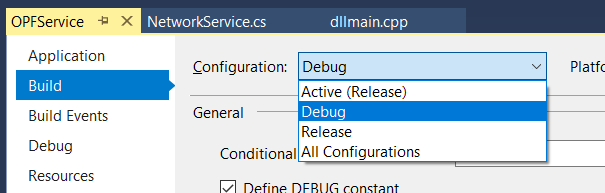

Change the build configuration to “Debug”

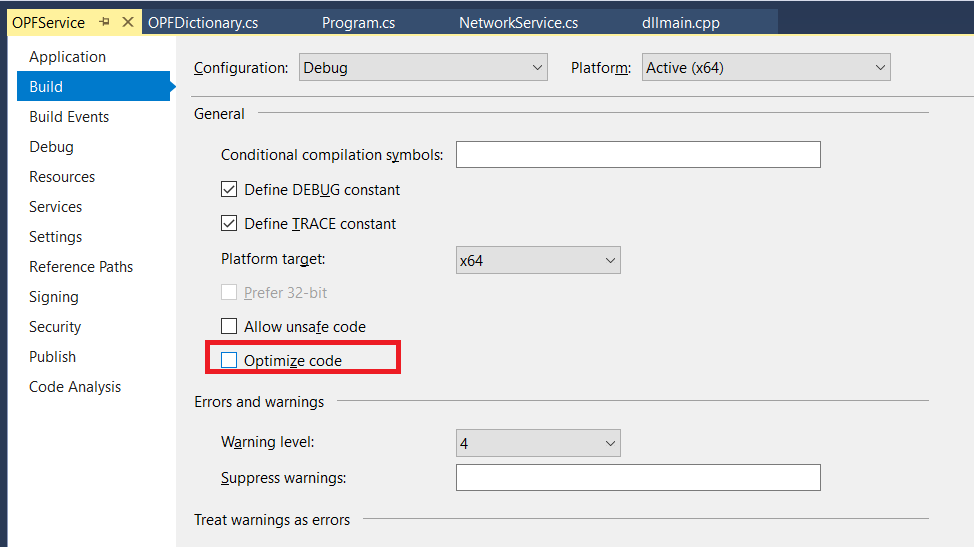

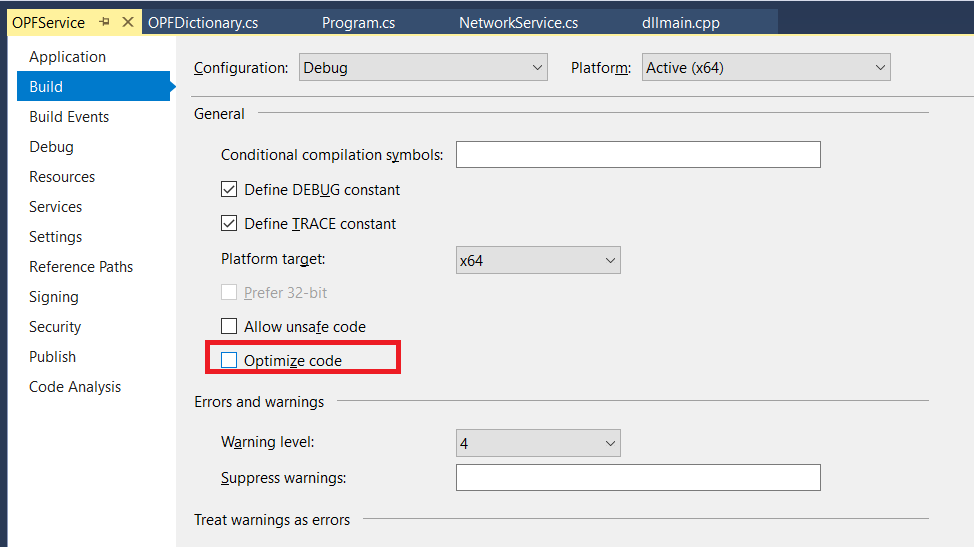

Un-check “Optimize code” – code optimization is good for production builds, but it will wipe out variable values when you want to see them.

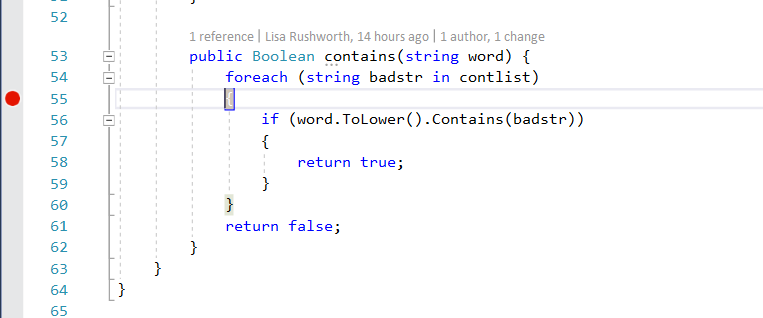

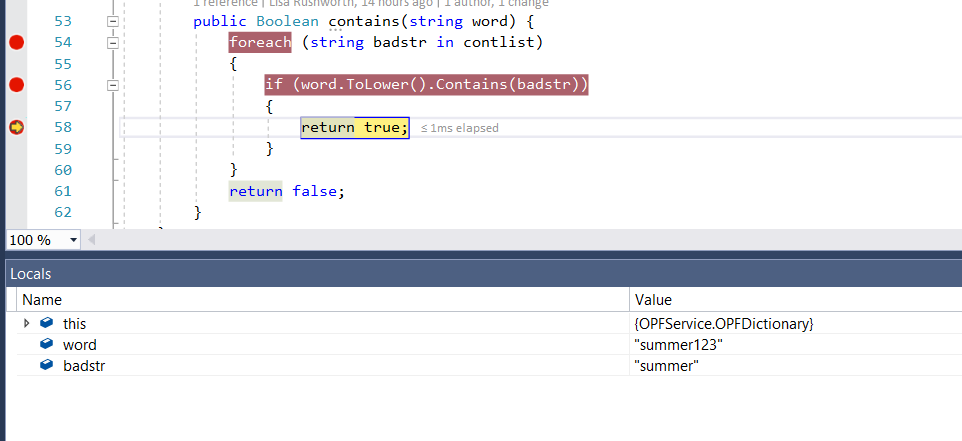

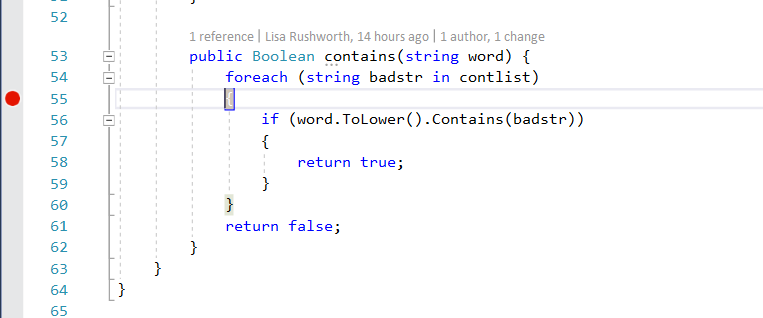

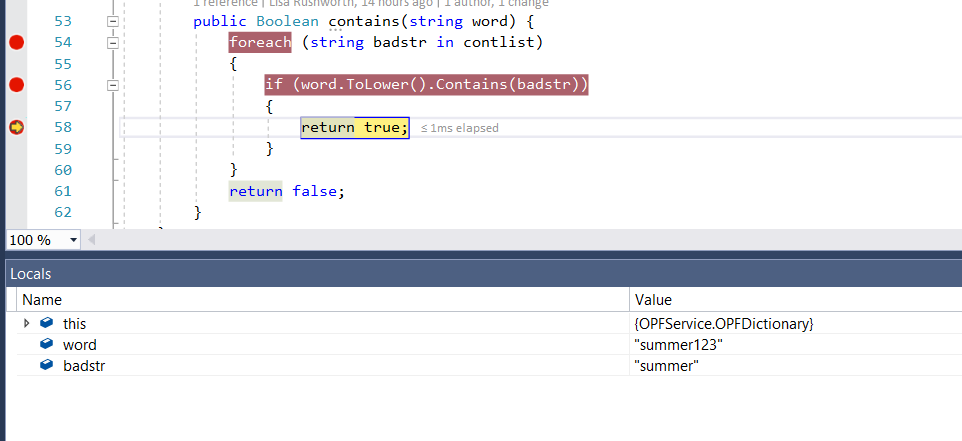

Set a breakpoint – in the OPFDictionary.cs file, the loop that checks whether the proposed word is contained in the banned word list is a good place for one. The return statements are another good place, as a breakpoint there pauses program execution right before a password test iteration completes.

Build the solution (Build=>Build Solution). Stop the Windows OpenPasswordFilter service.

Launch the service binary through the debugger (Debug=>Start Debugging).

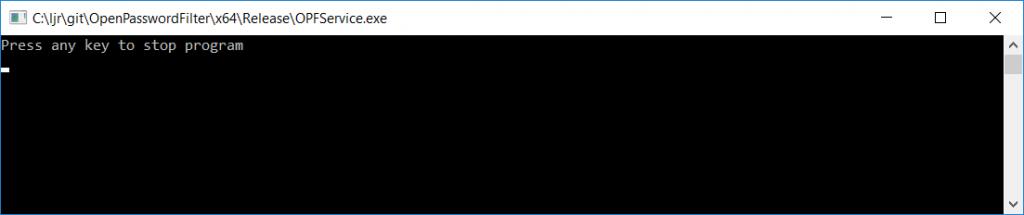

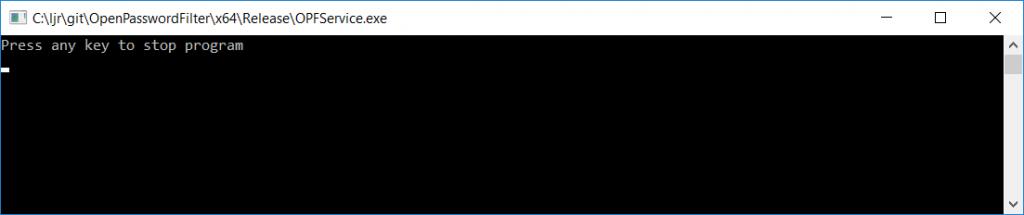

Because the program is being run interactively instead of through a service, you’ll get a command window that says “Press any key to stop the program”. Minimize this.

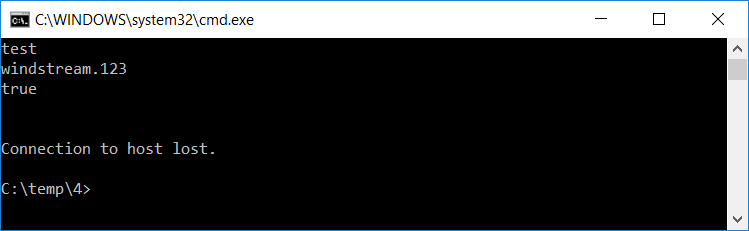

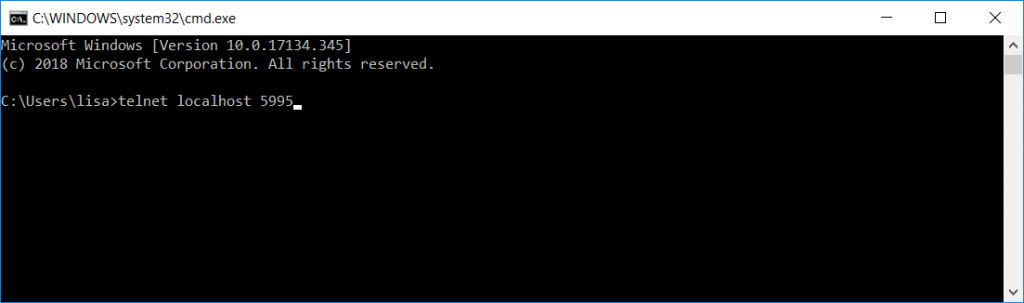

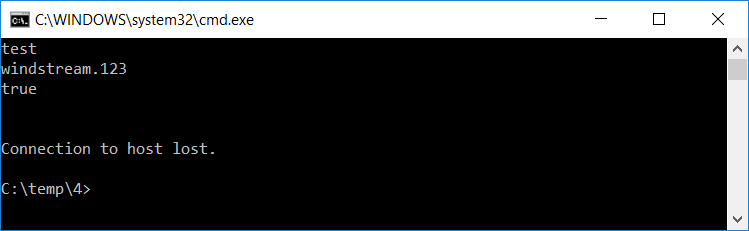

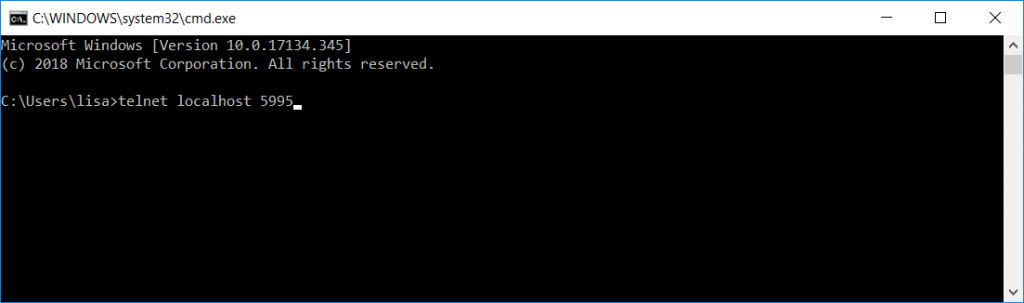

From a new command prompt, telnet to localhost on port 5995 (the telnet client is not installed by default, so you may need to use “Turn Windows features on or off” and enable the telnet client first).

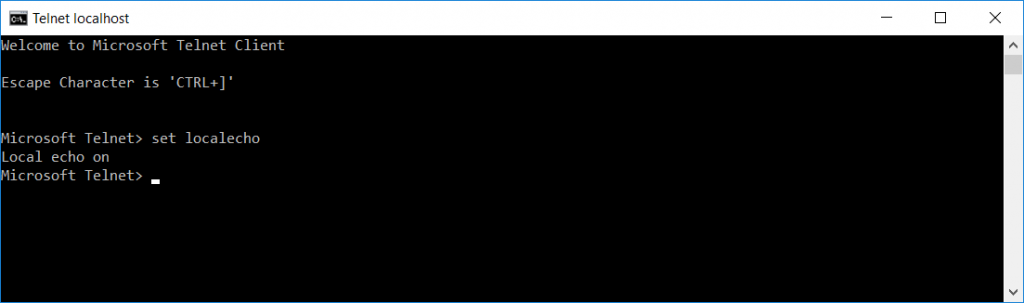

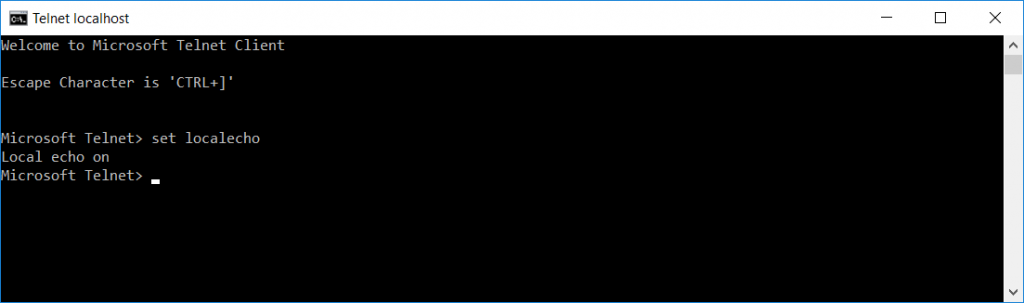

Once the connection is established, use CTRL and ] to get into the telnet command prompt. Type set localecho … now you’ll be able to see what you are typing.

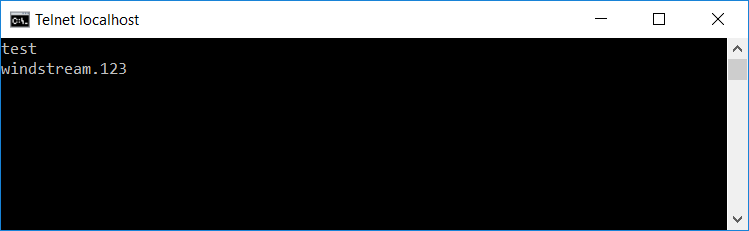

Hit enter again and you’ll return to the blank window that is your telnet client. Type test and hit enter. Then type a candidate password and hit enter.

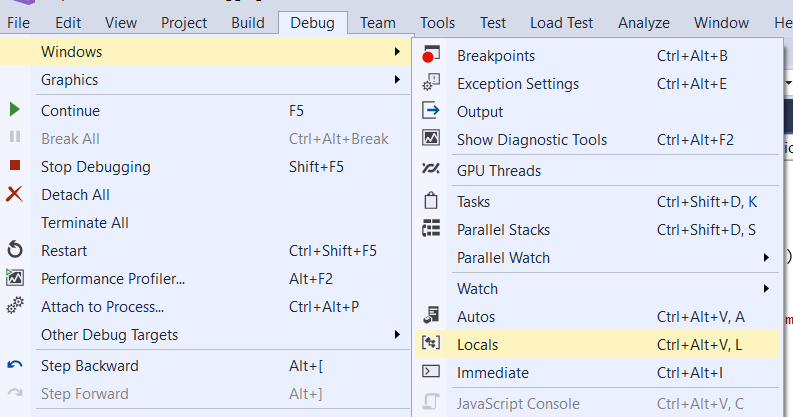

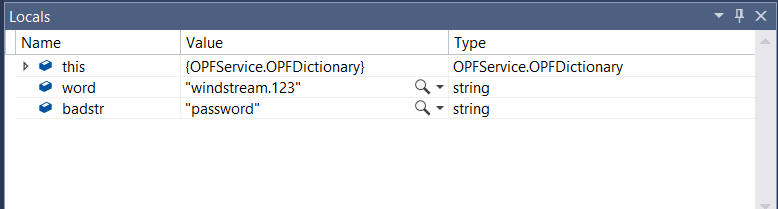

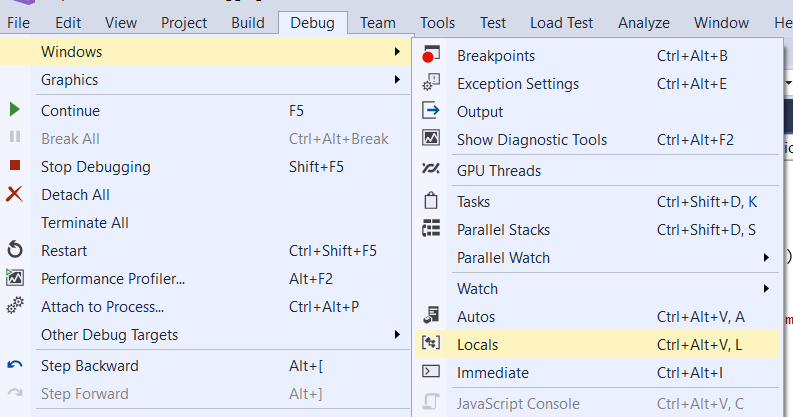

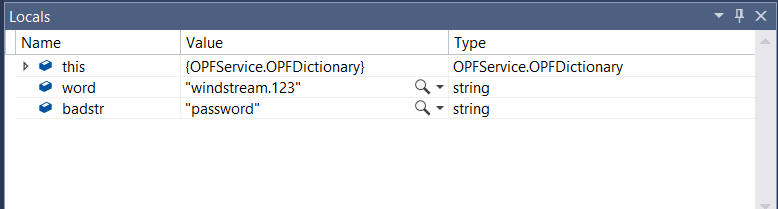

Program execution will pause at the breakpoint you’ve set. Return to Visual Studio. Select Debug=>Windows=>Locals to open a view of the variable values.

View the locals at the breakpoint, then hit F5 if you want to continue.

If you’ve set breakpoints on either of the return statements, program execution will also pause before the return … which gives you an opportunity to see which return is being used & compare the variable values again.

In this case, I submitted a password that was in the banned word list, so the program rightly evaluated line 56 to true and returned true.