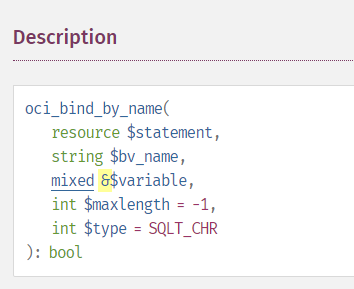

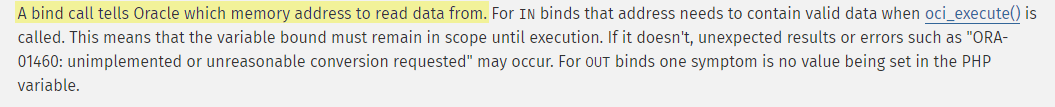

This vulnerability (SQL injection) occurs when you accept user input and then concatenate that input into the text of a SQL query. The basic remediation is to put placeholders in the query and use oci_bind_by_name to bind variables to those placeholders.

A query using an equivalence clause

The simplest case is a query with an equivalence clause.

The code:

$strQuery = "SELECT DISTINCT EXCHANGE_CARRIER_CIRCUIT_ID, CIRCUIT_DESIGN_ID FROM circuit$strDBLink WHERE EXCHANGE_CARRIER_CIRCUIT_ID = '$strECCKT' ORDER BY CIRCUIT_DESIGN_ID";

$stmt = oci_parse($kpiprd_conn, $strQuery);

oci_set_prefetch($stmt, 300);

oci_execute($stmt);

Becomes:

$strQuery = "SELECT DISTINCT EXCHANGE_CARRIER_CIRCUIT_ID, CIRCUIT_DESIGN_ID FROM circuit$strDBLink WHERE EXCHANGE_CARRIER_CIRCUIT_ID = :ecckt ORDER BY CIRCUIT_DESIGN_ID";

$stmt = oci_parse($kpiprd_conn, $strQuery);

oci_bind_by_name($stmt, ':ecckt', $strECCKT);

oci_set_prefetch($stmt, 300);

oci_execute($stmt);

The same placeholder technique works with a LIKE query. Use "select something from table where columnname like :placeholdername" followed by oci_bind_by_name($stmt, ":placeholdername", $strPlaceholderVariable). Any wildcard characters belong in the bound value, not in the query text.
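As a minimal sketch of the LIKE case, the snippet below reuses the circuit query from the equivalence example. The function names buildPrefixPattern and findCircuitsByPrefix are hypothetical, and appending a trailing % is only one possible matching rule. It also assumes $strDBLink comes from trusted configuration rather than user input, since it is interpolated into the SQL text and cannot be bound.

```php
<?php
// Hypothetical helper: append a trailing wildcard so the bound value
// matches any circuit ID that starts with the user's input.
function buildPrefixPattern(string $strInput): string
{
    return $strInput . '%';
}

// Hypothetical wrapper around the parse/bind/execute sequence.
// $conn is an open OCI8 connection; $strDBLink is assumed to be trusted.
function findCircuitsByPrefix($conn, string $strDBLink, string $strInput): array
{
    $strPattern = buildPrefixPattern($strInput);
    $strQuery = "SELECT DISTINCT EXCHANGE_CARRIER_CIRCUIT_ID, CIRCUIT_DESIGN_ID "
              . "FROM circuit$strDBLink "
              . "WHERE EXCHANGE_CARRIER_CIRCUIT_ID LIKE :ecckt "
              . "ORDER BY CIRCUIT_DESIGN_ID";
    $stmt = oci_parse($conn, $strQuery);
    // The wildcard travels inside the bound value, never in the SQL text.
    oci_bind_by_name($stmt, ':ecckt', $strPattern);
    oci_execute($stmt);
    $rows = [];
    oci_fetch_all($stmt, $rows, 0, -1, OCI_FETCHSTATEMENT_BY_ROW + OCI_ASSOC);
    oci_free_statement($stmt);
    return $rows;
}
```

Binding prevents the input from changing the structure of the query, but a % or _ typed by the user is still treated as a wildcard by LIKE.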

A query using an IN clause

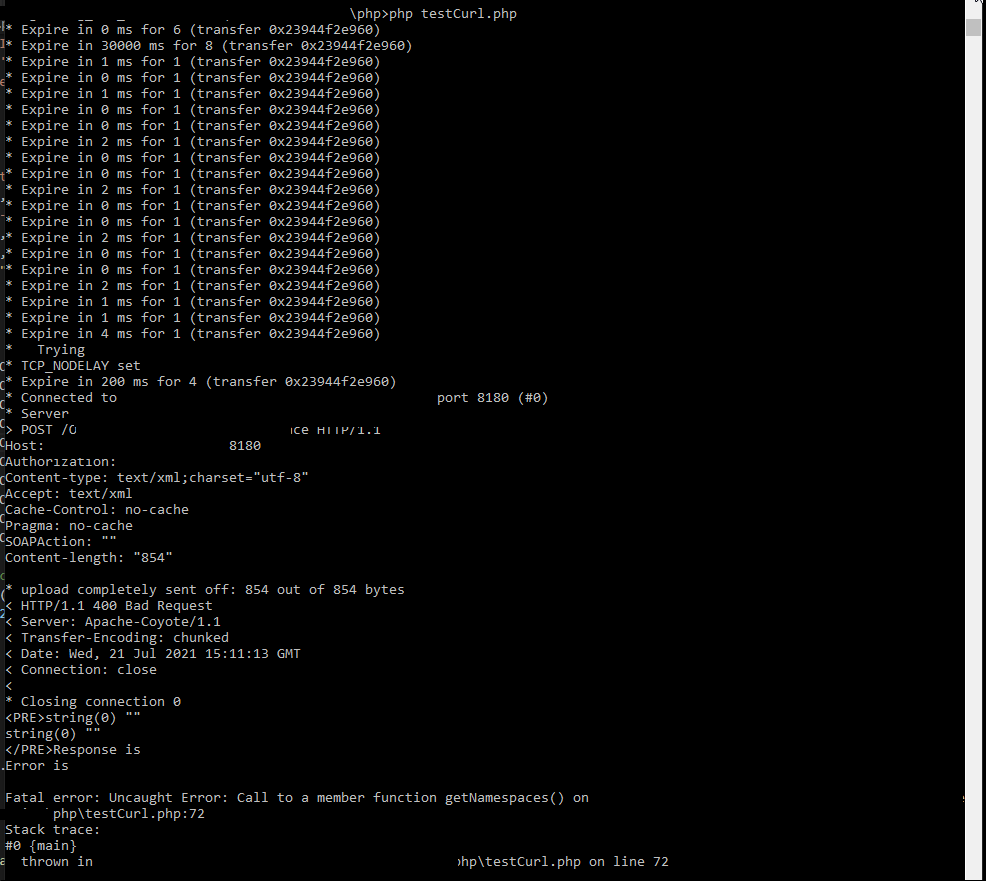

An IN clause is a little trickier. You could iterate through the array of values and build :placeholder1, :placeholder2, …, :placeholdern and then iterate through the array of values again to bind each value to its corresponding placeholder. A cleaner approach is to use an Oracle collection ($coll in this example) and bind the collection to a single placeholder.

$arrayCircuitNames = array('L101 /T1 /ELYROHU0012/ELYROHXA32C', '111 /ST01 /CHMPILCPF01/CHMPILCPHH3', 'C102 /OC12 /PHLAPAFG-19/PHLAPAFGW22');

$strQuery = "SELECT CIRCUIT_DESIGN_ID, EXCHANGE_CARRIER_CIRCUIT_ID FROM circuit$strDBLink WHERE EXCHANGE_CARRIER_CIRCUIT_ID in (SELECT column_value FROM table(:myIds))";

$stmt = oci_parse($kpiprd_conn, $strQuery);

$coll = oci_new_collection($kpiprd_conn, 'ODCIVARCHAR2LIST','SYS');

foreach ($arrayCircuitNames as $strCircuitName) {

$coll->append($strCircuitName);

}

oci_bind_by_name($stmt, ':myIds', $coll, -1, OCI_B_NTY);

oci_set_prefetch($stmt, 300);

oci_execute($stmt);
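For comparison, the iterate-twice approach mentioned above (one placeholder per value) might look like the following sketch. The function names and the :ecckt prefix are hypothetical, and $strDBLink is again assumed to be trusted configuration. Because oci_bind_by_name binds by reference, the second pass binds the array element itself rather than the foreach loop variable.

```php
<?php
// First pass: build ":ecckt0, :ecckt1, ..." plus a map of
// placeholder name => value. The prefix is arbitrary for this sketch.
function buildInPlaceholders(array $values, string $prefix = ':ecckt'): array
{
    $binds = [];
    foreach (array_values($values) as $i => $value) {
        $binds[$prefix . $i] = $value;
    }
    return [implode(', ', array_keys($binds)), $binds];
}

// Second pass: bind each value to its placeholder.
// $binds is taken by reference so each bound element outlives this call.
function bindAll($stmt, array &$binds): void
{
    foreach (array_keys($binds) as $name) {
        oci_bind_by_name($stmt, $name, $binds[$name]);
    }
}

// Hypothetical wrapper; $conn is an open OCI8 connection.
function findCircuitsByName($conn, string $strDBLink, array $arrayCircuitNames): array
{
    [$strPlaceholders, $arrayBinds] = buildInPlaceholders($arrayCircuitNames);
    $strQuery = "SELECT CIRCUIT_DESIGN_ID, EXCHANGE_CARRIER_CIRCUIT_ID "
              . "FROM circuit$strDBLink "
              . "WHERE EXCHANGE_CARRIER_CIRCUIT_ID IN ($strPlaceholders)";
    $stmt = oci_parse($conn, $strQuery);
    bindAll($stmt, $arrayBinds);
    oci_set_prefetch($stmt, 300);
    oci_execute($stmt);
    $rows = [];
    oci_fetch_all($stmt, $rows, 0, -1, OCI_FETCHSTATEMENT_BY_ROW + OCI_ASSOC);
    oci_free_statement($stmt);
    return $rows;
}
```

This version needs a guard for an empty array (it would produce "IN ()", which is not valid SQL), and Oracle limits an IN list to 1000 expressions, which is one more reason to prefer the collection.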

Queries with multiple LIKE conditions

Queries with an OR’d group of LIKE clauses can be handled in a similar fashion: either iterate through the array twice, or create a collection of strings that include the wildcard characters and bind that collection to a single placeholder. With the collection, create a semi-join using an EXISTS predicate so that each row is compared against every pattern.

$arrayLocs = array('ERIEPAXE%', 'HNCKOHXA%', 'LTRKARXK%');

$strQuery = "select location_id, clli_code from network_location$strDBLink where exists (select 1 from TABLE(:likelocs) where clli_code like column_value) order by clli_code";

$stmt = oci_parse($kpiprd_conn, $strQuery);

$coll = oci_new_collection($kpiprd_conn, 'ODCIVARCHAR2LIST','SYS');

foreach ($arrayLocs as $strLocation) {

$coll->append($strLocation);

}

oci_bind_by_name($stmt, ':likelocs', $coll, -1, OCI_B_NTY);

oci_execute($stmt);

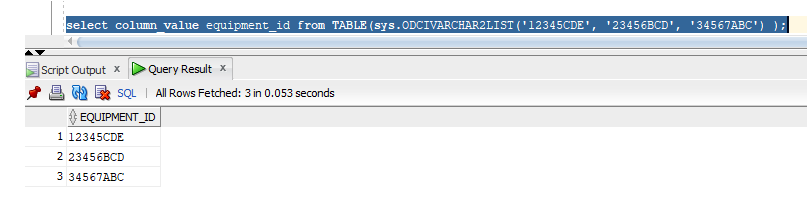

A query using DUAL

In some of my recursive queries, I need to include the original input in the result set. In particular, this query finds all equipment mounted under a specific equipment ID, and I want to include the input equipment ID as well. Having a bunch of 'select 12345 from dual' statements is fine until I need to use placeholders. This is another place where the collection can be leveraged:

select column_value equipment_id from TABLE(sys.ODCIVARCHAR2LIST('12345CDE', '23456BCD', '34567ABC') );

This adds each of the values to my result set, which means I can use a query like "select column_value as equipment_id from TABLE(:myIDs)" and bind the collection to :myIDs.
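A minimal sketch of that binding, following the same collection pattern as the IN clause example. The helper names makeVarcharList and selectInputIds are hypothetical, and in a real recursive query this SELECT would be one branch of a larger statement rather than the whole query.

```php
<?php
// Hypothetical helper: copy a PHP array into a SYS.ODCIVARCHAR2LIST collection.
function makeVarcharList($conn, array $values)
{
    $coll = oci_new_collection($conn, 'ODCIVARCHAR2LIST', 'SYS');
    foreach ($values as $value) {
        $coll->append($value);
    }
    return $coll;
}

// Hypothetical wrapper: return the input IDs as rows, replacing a series
// of "select ... from dual" statements. $conn is an open OCI8 connection.
function selectInputIds($conn, array $arrayEquipmentIds): array
{
    $strQuery = "select column_value as equipment_id from TABLE(:myIDs)";
    $stmt = oci_parse($conn, $strQuery);
    $coll = makeVarcharList($conn, $arrayEquipmentIds);
    // Bind the whole collection to the single placeholder.
    oci_bind_by_name($stmt, ':myIDs', $coll, -1, OCI_B_NTY);
    oci_execute($stmt);
    $rows = [];
    oci_fetch_all($stmt, $rows, 0, -1, OCI_FETCHSTATEMENT_BY_ROW + OCI_ASSOC);
    $coll->free();
    oci_free_statement($stmt);
    return $rows;
}
```

Called as selectInputIds($kpiprd_conn, array('12345CDE', '23456BCD', '34567ABC')), this should produce one row per input ID, with no user-supplied value appearing in the SQL text.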