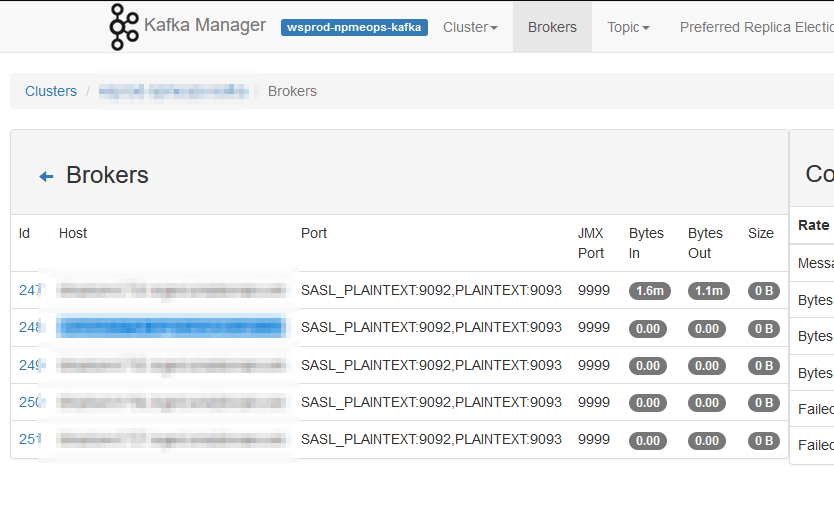

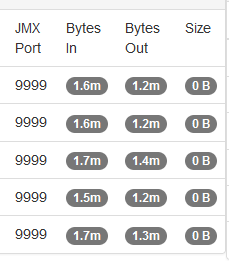

I created a few new Kafka topics for a project today — but, in testing, messages sent to the topic weren’t there. I normally echo some string into “kafka-console-producer.sh” to test messages. Evidently, STDERR wasn’t getting rendered back to my screen this way. I ran the producer script to get the “>” prompt and tried again — voila, a useful error:

[2022-10-31 15:36:23,471] ERROR Error when sending message to topic MyTopic with key: null, value: 4 bytes with error: (org.apache.kafka.clients.pro.internals.ErrorLoggingCallback)

org.apache.kafka.common.InvalidRecordException: Compacted topic cannot accept message without key in topic partition MyTopic-0.

Ohhh — that makes sense! They’ve got an existing process on a different Kafka server, and I just mirrored the configuration without researching what the configuration meant. They use “compact” as their cleanup policy — so messages don’t really age out of the topic. They age out when a newer message with that key gets posted. It’s a neat algorithm that I remember encountering when I first started reading the Kafka documentation … but it’s not something I had a reason to use. The other data we have transiting our Kafka cluster is time-series data where we want all of the info for trending. Having just the most recent, say, CPU utilization on my server isn’t terribly useful. But it makes sense — if I instruct the topic to clean up old data but retain the most recent message for each key … I need to be giving it a key!

Adding a parameter to parse the string into a key/value pair and provide the separator led to data being published to the clients:

echo “test:EchoTest” | /kafka/bin/kafka-console-producer.sh –bootstrap-server $(hostname):9092 –topic MyTopic –property “parse.key=true” –property “key.separator=:”