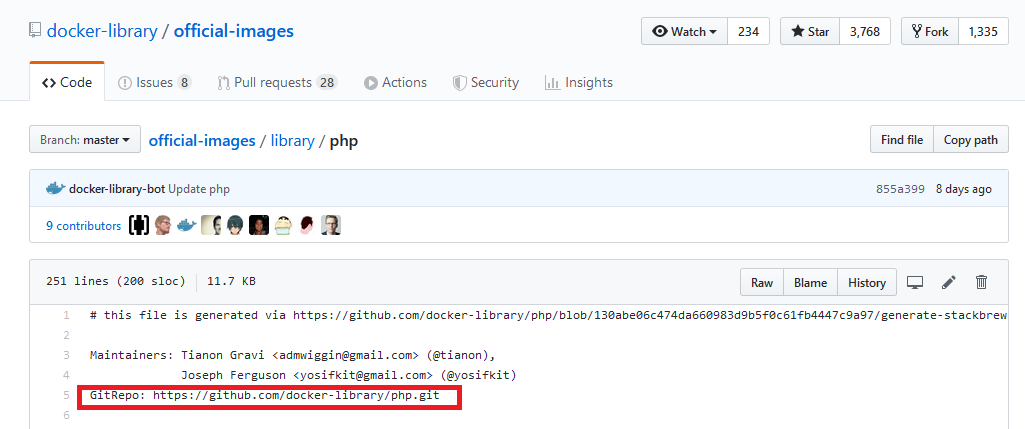

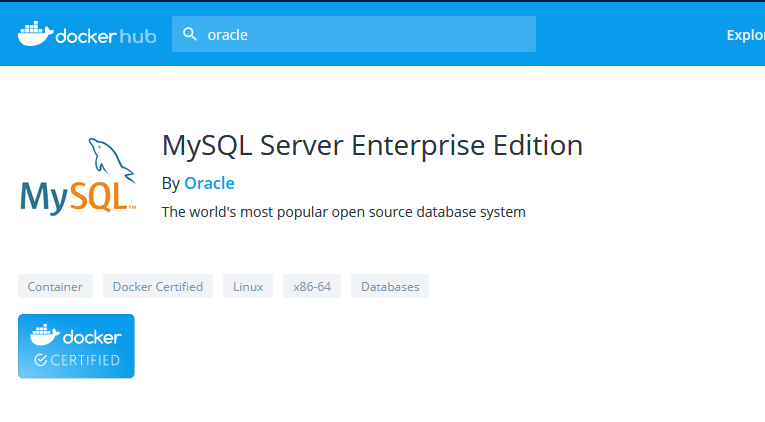

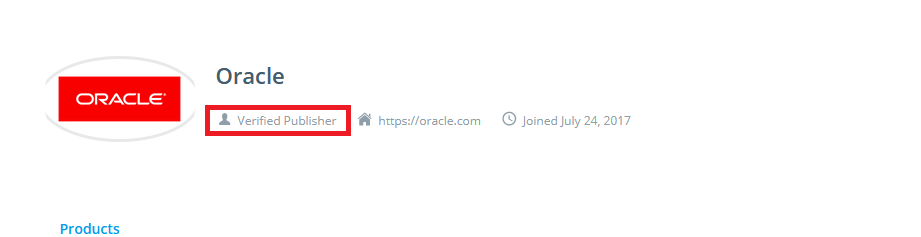

Docker maintains a registry of pre-built images that may be all you need. Put some thought into it, though – don’t just trust any image you find on the registry. When a pre-built image doesn’t meet your needs, you can make your own image based on any base image.

I create a new folder to hold the build instructions, additional configuration files, and notes about the build process. In that folder, create a file named “Dockerfile” which controls the image build.
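As a rough sketch of that layout, the commands below create a build folder holding an empty Dockerfile and a notes file. The folder name `sqlite3-image` and the file name `NOTES.md` are placeholders chosen for illustration, not names from the original build.

```shell
# Placeholder folder name; use whatever suits your project.
build_dir="sqlite3-image"
mkdir -p "$build_dir"

# "docker build" looks for a file named exactly "Dockerfile" by default.
touch "$build_dir/Dockerfile"

# Hypothetical notes file for recording what you tried during the build.
touch "$build_dir/NOTES.md"
```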

You’ll need to specify the base image for your build using the FROM directive. I have examples here for installing sqlite3 on both a CentOS and Ubuntu base image. Add LABELs indicating the purpose of the image and who maintains it. Use the “RUN” directives to provide instructions to modify the base image to your needs – install what you want, create folders or files, clean up anything you don’t want.

The ENTRYPOINT is what runs when the container starts – it can be an executable, as in this case, or a script file.

If you need to expose ports for inter-container communication, add an EXPOSE directive listing those ports. SQLite is self-contained, but a microservice environment may have “EXPOSE 21443” to allow other containers to communicate with it on port 21443. Note this is different from binding the container port to a host port – my Apache web server container has 443 bound, but that’s done in the “docker run” line where the container is created, not in the Dockerfile for the image.
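To make the distinction concrete, here is a minimal sketch of binding a port when the container is created. The helper name `run_published` and the container and image names in the usage comment are hypothetical; the `-p HOST_PORT:CONTAINER_PORT` option of `docker run` is what does the binding.

```shell
# Hypothetical helper: start a detached container with port 443 published.
#   $1 = container name, $2 = image name
run_published() {
  # -p HOST_PORT:CONTAINER_PORT binds the container port to the host.
  docker run -d --name "$1" -p 443:443 "$2"
}

# Example (made-up names): run_published webserver my/apache
```

Nothing in the Dockerfile changes for this; the binding belongs to the container, so the same image can run with different host ports.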

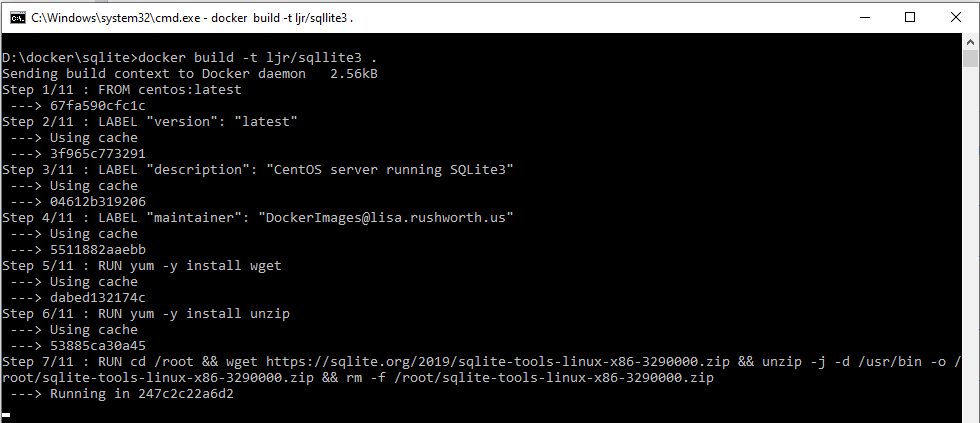

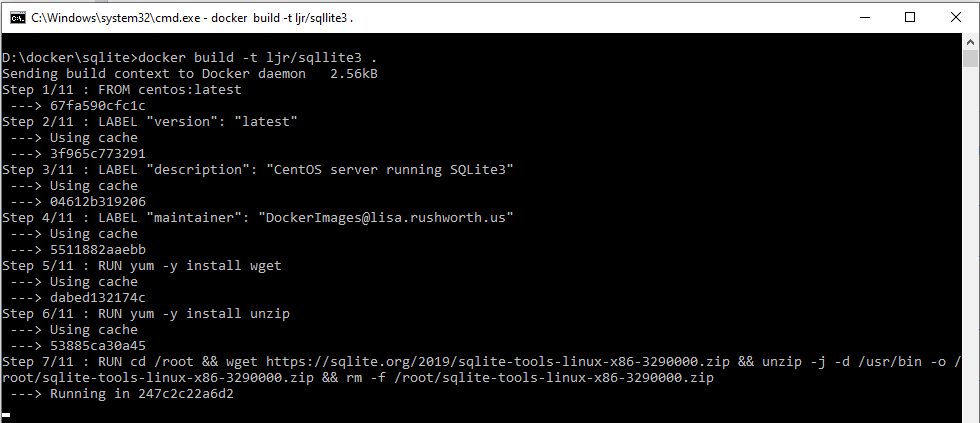

Example – SQLite3 on CentOS

FROM centos:latest

LABEL version="latest"

LABEL description="CentOS server running SQLite3"

LABEL maintainer="DockerImages@lisa.rushworth.us"

RUN yum -y install glibc.i686

RUN yum -y install zlib.i686

RUN yum -y install wget

RUN yum -y install unzip

RUN cd /root && wget https://sqlite.org/2019/sqlite-tools-linux-x86-3290000.zip && unzip -j -d /usr/bin -o /root/sqlite-tools-linux-x86-3290000.zip && rm -f /root/sqlite-tools-linux-x86-3290000.zip

RUN yum clean all && rm -rf /var/cache/yum /tmp/* /var/tmp/*

RUN mkdir -p /db

WORKDIR /db

ENTRYPOINT [ "sqlite3" ]

Example – SQLite3 on Ubuntu

FROM ubuntu:latest

LABEL version="latest"

LABEL description="Ubuntu server running SQLite3"

LABEL maintainer="DockerImages@lisa.rushworth.us"

RUN DEBIAN_FRONTEND=noninteractive apt-get -yq update

RUN DEBIAN_FRONTEND=noninteractive apt-get -yq install sqlite3

RUN rm -rf /var/lib/apt/lists/* /tmp/* /var/tmp/*

RUN mkdir -p /db

WORKDIR /db

ENTRYPOINT [ "sqlite3" ]

Save your Dockerfile and build your image using “docker build -t image/name .” (the trailing dot tells Docker to use the current folder as the build context).

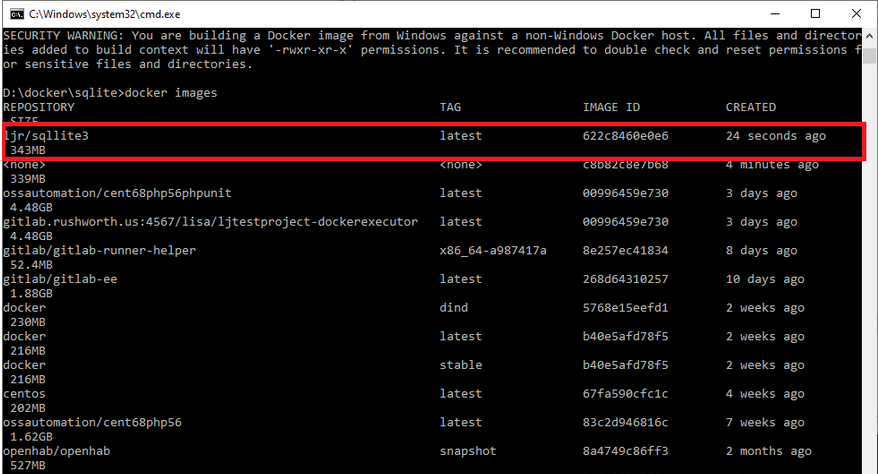

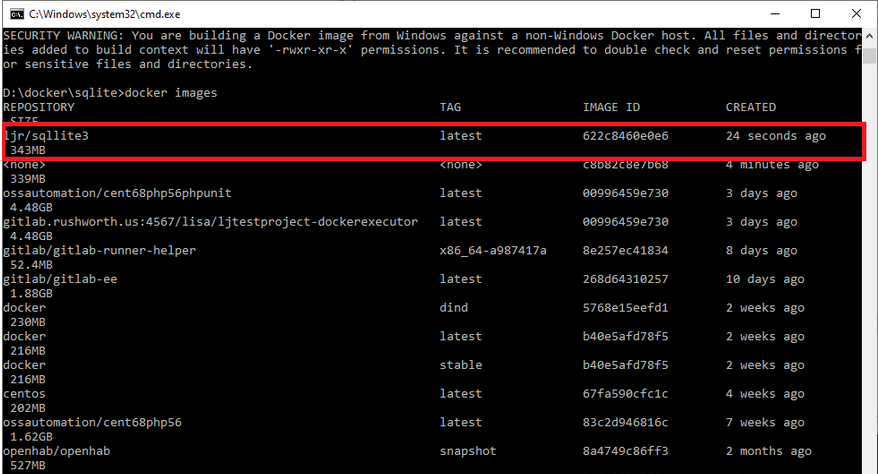

Use “docker images” to confirm the image has been successfully created.
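If you would rather check from a script than read the listing, something like the following could work. The function name `image_exists` is made up for this sketch; it relies on `docker images -q`, which prints only the IDs of matching images.

```shell
# Hypothetical helper: succeed if a local image matches the given name.
#   $1 = repository name, optionally with a tag (e.g. ljr/sqllite3)
image_exists() {
  # -q prints only image IDs; empty output means nothing matched.
  [ -n "$(docker images -q "$1")" ]
}

# Example: image_exists ljr/sqllite3 && echo "image is present"
```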

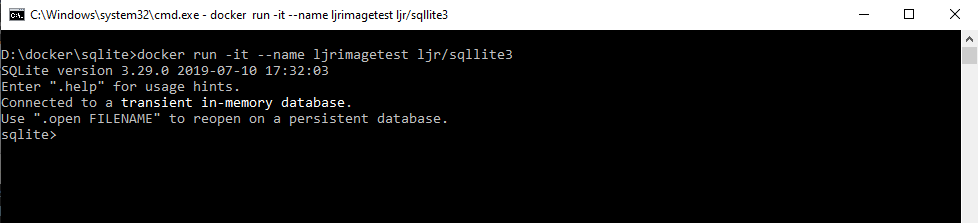

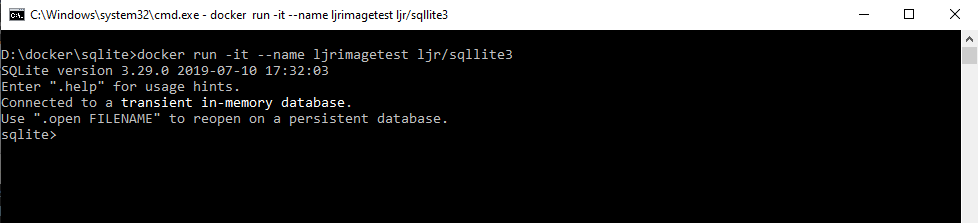

And start a container using your image:

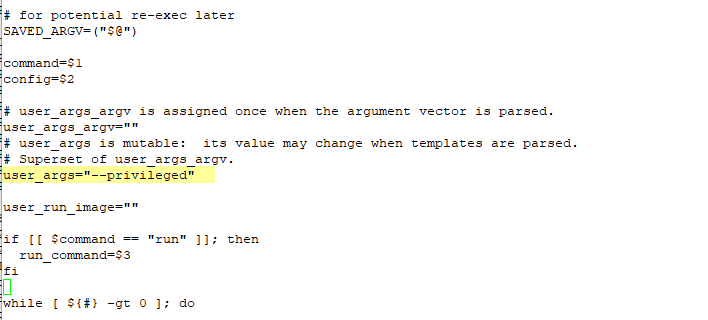

docker run -it --name ljrimagetest ljr/sqllite3

If you are having problems starting your container, change the entrypoint to something like “/bin/bash” – this will drop you to a command line inside the container instead of launching the application. From there, you can troubleshoot your launch problems. The CentOS sqlite install, as an example, required glibc and zlib components to run. Rather than trying something, rebuilding the image, launching a container, and looping until sqlite3 launched … I used bash as my entrypoint, installed packages until the application ran, and then modified the Dockerfile and rebuilt the image.
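One alternative to editing the Dockerfile for this: `docker run` accepts an `--entrypoint` option that overrides the image's ENTRYPOINT for a single container, so no rebuild is needed. A minimal sketch, with `debug_shell` as a hypothetical helper name and assuming the image contains /bin/bash:

```shell
# Hypothetical helper: open an interactive bash shell in a throwaway
# container instead of running the image's normal ENTRYPOINT.
#   $1 = image name
debug_shell() {
  # --rm removes the container on exit; --entrypoint replaces ENTRYPOINT.
  docker run -it --rm --entrypoint /bin/bash "$1"
}

# Example: debug_shell ljr/sqllite3
```

Because of `--rm`, anything installed inside that shell disappears when you exit, so the working steps still need to be copied back into the Dockerfile.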

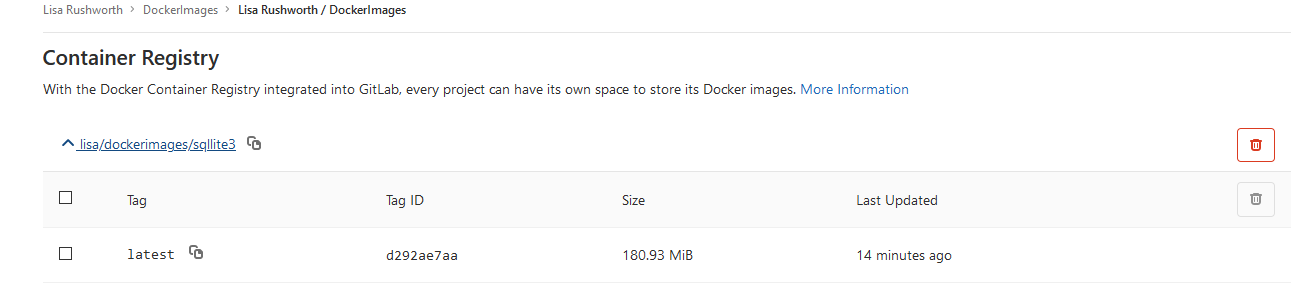

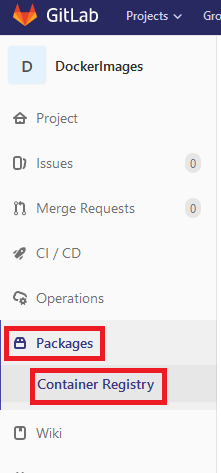

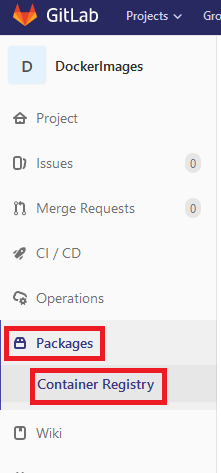

At this point, you can tag the image and upload it to a registry. I use my GitLab server to store Docker images. There is a DockerImages repository.

To tag the image, use:

docker tag ljr/sqllite3 gitlab.rushworth.us:4567/lisa/dockerimages/sqllite3:latest

And upload the image to your registry (you may need to authenticate first):

docker push gitlab.rushworth.us:4567/lisa/dockerimages/sqllite3:latest
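The authenticate, tag, and push steps can be strung together. The sketch below is one way to do that; `publish_image` is a hypothetical helper name, and it assumes the registry host is everything before the first slash of the remote image name.

```shell
# Hypothetical helper: log in, tag, and push in one go.
#   $1 = local image name, $2 = full remote name including registry host
publish_image() {
  local_image="$1"
  remote_image="$2"
  # Keep everything before the first "/" as the registry host (and port).
  registry="${remote_image%%/*}"

  # Stop at the first step that fails.
  docker login "$registry" &&
    docker tag "$local_image" "$remote_image" &&
    docker push "$remote_image"
}

# Example:
# publish_image ljr/sqllite3 gitlab.rushworth.us:4567/lisa/dockerimages/sqllite3:latest
```

`docker login` prompts for credentials unless they are already stored for that registry.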

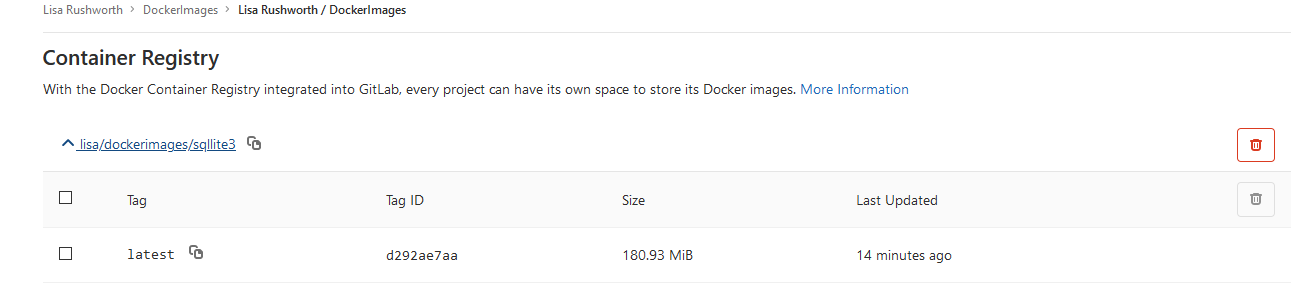

If you are using GitLab as your registry, navigate to the repository and select Packages => Container Registry.

Here’s my SQLite3 image.