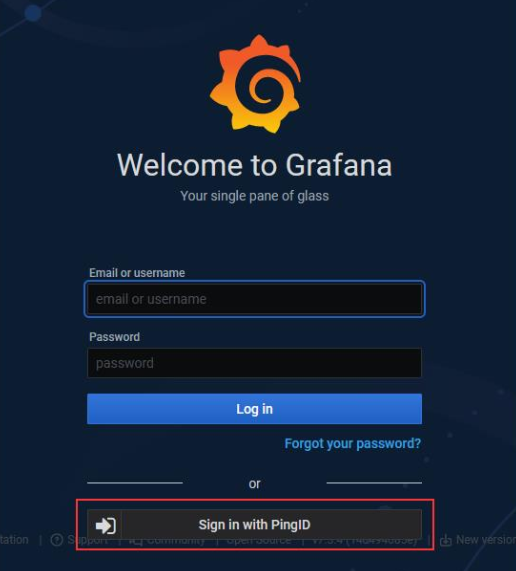

I enabled SSO in our development Grafana system today. The user experience with SSO enabled isn’t great, because there is a local ‘admin’ user with extra rights that aren’t granted to users placed in the admin role, so the local login form has to stay available. If you just enable SSO, a new button appears under the login form that users can click to start an SSO authentication. That’s not ideal, since most users really should be using the SSO workflow, and people are absolutely going to type their login information into that really obvious set of text input fields.

Grafana has a configuration setting to bypass the login form and always go straight to the OAuth authentication flow:

    # Set to true to attempt login with OAuth automatically, skipping the login screen.
    # This setting is ignored if multiple OAuth providers are configured.
    oauth_auto_login = true

Except now, on the rare occasion we need to use the local admin account, we have to set this to false, restart the service, do our thing, change the setting back, and restart the service again. Which is what we’ll do … but it’s not a great solution either.
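Since that flip-and-restart dance is easy to get wrong by hand, here is a minimal Python sketch of the edit step. The helper name `set_oauth_auto_login` is hypothetical, and the file path and service name mentioned in the comments are assumptions based on a typical package install, not something confirmed for this system.

```python
import re

# Matches the oauth_auto_login line, whether active or commented out with ';' or '#'.
_SETTING = re.compile(r"^([ \t]*)[;#]?[ \t]*oauth_auto_login[ \t]*=.*$", re.MULTILINE)


def set_oauth_auto_login(ini_text: str, enabled: bool) -> str:
    """Return ini_text with oauth_auto_login set to 'true' or 'false'.

    Hypothetical helper: it only rewrites the text. Writing the file back and
    restarting Grafana are left to the caller.
    """
    value = "true" if enabled else "false"
    new_text, count = _SETTING.subn(
        lambda m: f"{m.group(1)}oauth_auto_login = {value}", ini_text
    )
    if count == 0:
        raise ValueError("oauth_auto_login setting not found")
    return new_text


# Usage sketch (assumed path and service name, adjust for your install):
#   path = "/etc/grafana/grafana.ini"
#   text = open(path).read()
#   open(path, "w").write(set_oauth_auto_login(text, False))
#   then restart the service, e.g. `systemctl restart grafana-server`
```

Keeping the function a pure text transform means it can be tried against a copy of the config before touching the live file.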

Config to authenticate Grafana to PingID using OAuth:

    #################################### Generic OAuth ##########################
    [auth.generic_oauth]
    name = PingID
    enabled = true
    allow_sign_up = true
    client_id = 12345678-1234-4567-abcd-123456789abc
    client_secret = abcdeFgHijKLMnopqRstuvWxyZabcdeFgHijKLMnopqRstuvWxyZ
    scopes = openid profile email
    email_attribute_name = email:primary
    email_attribute_path =
    login_attribute_path = user
    role_attribute_path =
    id_token_attribute_name =
    auth_url = https://login.example.com/as/authorization.oauth2
    token_url = https://login.example.com/as/token.oauth2
    api_url = https://login.example.com/idp/userinfo.openid
    allowed_domains =
    team_ids =
    allowed_organizations =
    tls_skip_verify_insecure = true
    tls_client_cert =
    tls_client_key =
    tls_client_ca =
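Before restarting Grafana with a block like this, a quick sanity check can catch a blank or mistyped value. The following is a rough Python sketch using the standard `configparser` module. The function name `check_generic_oauth` and the list of keys treated as required are my own assumptions about what this setup relies on, not an official Grafana validation.

```python
import configparser

# Keys this particular setup depends on (an assumption, not Grafana's own rule set).
REQUIRED_KEYS = ("client_id", "client_secret", "auth_url", "token_url", "api_url")
URL_KEYS = ("auth_url", "token_url", "api_url")
SECTION = "auth.generic_oauth"


def check_generic_oauth(ini_text: str) -> list[str]:
    """Return a list of human-readable problems found in the OAuth section."""
    parser = configparser.ConfigParser(
        interpolation=None,           # secrets may contain '%'
        comment_prefixes=("#", ";"),  # both comment styles appear in grafana.ini
    )
    parser.read_string(ini_text)

    if not parser.has_section(SECTION):
        return [f"missing [{SECTION}] section"]

    problems = []
    if parser.get(SECTION, "enabled", fallback="false").strip().lower() != "true":
        problems.append("enabled is not true")
    for key in REQUIRED_KEYS:
        if not parser.get(SECTION, key, fallback="").strip():
            problems.append(f"{key} is empty")
    for key in URL_KEYS:
        value = parser.get(SECTION, key, fallback="").strip()
        if value and not value.startswith("https://"):
            problems.append(f"{key} is not an https URL")
    return problems
```

An empty list means none of these particular checks failed. It says nothing about whether PingID will actually accept the client ID and secret.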