Scott climbed one of the maple trees today, and we saw some fireflies crawling on the tree trunk. We saw the first lit-up fireflies of 2022 tonight: we played a game of kubb just after dark (with really bright flashlights!), and there were dozens of the little guys flying around.

Locking Adjustable Latches

We bought adjustable latches with a little extra metal bit where you can lock them. The problem, however, is that the range of adjustment is limited by the lock attachment point. Eventually, the screw bumps into the lock attachment and you cannot adjust the latch any smaller. If they had offset the lock attachment point, you could adjust the latch the full length of the threading.

Because we needed some smaller latches, we had to use a hacksaw to cut off some of the threads. Now they can be screwed down to their smallest size and our baby chickens are secured in their tractor.

Bee Inspection

A little after 1PM today, we inspected our beehive. Scott set appointments to remind us to inspect the bees on Sunday afternoon every week for the first few weeks. I filled the second frame feeder with a gallon of 1:1 sugar water. Figured it would be quicker and easier to swap feeders rather than try refilling the feeder outside.

We opened the hive — there aren’t as many bees on the top entrance board now that the hive has a larger entrance, but they did build some burr comb between the frames and the top board. Scott scraped off the burr comb, and Anya held onto it. There were a lot of bees across six of the frames. The bees have started working on the north-most two frames, so they were ready for the second deep hive body.

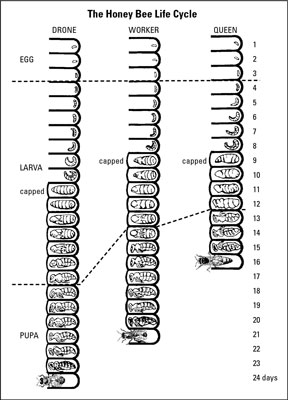

We removed one of the frames from the lower hive body. Looking at the frame, we saw both capped brood and larvae. That’s great, because it means the queen is laying! I found a cool picture of the bee lifecycle that made me think we wouldn’t see any capped brood yet (we released the queen 8 days ago … and “capped” shows up on day 9).

They are collecting pollen (we saw some pollen on the bottom board) and nectar (there’s already capped honey as well as cells with not-yet-finished honey). The frame was placed into the new deep to encourage the bees to move up into the new box. We removed the empty feeder, replaced it with the filled feeder, and placed one new frame into the lower hive body so it has eight frames plus the feeder.

We then put the new deep on top of the hive — it has the one frame we pulled from the hive plus nine clean, new frames. There’s a little gap between the two hive bodies that shrinks up when weight is put on the top. We put the top entrance board on the deep, set the lid in place, and placed a large cinder block on top of it all.

ELK Monitoring

We have a number of logstash servers gathering data from various filebeat sources. We’ve recently experienced a problem where the pipeline stops getting data for some, but not all, of those sources. Restarting a non-functional filebeat source gets data flowing again, but only for ten minutes or so. We were able to rectify the immediate problem by restarting our logstash services (IT troubleshooting step #1: we restarted all of the filebeats and, when that didn’t help, moved on to restarting the logstashes).

But we need to have a way to ensure this isn’t happening — losing days of log data from some sources is really bad. So I put together a Python script to verify there’s something coming in from each of the filebeat sources.

pip install elasticsearch==7.13.4

#!/usr/bin/env python3
# -*- coding: utf-8 -*-

# Disable warnings that not verifying SSL trust isn't a good idea
import requests
requests.packages.urllib3.disable_warnings()

from elasticsearch import Elasticsearch
import time

# Modules for email alerting
import smtplib
from email.mime.multipart import MIMEMultipart
from email.mime.text import MIMEText

# Config variables
strSenderAddress = "devnull@example.com"
strRecipientAddress = "me@example.com"
strSMTPHostname = "mail.example.com"
iSMTPPort = 25

listSplunkRelayHosts = ['host293', 'host590', 'host591', 'host022', 'host014', 'host135']
iAgeThreshold = 3600  # Alert if last document is more than an hour old (3600 seconds)

listAlertRows = []

# One client can be reused for every host's query
elastic_client = Elasticsearch("https://elasticsearchhost.example.com:9200", http_auth=('rouser','r0pAs5w0rD'), verify_certs=False)

for strRelayHost in listSplunkRelayHosts:
    iCurrentUnixTimestamp = time.time()

    # Newest document from this host that did not come from /var/log/messages
    query_body = {
        "sort": {
            "@timestamp": {
                "order": "desc"
            }
        },
        "query": {
            "bool": {
                "must": {
                    "term": {
                        "host.hostname": strRelayHost
                    }
                },
                "must_not": {
                    "term": {
                        "source": "/var/log/messages"
                    }
                }
            }
        }
    }

    result = elastic_client.search(index="network_syslog*", body=query_body, size=1)
    all_hits = result['hits']['hits']

    iDocumentAge = None
    for doc in all_hits:
        # The sort value is @timestamp in epoch milliseconds; convert the difference to seconds
        iDocumentAge = ((iCurrentUnixTimestamp * 1000) - doc.get('sort')[0]) / 1000.0

    if iDocumentAge is None:
        # A host with no documents at all needs an alert too
        listAlertRows.append(f"<tr><td>{strRelayHost}</td><td>no record found</td></tr>")
        print(f"PROBLEM - For {strRelayHost}, no recent record found")
    elif iDocumentAge > iAgeThreshold:
        listAlertRows.append(f"<tr><td>{strRelayHost}</td><td>{iDocumentAge}</td></tr>")
        print(f"PROBLEM - For {strRelayHost}, document age is {iDocumentAge} second(s)")
    else:
        print(f"GOOD - For {strRelayHost}, document age is {iDocumentAge} second(s)")

# Send one email listing every problem host
if listAlertRows:
    strAlert = "\n".join(listAlertRows)

    msg = MIMEMultipart('alternative')
    msg['Subject'] = "ELK Filebeat Alert"
    msg['From'] = strSenderAddress
    msg['To'] = strRecipientAddress

    strHTMLMessage = f"<html><body><table><tr><th>Server</th><th>Document Age</th></tr>{strAlert}</table></body></html>"
    strTextMessage = strAlert

    part1 = MIMEText(strTextMessage, 'plain')
    part2 = MIMEText(strHTMLMessage, 'html')
    msg.attach(part1)
    msg.attach(part2)

    # Connect on the configured port rather than relying on the default
    s = smtplib.SMTP(strSMTPHostname, iSMTPPort)
    s.sendmail(strSenderAddress, strRecipientAddress, msg.as_string())
    s.quit()
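
The freshness check in the script above is easier to test if it is pulled out into a pure function that never touches Elasticsearch or the mail server. This is only a sketch: the names document_age_seconds and classify_host are hypothetical, and it assumes each hit carries its @timestamp sort value in epoch milliseconds, the same way the script reads it.

```python
import time


def document_age_seconds(sort_value_ms, now_seconds=None):
    """Age in seconds of a document whose sort value is epoch milliseconds."""
    if now_seconds is None:
        now_seconds = time.time()
    return now_seconds - (sort_value_ms / 1000.0)


def classify_host(hits, threshold_seconds=3600, now_seconds=None):
    """Classify one host's search hits as MISSING, STALE, or GOOD.

    hits is the list found at result['hits']['hits'] for a size=1 query
    sorted newest first, so only the first entry is inspected.
    """
    if not hits:
        return ("MISSING", None)
    age = document_age_seconds(hits[0]["sort"][0], now_seconds)
    if age > threshold_seconds:
        return ("STALE", age)
    return ("GOOD", age)
```

Passing now_seconds explicitly keeps the function deterministic, so the threshold logic can be checked with canned hits before pointing the script at a real cluster.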

Debugging Filebeat

# Run filebeat from the command line and add debugging flags to increase verbosity of output

# -e directs output to STDERR instead of syslog

# -c indicates the config file to use

# -d indicates which debugging items you want -- * for all

/opt/filebeat/filebeat -e -c /opt/filebeat/filebeat.yml -d "*"

Python Logging to Logstash Server

Since we are having a problem with some of our filebeat servers actually delivering data over to logstash, I put together a really quick python script that connects to the logstash server and sends a log record. I can then run tcpdump on the logstash server and hopefully see what is going wrong.

import logging
import logstash

strHost = 'logstash.example.com'
iPort = 5048

# Attach a TCP handler so every record logged here is sent to the logstash server
test_logger = logging.getLogger('python-logstash-logger')
test_logger.setLevel(logging.INFO)
test_logger.addHandler(logstash.TCPLogstashHandler(host=strHost,port=iPort))

# Send the same sample syslog line at three different levels
test_logger.info('May 22 23:34:13 ABCDOHEFG66SC03 sipd[3863cc60] CRITICAL One or more Dns Servers are currently unreachable!')
test_logger.warning('May 22 23:34:13 ABCDOHEFG66SC03 sipd[3863cc60] CRITICAL One or more Dns Servers are currently unreachable!')
test_logger.error('May 22 23:34:13 ABCDOHEFG66SC03 sipd[3863cc60] CRITICAL One or more Dns Servers are currently unreachable!')
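
Before sending test records, it can help to confirm that the sending host can open a TCP connection to the logstash port at all. The sketch below uses only the standard library; the function name can_connect is made up for illustration, and a successful connection only shows the port is reachable, not that the pipeline is processing events.

```python
import socket


def can_connect(host, port, timeout=5.0):
    """Try to open a TCP connection; return (True, None) or (False, error text)."""
    try:
        # create_connection resolves the name and connects, raising OSError on failure
        with socket.create_connection((host, port), timeout=timeout):
            return (True, None)
    except OSError as err:
        return (False, str(err))
```

Calling can_connect('logstash.example.com', 5048) from a misbehaving filebeat host should separate a firewall or routing problem (refused or timed out) from a problem further along in the pipeline.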

Using tcpdump to capture traffic

I like tshark (command line wireshark), but some of our servers don’t have it installed and won’t have it installed. So I’m re-learning tcpdump!

List data from a specific source IP

tcpdump src 10.1.2.3

List data sent to a specific port

tcpdump dst port 5048

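List data sent to or from an entire subnet (use src net instead of net to match only traffic sent from the subnet)

tcpdump net 10.1.2.0/26

And add -A to print each packet’s contents as ASCII, or -X to print them as hex and ASCII.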
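
These primitives can be joined with "and" to narrow a capture, for example to traffic from one filebeat host to the logstash port. The helper below is a hypothetical sketch that only builds the argument list; it uses the standard tcpdump options -n (no name resolution), -A (ASCII output), and -c (stop after a number of packets).

```python
def build_tcpdump_command(src_host=None, dst_port=None, ascii_output=False, packet_count=None):
    """Build a tcpdump argument list from a few optional filters."""
    args = ["tcpdump", "-n"]  # -n: show addresses and ports without resolving names
    if ascii_output:
        args.append("-A")  # -A: print each packet in ASCII
    if packet_count is not None:
        args += ["-c", str(packet_count)]  # -c: exit after capturing this many packets

    filters = []
    if src_host is not None:
        filters.append(f"src host {src_host}")
    if dst_port is not None:
        filters.append(f"dst port {dst_port}")
    if filters:
        # tcpdump accepts the whole filter expression as a single argument
        args.append(" and ".join(filters))
    return args
```

The returned list can be handed to subprocess.run (as root, since capturing packets normally needs elevated privileges) or simply printed and pasted into a shell.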

Bee Check-in

We checked on our bees this afternoon. It was a nice, hot day (low 80s!). I had been seeing frequent bee traffic jams, so we turned the entrance reducer to give them more space to come and go. We removed the queen cage and some burr comb the bees had built up between the two frames where the queen cage was placed. I’d given them a gallon of sugar water when we installed the package, and there’s a bit left, but they’ve consumed a lot of the syrup. We’ll have to refill their food when it warms up again next week.

Bees, again!

I’d ordered a package of bees this year (we’ve got frames from last year that will give them a good head start), but the post office seemed to have lost them. They left Kentucky over the weekend and went into “umm … the package is on its way to the destination. Check back later” status. But, at about 6:30 this morning, the post office rang me up to let me know they had bees for me. I picked up the package and set them in the butler pantry (a nice, dark, quiet place!) and we put the bees in their hive at about 3PM.

PostgreSQL Logical Replication – Row Filter

Researching something else about logical replication, I came across a commit message about row filtering on logical replication. From the date of the commit, I expect this will be included in PostgreSQL 15.

Adding a WHERE clause after the table name limits the rows that are included in the publication — you could publish employees in Vermont or only completed transactions.
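
As a rough illustration, the statement looks like CREATE PUBLICATION name FOR TABLE table WHERE (expression), with the filter expression in parentheses. The Python helper below just assembles that string; the function name, publication name, table, and column are all made up, and it does no quoting, so it is only suitable for trusted, hand-written values.

```python
def create_publication_sql(publication, table, row_filter=None):
    """Assemble a CREATE PUBLICATION statement, optionally with a row filter.

    Illustration only: identifiers and the filter are inserted as-is,
    so never pass untrusted input to this function.
    """
    sql = f"CREATE PUBLICATION {publication} FOR TABLE {table}"
    if row_filter:
        # The row filter expression must be wrapped in parentheses
        sql += f" WHERE ({row_filter})"
    return sql + ";"


# Hypothetical example: publish only the employees located in Vermont
print(create_publication_sql("vt_employees", "employees", "state = 'VT'"))
```

Rows that do not satisfy the expression are simply not sent to subscribers of that publication.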