I’m using IPv6 on a server. The server wasn’t using NetworkManager, so I’ve configured it directly in the network script file.

After restarting the network (systemctl restart network), I was able to ping other IPv6-addressed equipment.

We no longer have 22 tiny birds in the house! Stream (the new duckling) spent Monday night outside in the duck coop. We’ve been letting the little one out into the duck yard to hang out and get used to each other, but bringing it into the house at night. I got up at 6-something on Tuesday morning to make sure the little guy was OK and the ducks are now hanging out together in the yard. It was cold — mid 50’s — when I let them out of the coop this morning, and they all were napping next to the pond in the sunshine. The little one was sleeping right next to one of the big ducks.

Then, in the evening, we put the turkeys into the pasture with the big turkeys and chickens. They’ve been hanging out in the baby tractor next to the pasture, so everyone has had a chance to get used to each other. The little turkeys can fly really well — on Monday, Anya got sidetracked bringing the turkeys into the house. She left two thirds of the turkeys in the baby tractor with the zipper open! I noticed the chickens and turkeys were not in the coop, and I walked over to let them in. All of a sudden, this dark shadow comes flying at me … literally, it was a baby turkey who flew some twenty feet and cleared my shoulder. Since they were able to fly themselves out of the pasture, we trimmed feathers on their left wings. Now they look a little asymmetrical, but they mostly stay inside the pasture. Unfortunately, the mesh isn’t small enough and they can pop themselves out of the fencing.

The owner of the turkey farm where we picked up four turkeys last year said the male turkeys take turns sitting on the nest and taking care of the baby guys. I totally believe that after seeing how the big turkeys are with the little ones. They puff up and circle around the gaggle of baby turkeys. When the babies split out into multiple groups, the two big turkeys kind of round them up into two groups and each watches over his group. Anya says they even tuck the little ones around them to sleep.

Character filters are the first component of an analyzer. They can remove unwanted characters, such as HTML tags ("char_filter": ["html_strip"]) or some custom replacement, or change characters into other characters. Output from the character filter is passed to the tokenizer.
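To make the character filter stage concrete, here is a minimal pure-Python sketch of the idea. It is not how Elasticsearch implements html_strip or mapping filters; the function names `html_strip` and `apply_mappings` and the sample string are made up for illustration.

```python
import html
import re


def html_strip(text):
    """Rough stand-in for an html_strip-style character filter."""
    # Drop anything that looks like a tag, then decode entities such as &amp;
    without_tags = re.sub(r"<[^>]+>", "", text)
    return html.unescape(without_tags)


def apply_mappings(text, mappings):
    """Replace each key with its value, trying the longest keys first."""
    for source in sorted(mappings, key=len, reverse=True):
        text = text.replace(source, mappings[source])
    return text


raw = "<p>Tom &amp; Jerry :)</p>"
filtered = apply_mappings(html_strip(raw), {":)": "_happy_"})
print(filtered)  # Tom & Jerry _happy_
```

The string that comes out of this stage is what the tokenizer would receive.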

The tokenizer breaks the string out into individual components (tokens). A commonly used tokenizer is the whitespace tokenizer which uses whitespace characters as the token delimiter. For CSV data, you could build a custom pattern tokenizer with “,” as the delimiter.
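As a rough illustration of the two tokenizers mentioned above, the following Python sketch splits on whitespace and on a custom pattern. The helper names are hypothetical, and dropping empty tokens is a choice made for this sketch rather than a statement about Elasticsearch internals.

```python
import re


def whitespace_tokenize(text):
    """Split on runs of whitespace, like a whitespace tokenizer would."""
    return text.split()


def pattern_tokenize(text, pattern=","):
    """Split on a regular expression; empty tokens are discarded."""
    return [token for token in re.split(pattern, text) if token]


print(whitespace_tokenize("The quick  brown fox"))  # ['The', 'quick', 'brown', 'fox']
print(pattern_tokenize("alpha,beta,,gamma"))        # ['alpha', 'beta', 'gamma']
```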

Then token filters remove anything deemed unnecessary. The standard analyzer also applies a lowercase token filter, so NOW, Now, and now all produce the same token.
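A small Python sketch of the token filter stage, assuming a lowercase filter followed by a stop-word filter. The `STOP_WORDS` set and the function names are hypothetical and only there to show the shape of the step.

```python
STOP_WORDS = {"the", "over"}  # hypothetical stop list for this sketch


def lowercase_filter(tokens):
    """Lower-case every token so NOW, Now, and now collapse together."""
    return [token.lower() for token in tokens]


def stop_filter(tokens, stop_words=STOP_WORDS):
    """Drop tokens that appear in the stop list."""
    return [token for token in tokens if token not in stop_words]


tokens = ["NOW", "Now", "now", "The", "fox"]
lowered = lowercase_filter(tokens)
print(lowered)               # ['now', 'now', 'now', 'the', 'fox']
print(stop_filter(lowered))  # ['now', 'now', 'now', 'fox']
```

Order matters here: lower-casing first means the stop list only needs lower-case entries.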

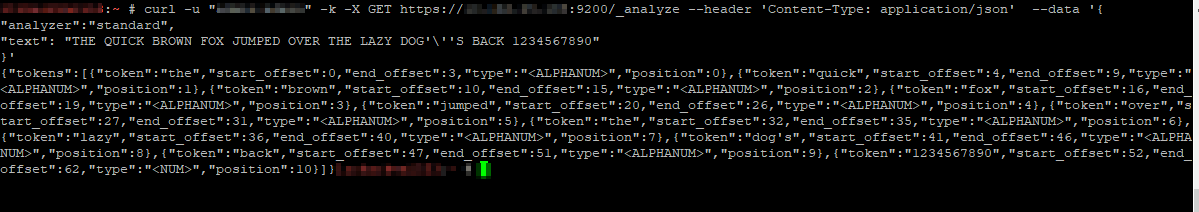

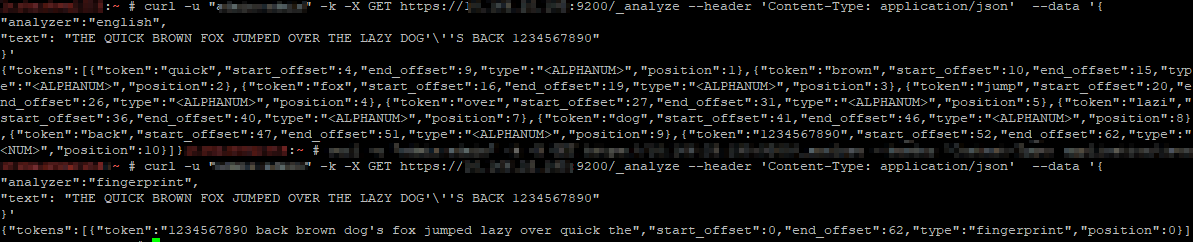

You can one-off analyze a string using any of the analyzers through the /_analyze endpoint:

curl -u "admin:admin" -k -X GET https://localhost:9200/_analyze --header 'Content-Type: application/json' --data '

{

"analyzer":"standard",

"text": "THE QUICK BROWN FOX JUMPED OVER THE LAZY DOG'\''S BACK 1234567890"

}'

Specifying different analyzers produces different tokens.

It’s even possible to define a custom analyzer in an index; you’ll see this in the index configuration. Adding character mappings to a custom filter might be a useful tool in our implementation. The example used in Elastic’s documentation maps Hindu-Arabic numerals to their Arabic-Latin equivalents. Another of the examples turns ASCII emoticons into emotional descriptors (_happy_, _sad_, _crying_, _raspberry_, etc.), which would be useful in analyzing customer communications. In log processing, we might want to map phrases into commonly used abbreviations. This is not a real-world example, but if programmatic input spelled out “self-contained breathing apparatus”, I expect most people would still search for SCBA if they wanted to see how frequently SCBA tanks were used for call-outs. It will be interesting to see how often programmatic input fails to line up with user expectations, and whether character mappings would help.
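For reference, index settings with a mapping character filter might look something like the sketch below, built as a Python dictionary and printed as JSON. The names `emoticons_and_abbreviations` and `customer_text` are hypothetical, and the mapping entries are illustrative rather than taken from a real deployment.

```python
import json

# Hypothetical index settings: one mapping char filter plus a custom analyzer using it
index_settings = {
    "settings": {
        "analysis": {
            "char_filter": {
                "emoticons_and_abbreviations": {
                    "type": "mapping",
                    "mappings": [
                        ":) => _happy_",
                        ":( => _sad_",
                        "self-contained breathing apparatus => SCBA",
                    ],
                }
            },
            "analyzer": {
                "customer_text": {
                    "type": "custom",
                    "char_filter": ["emoticons_and_abbreviations"],
                    "tokenizer": "standard",
                    "filter": ["lowercase"],
                }
            },
        }
    }
}

# This JSON would be the body sent when creating the index
print(json.dumps(index_settings, indent=2))
```

Because character filters run before the lowercase token filter, the mapping keys match the incoming text exactly as written, so differently capitalized input would need its own entries.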

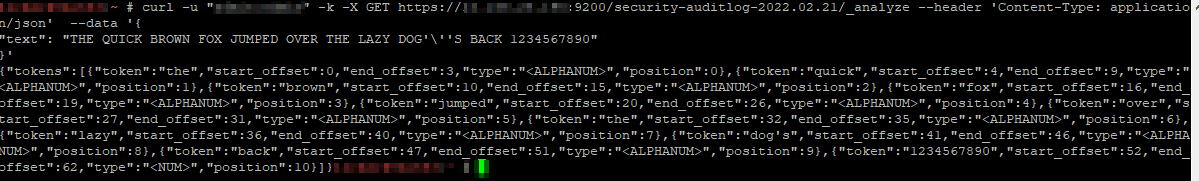

In addition to testing individual analyzers, you can test the analyzer associated with an index: instead of using the /_analyze endpoint, use the /indexname/_analyze endpoint.
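The difference between the two endpoints comes down to the URL path. A small Python sketch, assuming a hypothetical helper named `build_analyze_request` that only builds the URL and JSON body and does not send anything:

```python
import json
from urllib.parse import quote


def build_analyze_request(base_url, text, analyzer=None, index=None):
    """Return (url, json_body) for a cluster-level or index-level _analyze call."""
    # With an index name, the request goes to /<index>/_analyze instead of /_analyze
    path = f"/{quote(index, safe='')}/_analyze" if index else "/_analyze"
    body = {"text": text}
    if analyzer:
        body["analyzer"] = analyzer
    return base_url.rstrip("/") + path, json.dumps(body)


url, body = build_analyze_request("https://localhost:9200", "Quick FOX", index="indexname")
print(url)   # https://localhost:9200/indexname/_analyze
print(body)  # {"text": "Quick FOX"}
```

Leaving out the analyzer on the index-level call lets the index's own default apply; passing a name would select one of the analyzers defined in that index.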

Since the leaked draft overturning Roe v Wade was released, I’ve encountered a number of forums in which women are advocating we all delete any menstruation tracking apps. This seems, to me, like performance art meant as protest, not an effective solution to the stated problem.

I get the point — people don’t want their data tracked in a place where the state can readily compel production of records. If they have reasonable suspicion that an abortion took place, they can get a warrant for your data. But deleting the app from your phone — that doesn’t actually delete the data on the cloud hosting provider’s side. Deleting the app has the same impact as ceasing to enter new data. Except you’ve inconvenienced yourself by losing access to your old data. Check if an account can be deleted — and learn what the details of ‘delete’ actually mean. In many cases, ‘delete’ means disable and then purge after some delta time elapses. What about backups? For how long would the company be able to produce data if they really needed to?

But before going to extremes to actually delete data, consider whether the alternatives are actually any “safer” by your definition. What if I were tracking my period on a little paper calendar in my purse, or on one pinned to the cork board in the rec room? They may get a warrant and seize my paper calendar too. And, really, you could continue to enter “periods” even if they’re not happening. There’s usually a field for ‘notes’ and you could put something in like ‘really painful cramping’ or ‘so many hot flashes’ whenever you actually mean “yeah, this one didn’t happen”, which would make the data the government is able to gather rather meaningless.

I made my own SCOBY without using kombucha — diced up some old apples and coated them in sugar. It worked! I just started my first batch of kombucha using tea and maple syrup.

There are a few ways to reset the password on an individual account … but they require you to have a known password. But what about when you don’t have any good passwords? (You might be able to read your kibana.yml and get a known good password, so that would be a good place to check). Provided you have OS access, just create another superuser account using the elasticsearch-users binary:

/usr/share/elasticsearch/bin/elasticsearch-users useradd ljradmin -p S0m3pA5sw0Rd -r superuser

You can then use curl against the Elasticsearch API to reset the elastic account password:

curl -s --user ljradmin:S0m3pA5sw0Rd -XPUT "http://127.0.0.1:9200/_xpack/security/user/elastic/_password" -H 'Content-Type: application/json' -d'

{

"password" : "N3wPa5sw0Rd4ElasticU53r"

}

'
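If you would rather script the reset than type the curl command, the same request can be assembled in Python. This is only a sketch: `build_password_reset` is a hypothetical helper, it reuses the endpoint and example credentials from the curl command above, and it stops short of sending the request.

```python
import base64
import json
import urllib.request


def build_password_reset(base_url, admin_user, admin_password, target_user, new_password):
    """Build (but do not send) the PUT request that changes a user's password."""
    credentials = f"{admin_user}:{admin_password}".encode("utf-8")
    headers = {
        "Authorization": "Basic " + base64.b64encode(credentials).decode("ascii"),
        "Content-Type": "application/json",
    }
    url = f"{base_url}/_xpack/security/user/{target_user}/_password"
    payload = json.dumps({"password": new_password}).encode("utf-8")
    return urllib.request.Request(url, data=payload, headers=headers, method="PUT")


request = build_password_reset(
    "http://127.0.0.1:9200", "ljradmin", "S0m3pA5sw0Rd", "elastic", "N3wPa5sw0Rd4ElasticU53r"
)
print(request.get_method(), request.full_url)
# Passing the request to urllib.request.urlopen() would perform the actual call.
```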