Issue: The multi-step process of retrieving credentials from CyberArk introduces noticeable latency in web tools that use multiple passwords. The cost is incurred on every execution cycle (scheduled task or user access).

Proposal: We will use a Redis server to cache credentials retrieved from CyberArk. This will allow quick access to frequently used passwords and reduce latency when multiple users access a tool.
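The proposal amounts to a cache-aside lookup: check Redis first, fall back to CyberArk on a miss, and store the result with an expiry. Below is a minimal sketch in Python, assuming the tools can call Python (the proposal doesn't name a language). `InMemoryCache` is a stand-in that mimics redis-py's `get`/`setex` so the example runs without a server, `fetch_from_cyberark` is a hypothetical callable, and the one-hour TTL is only a placeholder until the retention question is settled. Values are stored unencrypted here for brevity; the proposal encrypts them with libsodium before they reach Redis.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class InMemoryCache:
    """Stand-in for a Redis client; mirrors redis-py's get(name) / setex(name, time, value)."""

    def __init__(self) -> None:
        self._data: Dict[str, Tuple[bytes, float]] = {}

    def get(self, name: str) -> Optional[bytes]:
        entry = self._data.get(name)
        if entry is None:
            return None
        value, expires_at = entry
        if time.monotonic() >= expires_at:
            del self._data[name]  # drop expired entries lazily, on access
            return None
        return value

    def setex(self, name: str, time_s: int, value: bytes) -> None:
        self._data[name] = (value, time.monotonic() + time_s)


def cache_key(user: str, target: str, service: str) -> str:
    # Key scheme from the config comments: "user:target:service"
    return f"{user}:{target}:{service}"


def get_password(cache, fetch_from_cyberark: Callable[[str, str, str], str],
                 user: str, target: str, service: str,
                 ttl_seconds: int = 3600) -> str:
    """Return a password, hitting CyberArk only on a cache miss (placeholder TTL)."""
    key = cache_key(user, target, service)
    cached = cache.get(key)
    if cached is not None:
        return cached.decode("utf-8")  # cache hit: no CyberArk round trip
    password = fetch_from_cyberark(user, target, service)  # cache miss: slow path
    cache.setex(key, ttl_seconds, password.encode("utf-8"))
    return password
```

With redis-py, a `redis.Redis(...)` client (with the default `decode_responses=False`, so `get` returns bytes) could be passed as `cache` instead, because its `get(name)` and `setex(name, time, value)` have the same shape.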

Details:

A Redis server will be installed on both the production and development web servers. It will be bound to localhost, and communication with it will be encrypted with TLS using the same SSL certificate as the web server.
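One practical wrinkle with reusing the web server's certificate: a client that connects to `127.0.0.1` still has to verify the certificate against the hostname it was issued for, which presumably is the web server's name rather than the loopback address. A sketch using only Python's standard `ssl` and `socket` modules; the function names, the `cert_hostname` value, and any CA path are hypothetical, and port 6380 is the `tls-port` from the config.

```python
import socket
import ssl
from typing import Optional


def redis_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Client-side TLS settings for the local Redis TLS port (a sketch)."""
    # create_default_context() turns on certificate and hostname verification.
    ctx = ssl.create_default_context(cafile=ca_file)
    # Mirror the server's tls-protocols "TLSv1.2 TLSv1.3".
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def ping_local_redis(cert_hostname: str, port: int = 6380,
                     ca_file: Optional[str] = None) -> bytes:
    ctx = redis_tls_context(ca_file)
    # Connect to the loopback address, but check the certificate against the
    # name it was issued for (e.g. the web server's hostname), not "127.0.0.1".
    with socket.create_connection(("127.0.0.1", port), timeout=5) as raw:
        with ctx.wrap_socket(raw, server_hostname=cert_hostname) as tls:
            tls.sendall(b"*1\r\n$4\r\nPING\r\n")  # RESP encoding of PING
            return tls.recv(64)  # a healthy server answers b"+PONG\r\n"
```

For example, `ping_local_redis("webserver.example.com")` (a hypothetical hostname) would fail with a certificate verification error if the name does not match the certificate, which is a quick way to confirm the client side is set up correctly.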

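Data stored in Redis will be encrypted using libsodium. The key will be stored in a file on the application server. The nonce should not be stored there for reuse: libsodium requires that a nonce never be reused with the same key, so a fresh random nonce should be generated for each value and stored alongside that value's ciphertext.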

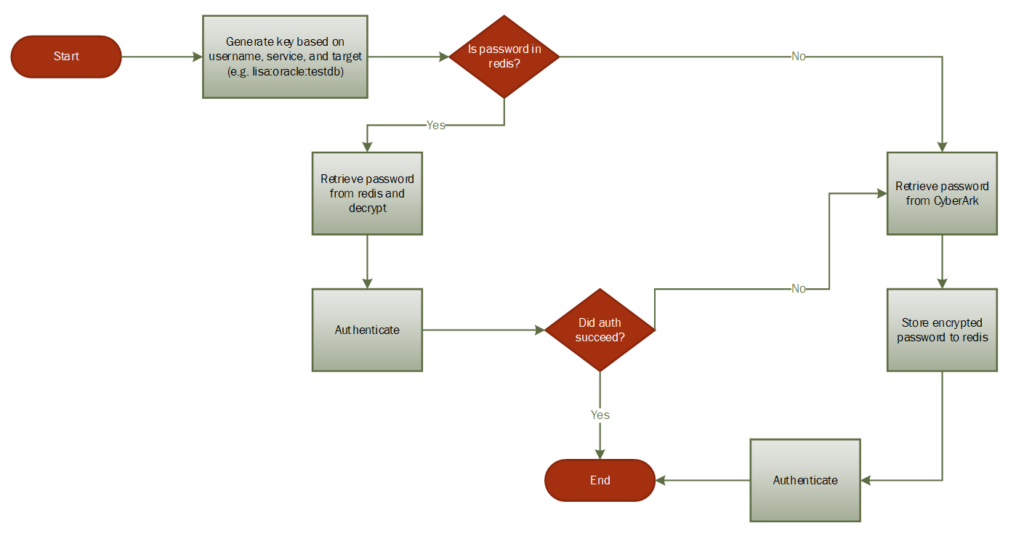

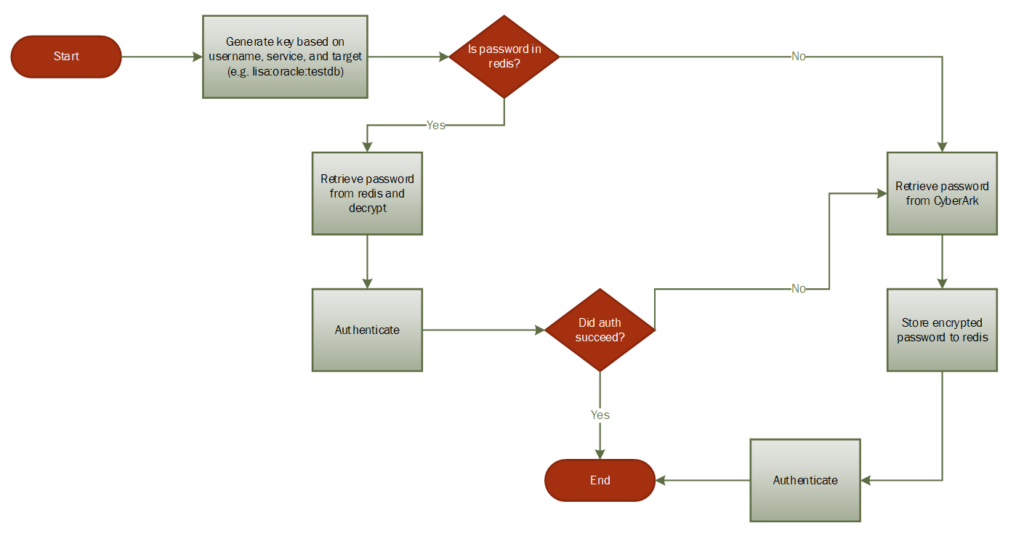

All password retrievals will follow this basic process:

Outstanding questions:

- Using a namespace in the key (e.g. `user:target:service`) increases the storage requirement. We could instead allocate individual ‘databases’ to specific services, e.g. database 1 for all Oracle passwords, database 2 for all FTP passwords, and database 3 for all web service passwords. This would shorten the key strings.

- Data retention. How long should cached data live? There’s a memory limit, and I elected to use a least-frequently-used (LFU) policy to prune data if that limit is reached. That means a record that was used once, an hour ago, may well be evicted before a frequently used credential that has been on the server for a few hours. Redis has no true FIFO policy, but `volatile-ttl` (evict the keys closest to expiry) behaves much like FIFO when every key gets the same TTL. I think we will have a handful of very frequently used credentials that we want to keep around as much as possible, which favors LFU. Two options:
  - Basically infinite retention with a low memory allocation: significantly limit the memory that can be used to store credentials and set a long expiry (a week? weeks?) on cached data.
  - A shorter expiry: a day? A few hours?

  The biggest drawback I see with a long expiry period is that we retain bad data for some time after a password is changed. I conceptualized a process that handles an authentication failure by getting the password directly from CyberArk and updating the Redis cache, which minimizes the risk of keeping cached data for a long time.

- How do we want to encrypt/decrypt stashed data? I used libsodium because I’ve used it before (and it’s simple). Does anyone have a favorite method?

- Does anyone have an opinion on SSL/TLS session caching? (`tls-session-caching` is set to `no` in the config below.)
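The authentication-failure handling described under data retention could look roughly like this: use the cached password, and if the target rejects it, assume the cache is stale, refetch from CyberArk, update the cache, and retry once. A plain `dict` stands in for Redis, and `AuthenticationFailed` and `with_credential` are hypothetical names, not part of any existing library.

```python
from typing import Callable, Dict, TypeVar

T = TypeVar("T")


class AuthenticationFailed(Exception):
    """Hypothetical: raised by a tool's login code when a password is rejected."""


def with_credential(cache: Dict[str, str], key: str,
                    fetch_from_cyberark: Callable[[], str],
                    action: Callable[[str], T]) -> T:
    """Run action(password), refreshing a stale cached password once on auth failure."""
    password = cache.get(key)
    from_cache = password is not None
    if password is None:
        password = fetch_from_cyberark()
        cache[key] = password
    try:
        return action(password)
    except AuthenticationFailed:
        if not from_cache:
            raise  # the password came straight from CyberArk, so a retry won't help
        # The cached copy may predate a password change: refetch, update, retry once.
        fresh = fetch_from_cyberark()
        cache[key] = fresh
        return action(fresh)
```

Retrying only once keeps a genuinely bad credential from triggering repeated CyberArk lookups, and it is what makes a long expiry tolerable: a rotated password costs one failed attempt, not days of failures.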

################################## MODULES #####################################

# No additional modules are loaded

################################## NETWORK #####################################

# My web server is on a different host, so I needed to bind to the public
# network interface. I think we'd *want* to bind to localhost in our
# use case.

# bind 127.0.0.1

# Similarly, I think we'd want 'yes' here

protected-mode no

# Might want to use 0 to disable listening on the unencrypted (non-TLS) port

port 6379

tcp-backlog 511

timeout 10

tcp-keepalive 300

################################# TLS/SSL #####################################

tls-port 6380

tls-cert-file /opt/redis/ssl/memcache.pem

tls-key-file /opt/redis/ssl/memcache.key

tls-ca-cert-dir /opt/redis/ssl/ca

# I am not auth'ing clients for simplicity

tls-auth-clients no

tls-protocols "TLSv1.2 TLSv1.3"

tls-prefer-server-ciphers yes

tls-session-caching no

# These would only be set if we were setting up replication / clustering

# tls-replication yes

# tls-cluster yes

################################# GENERAL #####################################

# These settings suit Docker; under systemd we would likely use 'supervised systemd'.

daemonize no

supervised no

#loglevel debug

loglevel notice

logfile "/var/log/redis.log"

syslog-enabled yes

syslog-ident redis

syslog-facility local0

# 1 might be sufficient. We *could* partition different apps into different databases,
# but if our keys are basically "user:target:service", then report_user:RADD:Oracle
# from any web tool would be the same cred. In that case, one database suffices.

databases 3

################################ SNAPSHOTTING ################################

save 900 1

save 300 10

save 60 10000

stop-writes-on-bgsave-error yes

rdbcompression yes

rdbchecksum yes

dbfilename dump.rdb

dir ./

################################## SECURITY ###################################

# I didn't set up any authentication; I'm treating the facts that
# (1) you are on localhost and
# (2) you have the key to decrypt the stuff we stash
# as sufficient proof that you are authorized.

############################## MEMORY MANAGEMENT ################################

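# This is what to evict from the dataset when memory is maxed.
# NOTE: no maxmemory limit is set, so this policy never triggers (0 = no limit
# on 64-bit builds). volatile-lfu also only evicts keys that have an expiry (TTL);
# if no such keys exist, writes fail with an OOM error instead.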

maxmemory-policy volatile-lfu

############################# LAZY FREEING ####################################

lazyfree-lazy-eviction no

lazyfree-lazy-expire no

lazyfree-lazy-server-del no

replica-lazy-flush no

lazyfree-lazy-user-del no

############################ KERNEL OOM CONTROL ##############################

oom-score-adj no

############################## APPEND ONLY MODE ###############################

appendonly no

appendfsync everysec

no-appendfsync-on-rewrite no

auto-aof-rewrite-percentage 100

auto-aof-rewrite-min-size 64mb

aof-load-truncated yes

aof-use-rdb-preamble yes

############################### ADVANCED CONFIG ###############################

hash-max-ziplist-entries 512

hash-max-ziplist-value 64

list-max-ziplist-size -2

list-compress-depth 0

set-max-intset-entries 512

zset-max-ziplist-entries 128

zset-max-ziplist-value 64

hll-sparse-max-bytes 3000

stream-node-max-bytes 4096

stream-node-max-entries 100

activerehashing yes

client-output-buffer-limit normal 0 0 0

client-output-buffer-limit replica 256mb 64mb 60

client-output-buffer-limit pubsub 32mb 8mb 60

dynamic-hz yes

aof-rewrite-incremental-fsync yes

rdb-save-incremental-fsync yes

########################### ACTIVE DEFRAGMENTATION #######################

# Enable active defragmentation

activedefrag no

# Minimum amount of fragmentation waste to start active defrag

active-defrag-ignore-bytes 100mb

# Minimum percentage of fragmentation to start active defrag

active-defrag-threshold-lower 10
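Redis uses the last processed line as the value of a configuration directive, so a repeated single-valued directive (for example, a second `tls-auth-clients` line) quietly overrides the first, and an eviction policy does nothing without a `maxmemory` limit. A hypothetical lint helper for reviewing this file before deployment might look like the following; the set of directives allowed to repeat is a partial list and an assumption.

```python
from collections import defaultdict
from typing import Dict, List

# Directives that may legitimately appear more than once (partial list, an assumption).
MULTI_VALUED = {"save", "client-output-buffer-limit", "include", "loadmodule",
                "rename-command", "user"}


def lint_redis_conf(text: str) -> List[str]:
    """Flag repeated single-valued directives and an eviction policy with no maxmemory."""
    seen: Dict[str, List[int]] = defaultdict(list)
    values: Dict[str, str] = {}
    for lineno, line in enumerate(text.splitlines(), start=1):
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue  # skip blank lines and comments
        parts = stripped.split(None, 1)
        name = parts[0].lower()
        seen[name].append(lineno)
        values[name] = parts[1].strip() if len(parts) > 1 else ""  # last one wins
    problems = []
    for name, lines in seen.items():
        if len(lines) > 1 and name not in MULTI_VALUED:
            problems.append(f"{name} set on lines {lines}; the last value ({values[name]}) wins")
    policy = values.get("maxmemory-policy", "noeviction")
    if policy != "noeviction" and values.get("maxmemory", "0") == "0":
        problems.append(f"maxmemory-policy {policy} has no effect without a maxmemory limit")
    return problems
```

Running it over the config file's text returns a list of human-readable warnings, or an empty list if nothing is flagged.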