I’ve got replicated PostgreSQL database pairs that each hold some 50TB of data. The server operating systems need to be upgraded, but there is a constraint: no in-place upgrades. I don’t get to veto that constraint (i.e. it doesn’t matter that we could just cross our fingers and upgrade a replica … and, if it fails, build new and pull the data again). Unfortunately, trying to add a second replica delays the existing replication. Since all write operations go to the RW server and reads go to the read-only replica, having the read-only copy a day or two out of sync whilst this secondary replica comes online is a non-starter.

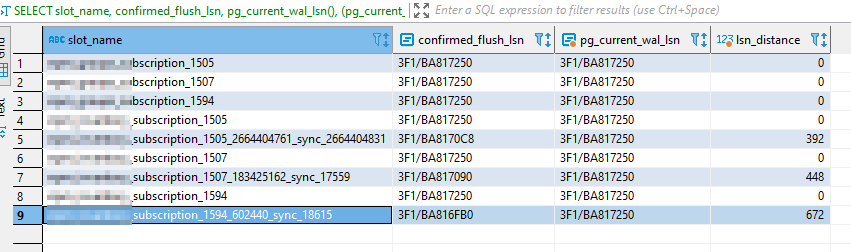

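Fortunately, you can cascade replication: seed the new replica from the current read-only replica. Create a new replication slot on that replica, here new-pg-ro-replica-pgdata. (PostgreSQL only accepts lowercase letters, digits, and underscores in slot names, so if the server rejects the hyphens, use underscores instead and carry the same name through the commands below.) You also need to verify that the new server is listed in the pg_hba.conf file so it can authenticate with the replication account.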
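The slot creation step isn't shown above, so here is a minimal sketch of one way to do it. It checks a candidate slot name against PostgreSQL's naming rule and prints the SQL that would create a physical slot; nothing is executed against a server. The helper names `valid_slot_name` and `create_slot_sql`, the underscore form of the slot name, and the pg_hba.conf address and auth method in the comment are my assumptions, not something from the original setup.

```shell
# Sketch: validate a replication slot name, then print the SQL that creates it.
# The upstream's pg_hba.conf also needs a replication entry for the new server,
# along these lines (address and auth method are placeholders):
#   host  replication  replicatorID  192.0.2.10/32  scram-sha-256

# Slot names may contain only lowercase letters, digits, and underscores,
# and must be 1 to 63 characters long.
valid_slot_name() (
  LC_ALL=C   # keep the a-z range ASCII-only regardless of the caller's locale
  case "$1" in
    ''|*[!a-z0-9_]*) exit 1 ;;
  esac
  [ "${#1}" -le 63 ]
)

# Print the CREATE statement for a valid name; complain and fail otherwise.
create_slot_sql() {
  if ! valid_slot_name "$1"; then
    echo "invalid slot name: $1" >&2
    return 1
  fi
  printf "SELECT pg_create_physical_replication_slot('%s');\n" "$1"
}

create_slot_sql "new_pg_ro_replica_pgdata"
```

Piping that output into psql on the read-only replica, for example `create_slot_sql new_pg_ro_replica_pgdata | psql -h pg-ro-replica.example.net -U postgres -d postgres`, should create the slot (the `postgres` login is an assumption; any role allowed to create slots will do). Whatever name you settle on has to match the `--slot` and `primary_slot_name` values used later. With the slot in place, take the base backup from the read-only replica: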

pg_basebackup -h pg-ro-replica.example.net -D /pgdata -U replicatorID -v -P --wal-method=stream --slot=new-pg-ro-replica-pgdata

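Wait … wait … wait. It’ll finish eventually. Then tweak your recovery.conf. (That file and the standby_mode setting only exist through PostgreSQL 11; from version 12 on, the two primary_* settings go in postgresql.conf instead.)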

standby_mode = 'on'

primary_conninfo = 'host=pg-rw-replica.example.net port=5432 user=replicatorID password=your_password sslmode=require'

primary_slot_name = 'new-pg-ro-replica-pgdata'

And, on PostgreSQL 12 or later, create the standby signal file:

touch /pgdata/standby.signal

Finally, start the server

pg_ctl start -D /pgdata

Voila — a second read-only replica. Now they can decom the old server.
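Before anyone pulls the plug on the old box, it's worth confirming the new replica is really streaming. A rough sketch of two checks: that the new server reports itself as in recovery, and that its slot on the upstream server has an active consumer. The function names are mine, and the `PSQL` override is only there so a wrapper that supplies credentials can be swapped in; the queries use the standard `pg_is_in_recovery()` function and `pg_replication_slots` view.

```shell
# Sketch: post-start checks for a new standby. Only defines helpers;
# nothing runs until you call them.
PSQL="${PSQL:-psql}"

# Run one query and print the bare result (-A unaligned, -t tuples only).
# Arguments: host, user, SQL text.
run_query() {
  "$PSQL" -h "$1" -U "$2" -d postgres -Atc "$3"
}

# Succeeds when the server says it is in recovery, i.e. running as a standby.
# Arguments: host, user.
is_standby() {
  [ "$(run_query "$1" "$2" 'SELECT pg_is_in_recovery();')" = "t" ]
}

# Succeeds when the named slot on the upstream server has an active consumer.
# Arguments: host, user, slot name.
slot_is_active() {
  [ "$(run_query "$1" "$2" \
      "SELECT active FROM pg_replication_slots WHERE slot_name = '$3';")" = "t" ]
}
```

Something like `is_standby new-pg-ro-replica.example.net postgres && echo "standby OK"` would check the new server (that hostname and the `postgres` login are placeholders), and `slot_is_active` pointed at whichever server holds the slot confirms the new replica is attached to it.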